Stable Fast 3D brings better lighting, textures, and materials to AI-generated 3D models

Stability AI has made significant progress in generative AI for 3D objects with its new Stable Fast 3D (SF3D) technique. This development is particularly promising for video game creation.

SF3D builds on Stability AI's earlier TripoSR technology, which could generate 3D models in half a second. SF3D maintains this high speed while addressing issues that alternative methods have struggled with.

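One problem SF3D tackles is burnt-in lighting in the generated 3D models. It occurs when the lighting of the input image, including its shadows and highlights, is transferred directly into the model's texture. That baked-in lighting stays fixed and looks wrong once the model is placed in a scene with different lighting, which limits its usefulness in applications such as games.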

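SF3D solves this by modeling the lighting and the object's reflection properties separately, representing the illumination with spherical Gaussian functions. As a result, the texture holds the object's own colors rather than the lighting of the input photo, and the model looks consistent under different lighting conditions.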

Video: Stability AI
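A spherical Gaussian is a lobe on the sphere of directions: G(v) = a · exp(λ(μ·v − 1)), where μ is the lobe's axis, λ its sharpness, and a its amplitude. The Python sketch below evaluates a lighting environment built from a few such lobes. The lobe values and function names are made up for illustration, and this is not SF3D's implementation; it only shows why lighting stored as a handful of lobe parameters can be swapped without touching the object's texture.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def spherical_gaussian(direction, axis, sharpness, amplitude):
    """G(v) = amplitude * exp(sharpness * (dot(axis, v) - 1)); peaks along axis."""
    cos_angle = dot(normalize(direction), normalize(axis))
    return amplitude * math.exp(sharpness * (cos_angle - 1.0))

def incoming_light(direction, lobes):
    """Sum the contributions of all lobes arriving from one direction."""
    return sum(spherical_gaussian(direction, *lobe) for lobe in lobes)

# Hypothetical environment: a strong, narrow key light from above plus a
# weak, broad fill light from below. Each lobe is (axis, sharpness, amplitude).
studio = [((0.0, 1.0, 0.0), 20.0, 3.0), ((0.0, -1.0, 0.0), 2.0, 0.3)]

print(round(incoming_light((0.0, 1.0, 0.0), studio), 3))  # 3.005 (from above)
print(round(incoming_light((1.0, 0.0, 0.0), studio), 3))  # 0.041 (from the side)
```

Relighting the object in this sketch means replacing `studio` with a different lobe list; the color texture itself is never modified.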

Fewer polygons, but more details

Another weakness of other methods is that they represent surface color with vertex colors, which are color values stored directly at the vertices of the 3D model. Because color can only change from one vertex to the next, fine detail requires a dense mesh with many polygons, which is inefficient in applications such as video games.
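To see why vertex colors scale poorly, consider how a renderer colors a point inside a triangle: it blends the three corner colors using barycentric weights. The Python sketch below is a generic illustration, not code from SF3D or any particular renderer. It shows that a triangle can only display smooth blends of its corners, so every additional color detail needs additional vertices.

```python
def interpolate_vertex_colors(weights, colors):
    """Blend three RGB vertex colors with barycentric weights (w0 + w1 + w2 == 1)."""
    return tuple(
        sum(w * color[channel] for w, color in zip(weights, colors))
        for channel in range(3)
    )

red, green, blue = (255.0, 0.0, 0.0), (0.0, 255.0, 0.0), (0.0, 0.0, 255.0)
triangle = (red, green, blue)

# Every point inside the triangle is some blend of its three corner colors,
# so a thin dark line running across the face cannot be represented.
center = interpolate_vertex_colors((1 / 3, 1 / 3, 1 / 3), triangle)
print([round(c, 1) for c in center])  # [85.0, 85.0, 85.0]

# Matching the detail of a 1024 x 1024 texture would take roughly one vertex per texel.
texels = 1024 * 1024
print(texels)  # 1048576
```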

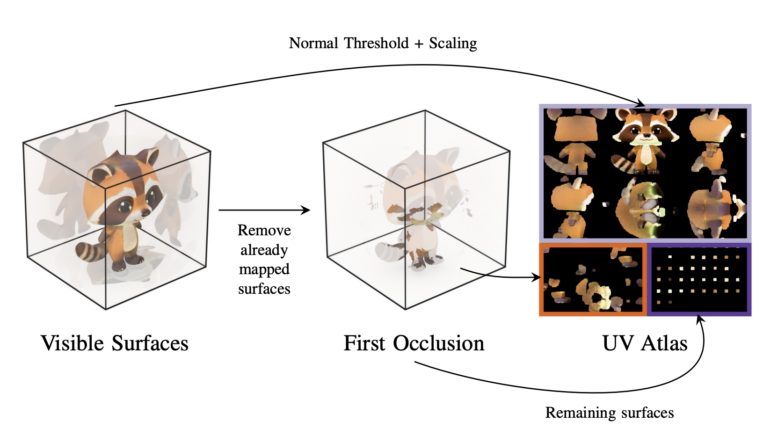

SF3D overcomes this with UV unwrapping, which maps the surface of the 3D model onto a flat 2D texture image. Color detail then lives in the texture rather than in the vertices, so the model can show finer details with fewer polygons than previous methods.
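UV unwrapping assigns each vertex a 2D coordinate (u, v) that says where its color is stored in the texture image. The Python sketch below shows one of the simplest strategies, box (or cube) projection, which flattens each triangle onto the axis-aligned plane it faces most directly. It is a simplified illustration under that assumption, not SF3D's unwrapping module: real unwrappers also split the surface into charts, scale them, and pack them into a single texture atlas without overlaps.

```python
def subtract(a, b):
    return tuple(x - y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def face_normal(v0, v1, v2):
    """Unnormalized normal of triangle (v0, v1, v2) via the cross product."""
    return cross(subtract(v1, v0), subtract(v2, v0))

def box_project_uvs(v0, v1, v2):
    """Assign (u, v) by dropping the axis the face points along most strongly."""
    normal = face_normal(v0, v1, v2)
    dominant = max(range(3), key=lambda i: abs(normal[i]))
    u_axis, v_axis = [i for i in range(3) if i != dominant]
    return [(p[u_axis], p[v_axis]) for p in (v0, v1, v2)]

# A triangle in the plane z = 0.5 faces along z, so it keeps its x and y.
print(box_project_uvs((0, 0, 0.5), (1, 0, 0.5), (0, 1, 0.5)))  # [(0, 0), (1, 0), (0, 1)]
# A triangle on the side x = 2 faces along x, so it keeps its y and z.
print(box_project_uvs((2, 0, 0), (2, 1, 0), (2, 0, 1)))  # [(0, 0), (1, 0), (0, 1)]
```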

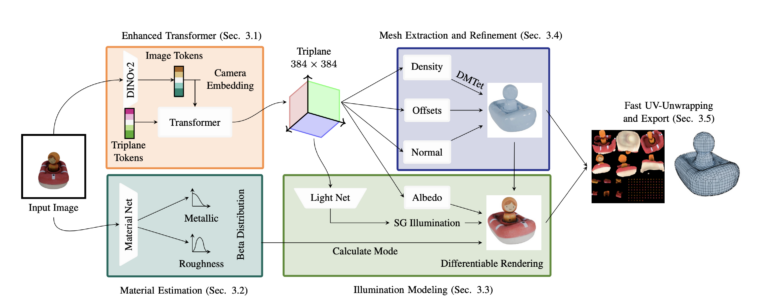

Other methods also tend to produce "staircase artifacts". These are caused by the marching cubes algorithm, which feed-forward 3D generators often use to extract a polygon mesh from the generated 3D data. SF3D uses a more efficient architecture that produces higher-resolution 3D data and generates meshes with DMTet, an improved algorithm based on marching tetrahedra.

In addition, SF3D slightly shifts the mesh vertices and uses normal maps to achieve smoother surfaces. A normal map stores a surface orientation for every point of the model's texture, so lighting can reveal detail that the geometry itself does not contain.
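A normal map is usually stored as an RGB image in which each channel encodes one component of the surface normal, remapped from the range -1 to 1 onto 0 to 255. The Python sketch below decodes two texels and shades them with a simple Lambertian (diffuse) model. It assumes a tangent-space map on a flat patch whose tangent frame coincides with the world axes, which lets it skip the per-vertex tangent transform that real renderers apply; the texel colors are illustrative values.

```python
import math

def decode_normal(rgb):
    """Convert an 8-bit normal-map color to a unit vector (0..255 -> -1..1 per axis)."""
    n = [channel / 255.0 * 2.0 - 1.0 for channel in rgb]
    length = math.sqrt(sum(c * c for c in n))
    return [c / length for c in n]

def lambert(normal, light_dir):
    """Diffuse brightness: cosine between unit normal and light direction, clamped at 0."""
    length = math.sqrt(sum(c * c for c in light_dir))
    return max(0.0, sum(n * l / length for n, l in zip(normal, light_dir)))

light = (1.0, 0.0, 1.0)         # light arriving at 45 degrees
flat_texel = (128, 128, 255)    # the typical "flat" normal-map color
bumped_texel = (218, 128, 218)  # a texel tilted toward +x, e.g. the side of a groove

# Same flat geometry, different shading: the normal map alone creates the detail.
print(round(lambert(decode_normal(flat_texel), light), 3))
print(round(lambert(decode_normal(bumped_texel), light), 3))
```

The flat texel receives roughly 71 percent of the full diffuse brightness, while the tilted texel, which faces the light, is lit almost fully, even though the underlying polygon is the same.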

To further enhance the appearance of the generated objects, SF3D predicts overall material properties such as roughness and metallic qualities, which determine how the surface reflects light. The effect is most noticeable when objects are displayed under different lighting conditions.
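Renderers commonly consume roughness and metallic values through the metallic-roughness model used by glTF 2.0 and many game engines. The Python sketch below assumes that convention and is not SF3D's renderer: metallic decides whether the base color tints the reflection, and roughness controls how tightly the GGX microfacet distribution concentrates the highlight. The gold base color and the function names are illustrative.

```python
import math

def mix(a, b, t):
    """Linear blend between a (t = 0) and b (t = 1)."""
    return a * (1.0 - t) + b * t

def base_reflectance(base_color, metallic):
    """F0: dielectrics reflect about 4% head-on; metals reflect their base color."""
    return tuple(mix(0.04, c, metallic) for c in base_color)

def ggx_distribution(n_dot_h, roughness):
    """GGX/Trowbridge-Reitz microfacet distribution with alpha = roughness**2."""
    alpha = roughness * roughness
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)

def schlick_fresnel(f0, v_dot_h):
    """Schlick's approximation: reflectance rises toward 1 at grazing angles."""
    return tuple(f + (1.0 - f) * (1.0 - v_dot_h) ** 5 for f in f0)

gold = (1.0, 0.77, 0.34)  # illustrative base color
print(base_reflectance(gold, metallic=1.0))  # (1.0, 0.77, 0.34): tinted metal reflection
print(base_reflectance(gold, metallic=0.0))  # (0.04, 0.04, 0.04): neutral dielectric

# Near-grazing view: even the dielectric reflects strongly.
print(round(schlick_fresnel(base_reflectance(gold, 0.0), v_dot_h=0.1)[0], 2))

# Peak of the highlight (n_dot_h = 1): smooth surfaces concentrate the light.
for roughness in (0.2, 0.8):
    print(roughness, round(ggx_distribution(1.0, roughness), 2))
```

With these formulas the highlight peak of a surface with roughness 0.2 is about 199, versus under 1 at roughness 0.8, which is why a single roughness value visibly changes how sharp reflections look when the lighting moves.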

The SF3D pipeline consists of five main components: an enhanced neural network that predicts higher-resolution 3D data, a network that estimates material properties, an illumination prediction module, a step that extracts and refines the polygon mesh, and a fast module that performs UV unwrapping and exports the final 3D model.
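The five stages can be sketched as a linear data flow. In the Python skeleton below, every function name, field, and placeholder value is hypothetical and the stage bodies are stubs; it does not use SF3D's code or API and only illustrates which outputs each stage hands to the next. The `.glb` file extension is likewise an assumption about the export format.

```python
from dataclasses import dataclass, field

@dataclass
class Asset:
    """Everything the hypothetical pipeline accumulates for one input image."""
    image: str
    features: str = ""
    material: dict = field(default_factory=dict)
    lighting: list = field(default_factory=list)
    mesh: str = ""
    export_path: str = ""

# Each stub stands in for one of the five components described above.
def predict_3d_data(a: Asset) -> Asset:
    a.features = f"high-res 3D data for {a.image}"
    return a

def estimate_material(a: Asset) -> Asset:
    a.material = {"roughness": 0.5, "metallic": 0.0}  # placeholder object-wide values
    return a

def estimate_illumination(a: Asset) -> Asset:
    a.lighting = [((0.0, 1.0, 0.0), 20.0, 3.0)]  # placeholder spherical Gaussian lobe
    return a

def extract_and_refine_mesh(a: Asset) -> Asset:
    a.mesh = f"refined mesh from {a.features}"  # depends on the 3D data stage
    return a

def unwrap_and_export(a: Asset) -> Asset:
    a.export_path = a.image.rsplit(".", 1)[0] + ".glb"
    return a

PIPELINE = [predict_3d_data, estimate_material, estimate_illumination,
            extract_and_refine_mesh, unwrap_and_export]

def run(image: str) -> Asset:
    """Pass one asset record through every stage in order."""
    asset = Asset(image=image)
    for stage in PIPELINE:
        asset = stage(asset)
    return asset

result = run("chair.png")
print(result.export_path, result.material)
```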

In Stability AI's experiments, SF3D outperforms existing methods. It generates compact 3D models (under 1 MB) in just 0.5 seconds. Combined with a text-to-image model, it can create a 3D model from a text description in about one second.

Stability AI has published interactive examples on GitHub, released the code as open source, and provides a demo on Hugging Face. SF3D adds to Stability AI's recent work in 3D modeling, which includes Stable 3D, Stable Zero123, Stable Video 3D, and Stable Video 4D.
