Are open-source AI models more dangerous than closed models like GPT-4? A new study says no, and offers recommendations for policymakers.

Open Foundation Models (OFMs) offer significant benefits by fostering competition, accelerating innovation, and improving the distribution of power, concludes a study by the Stanford Institute for Human-Centered Artificial Intelligence. In the study, the authors examined the social and political implications of OFMs, compared potential risks with those of closed models, and offered recommendations for policymakers.

The risks of open foundation models examined include disinformation, biorisks, cybersecurity, spear phishing, non-consensual intimate images (NCII), and child sexual abuse material (CSAM). The study concludes that there is currently limited evidence of the marginal risk of OFMs compared to closed models or existing technologies.

Where the risks are better documented, as in the case of NCII and CSAM, proposals to limit the proliferation of OFMs by licensing computationally intensive models are inappropriate because the text-to-image models that cause these harms require far fewer resources to train, the study says. The existing safeguards on closed models can also be circumvented. However, the authors emphasize that further research is needed to better assess the risks.

Warning against overregulation of open-source models

At the same time, the authors warn that some policy proposals, such as liability for damages caused by downstream use or strict content provenance requirements, could have a disproportionate impact on the OFM ecosystem. Policymakers should therefore exercise caution when implementing such policies and consult with OFM developers.

The key takeaways, according to the team, are:

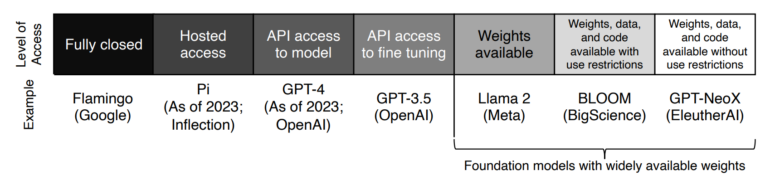

- Open foundation models, meaning models with widely available weights, provide significant benefits by combatting market concentration, catalyzing innovation, and improving transparency.

- Some policy proposals have focused on restricting open foundation models. The critical question is the marginal risk of open foundation models relative to (a) closed models or (b) pre-existing technologies, but current evidence of this marginal risk remains quite limited.

- Some interventions are better targeted at choke points downstream of the foundation model layer.

- Several current policy proposals (e.g., liability for downstream harm, licensing) are likely to disproportionately damage open foundation model developers.

- Policymakers should explicitly consider potential unintended consequences of AI regulation on the vibrant innovation ecosystem around open foundation models.

Meta's head of AI, Yann LeCun, described the study on LinkedIn as "A big bunch of nails in the coffin of the idea that open source AI models are more dangerous than closed ones." Since the release of Meta's Llama 2, there has been "an explosion of applications built on top of open source LLMs, and none of the catastrophe scenarios the AI doomers had predicted," LeCun said.