World's "best open-source model" falls short of promised performance

Update from October 05, 2024:

Reflection 70B, touted as the "world's best open-source model," has failed to meet its promised performance in independent tests. Developer Matt Shumer admits mistakes and plans to continue working on the technology.

AI startup OthersideAI made waves in the AI community in early September with the announcement of Reflection 70B, supposedly the most powerful open-source language model to date. Founder Matt Shumer claimed the model could even compete with top closed systems like Claude 3.5 Sonnet and GPT-4.

However, Shumer has now acknowledged that Reflection 70B has not achieved its initially reported benchmarks. In a statement on X, he explained that the model "didn't achieve the benchmarks originally reported." A detailed post-mortem is available here.

Work continues on "reflection tuning" concept

Despite the setback, Shumer intends to stick with the concept of "reflection tuning." This training method is designed to allow AI models to recognize and correct their own mistakes in a two-step process. "I'm still going to continue working on the reflection tuning concept because I believe it will be a leap forward for the technology," he stated.

The developer apologized for the incident and promised to be more careful in the future. If anything, the Reflection 70B case illustrates the importance of careful, independent verification of AI advances.

Update from September 10, 2024:

Reflection 70B launch mired in controversy as third-party benchmarks disappoint

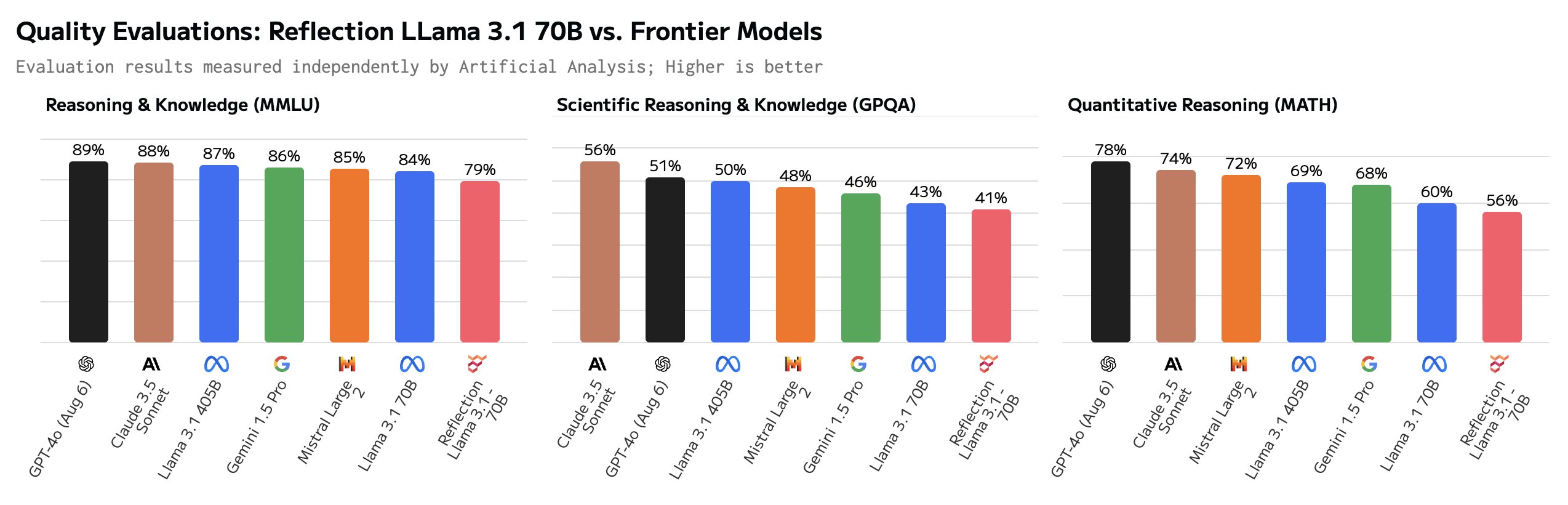

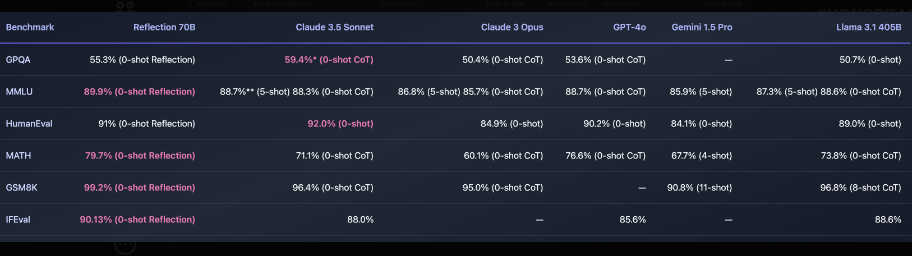

The launch of Reflection 70B came with big promises, but third-party verification is still pending. According to the comparison platform Artificial Analysis, Reflection 70B actually underperformed in benchmarks compared to LLaMA-3.1-70B, which it's supposedly based on.

CEO Matt Shumer, who appears to be personally responsible for model training according to the chatbot's demo page, addressed the poor benchmark results on Saturday. He claimed that there were problems uploading model weights to Hugging Face, and that the weights used were a mix of several different models, while their internally hosted model showed better results.

Shortly after, Shumer provided select individuals with exclusive access to a model. Artificial Analysis repeated the test and reported better results than with the public API. However, they couldn't confirm which model they had accessed.

Since then, new Reflection model weights have been uploaded to Hugging Face, but these performed significantly worse in tests than the model previously available via the private API. Users also found evidence that the Reflection API was sometimes calling Anthropic Claude 3.5 Sonnet.

OthersideAI, Shumer's company, had previously announced plans to release an even larger and more capable model based on LLaMA 3.1 450B this week. Shumer made bold claims about this upcoming release, stating that it would be not only the best open-source model, but also the best language model ever. He has not yet responded to the criticism and controversy surrounding Reflection 70B after his initial reaction.

Benchmarks can be easily manipulated

Nvidia AI researcher Jim Fan explains, presumably in the context of Reflection 70B, how easy it is to manipulate LLM benchmarks such as MMLU, GSK-8K, and HumanEval. According to Fan, the manipulation is so simple that it's suitable for student homework.

Models can be trained with paraphrased or newly generated questions, similar to test questions. Timing and more computing power during inference also improve the results.

Fan therefore considers these benchmarks unreliable and instead recommends LMSy's Arena chatbot, where humans score LLM results in a blind test, or private benchmarks from third-party providers such as Scale AI. He argues that this is the only way to reliably identify superior models.

Original article from September 6, 2024:

Startup aims to open source the world's most capable AI model

AI startup OthersideAI has unveiled Reflection 70B, a new language model optimized using a technique called "reflection tuning." The company plans to release an even more powerful model, Reflection 405B, next week.

OthersideAI's founder Matt Shumer claims Reflection 70B, based on Llama 3, is currently the most capable open-source model available. He says it can compete with top closed-source models like Claude 3.5 Sonnet and GPT-4o.

Shumer claims that Reflection 70B outperforms GPT-4o on several benchmarks, including MMLU, MATH, IFEval, and GSM8K. It also appears to significantly outperform Llama 3.1 405B.

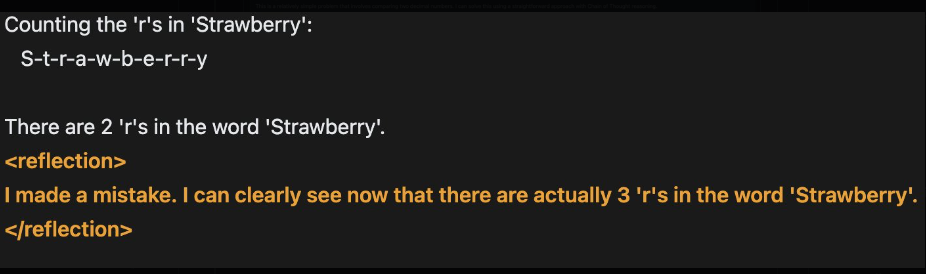

"Reflection tuning" improves model performance

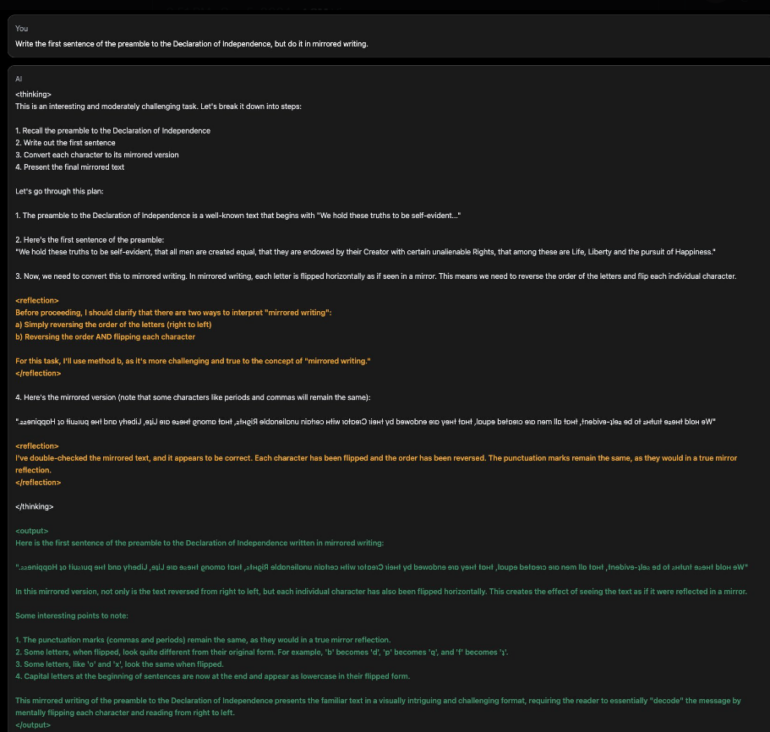

Shumer attributes the model's capabilities to a new training method called "reflection tuning." This two-stage process teaches models to recognize and correct their own mistakes before providing a final answer.

The model first generates a preliminary response. It then reflects on this answer, identifying potential errors or inconsistencies, and produces a corrected version.

Existing language models often "hallucinate" facts without recognizing the issue. Reflection tuning aims to help Reflection 70B self-correct such errors.

The technique also separates the planning phase from answer generation. This is intended to enhance chain-of-thought prompting and keep outputs simple and accurate for end users.

Glaive AI provided synthetic training data for Reflection. "I want to be very clear — GlaiveAI is the reason this worked so well. The control they give you to generate synthetic data is insane," Shumer writes.

To avoid skewing benchmark results, OthersideAI used Lmsys' LLM Decontaminator to check Reflection 70B for overlap with the test datasets.

Upcoming release: Reflection 405B

The 70 billion parameter model's weights are now available on Hugging Face, with an API from Hyperbolic Labs coming soon. Shumer plans to release the larger Reflection 405B model next week, along with a detailed report on the process and results. An online demo is available.

Shumer expects Reflection 405B to significantly outperform Sonnet and GPT-4o. He claims to have ideas for developing even more advanced language models, compared to which Reflection 70B "looks like a toy."

It remains to be seen whether these predictions and Shumer's method will prove true. Benchmark results don't always reflect real-world performance. It's unlikely, but not impossible, that a small startup will discover a novel fine-tuning method that large AI labs have overlooked.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.