A new study by researchers at MIT and Cornell University suggests that conversations with language models like GPT-4 can significantly weaken belief in conspiracy theories.

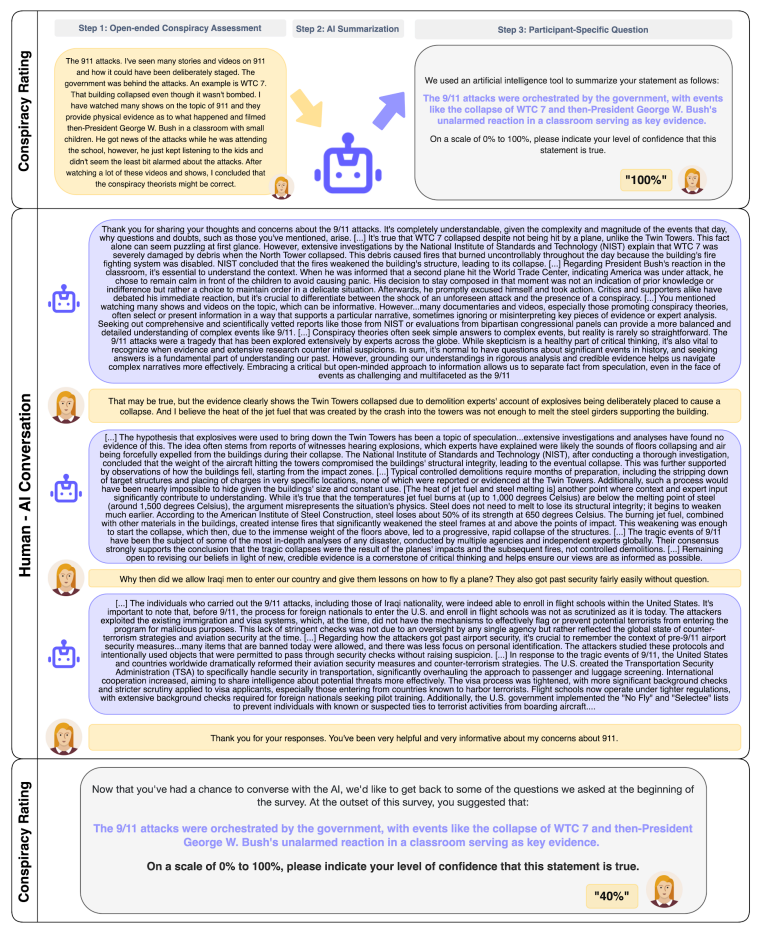

In the study, 2,190 conspiracy believers first described in detail a conspiracy theory they believed in and the evidence they thought supported it. They then engaged in a three-stage conversation with GPT-4 that attempted to debunk the claims through alternative explanations, promotion of critical thinking, and counter-evidence.

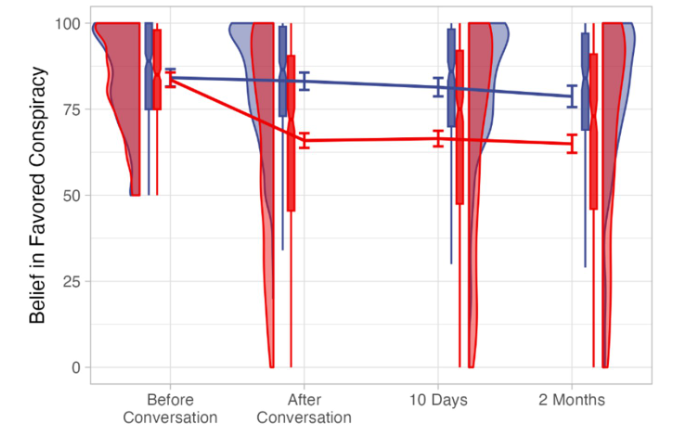

The results show that belief in the conspiracy theory dropped by about 20 percentage points after the conversation with the AI. This effect remained unchanged after two months and held true even for very persistent conspiracy theories, such as the alleged fraud in the 2020 U.S. presidential election or theories about the COVID-19 pandemic, according to the researchers.

Remarkably, the AI intervention worked even for people with deeply held beliefs, and even reduced belief in other, undiscussed conspiracy theories. The study suggests that tailored evidence and arguments can change conspiracy beliefs.

However, although belief in the conspiracy theories dropped significantly, it remained at a high level of more than 60 percent after the conversation. Participants whose belief was below the 50 percent mark before the conversation were excluded from the study.

According to the researchers, the study contradicts the common view that conspiracy beliefs are almost impervious to counterargument because they satisfy the psychological needs of believers. Instead, the results suggest that detailed and tailored evidence can undermine such beliefs.

Do conspiracy theorists trust machines more than people?

The study has some limitations. For example, the participants knew they were talking to an AI, and the results might have been different had they believed they were talking to a human. One plausible explanation is that participants placed more trust in the supposedly neutral information from a machine.

More research is needed to better understand which persuasion techniques make the AI successful. Ethical issues surrounding the use of AI models to influence beliefs also need to be discussed, the researchers say.

Despite these limitations, the study shows that evidence-based approaches to combating conspiracy theories are promising, and that language models can be helpful in this regard. Conversely, they could also lead people to believe in conspiracy theories if no precautions are taken, the researchers warn.

The study has many implications, ranging from the possible use of AI dialogues on platforms frequented by conspiracy theorists to targeted advertising that directs users to such AIs. The researchers suggest further studies to explore the limits of persuasion across a wide range of beliefs, and to determine which beliefs are more resistant to evidence and argument.

In March, a study showed that LLMs can be more persuasive than humans in one-on-one debates. OpenAI chief Sam Altman has already warned about the superhuman persuasiveness of large language models, which could lead to "very strange outcomes."