Runway Gen-2 is one of the first commercial AI models that can generate short videos from text or images. We show you what Runway Gen-2 can do and how to get started.

What Midjourney is to AI image generation, Runway is to AI video: the most technically advanced product on the market today. Gen-1 supported video-to-video conversion; Gen-2, unveiled in March, lets you create new videos from text, from text plus an image, or from an image alone. Most recently, Runway released a major update to Gen-2 in early July.

In this article, we collect great examples that demonstrate the potential of AI video generation and explain how to get started.

Use Runway Gen-2: Here's how

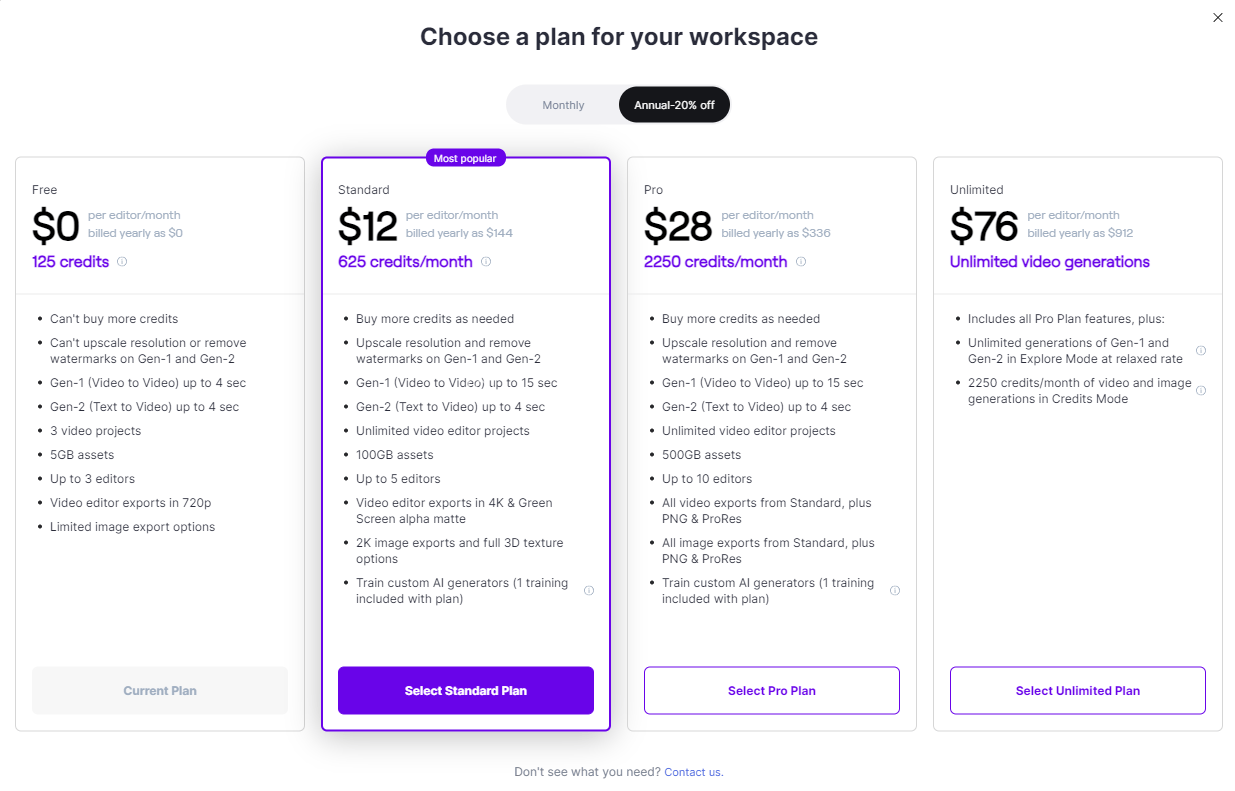

The startup unveiled Runway Gen-2 in March and launched it in June. Currently, the software is available in the browser and as an iOS app for smartphones. Runway offers 125 credits per month for free. One credit equals one second of video generated.

Free users can create clips of up to four seconds, the same maximum as on the paid plans. No credit card is required for the free tier.
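To see what the free allowance buys in practice, here is a small budget calculation. It is only a sketch that assumes the figures quoted above (125 free credits, one credit per second, four-second clips) and ignores any cost of previews, which the article does not specify; the function name `clip_budget` is hypothetical and has nothing to do with Runway's own software.

```python
# Rough credit budget for Runway Gen-2's free tier.
# Assumption: figures taken from the article text (125 credits,
# 1 credit per second, 4-second maximum clip), not from any Runway API.

FREE_CREDITS = 125
CREDITS_PER_SECOND = 1
MAX_CLIP_SECONDS = 4


def clip_budget(credits: int,
                clip_seconds: int = MAX_CLIP_SECONDS,
                credits_per_second: int = CREDITS_PER_SECOND) -> tuple[int, int]:
    """Return (number of full clips affordable, credits left over)."""
    cost_per_clip = clip_seconds * credits_per_second
    return divmod(credits, cost_per_clip)


if __name__ == "__main__":
    clips, leftover = clip_budget(FREE_CREDITS)
    print(f"{clips} four-second clips, {leftover} credit(s) left over")
```

Under these assumptions, the free tier covers 31 full-length clips with one credit to spare, so the preview step is worth using before committing credits.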

To avoid wasting precious credits on results that go in a completely different direction than intended, there is a "Preview" button next to the "Generate" button. After a few moments, Runway shows four still frames of how the AI might interpret the prompt, and you can decide whether to continue.

If one of the four frames matches what you want, select it and Gen-2 will generate a four-second clip from it. Depending on server load, rendering takes anywhere from a few seconds to a few minutes; the finished video is saved automatically to your personal library for later download.

Once downloaded, the MP4 videos are just over 1 megabyte in size and have a relatively low resolution of 896 x 512 pixels, close to the popular 16:9 format.
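The numbers above can be sanity-checked with a little arithmetic: how close 896 x 512 is to 16:9, and what average bitrate a roughly 1 MB, four-second file implies. This is a sketch under stated assumptions; in particular, "about 1 MB" is taken as 1,000,000 bytes, and the helper names are hypothetical.

```python
from fractions import Fraction


def aspect_ratio(width: int, height: int) -> Fraction:
    """Exact aspect ratio as a reduced fraction."""
    return Fraction(width, height)


def avg_bitrate_mbps(size_bytes: int, duration_s: float) -> float:
    """Average bitrate in megabits per second (decimal units)."""
    return size_bytes * 8 / duration_s / 1_000_000


if __name__ == "__main__":
    ratio = aspect_ratio(896, 512)
    # Compare the clip's ratio with true 16:9 as decimals.
    print(ratio, float(ratio), float(Fraction(16, 9)))
    # Assumption: "about 1 MB" = 1,000,000 bytes for a 4-second clip.
    print(avg_bitrate_mbps(1_000_000, 4))
```

896 x 512 reduces to exactly 7:4 (1.75), slightly narrower than true 16:9 (about 1.78), and a 1 MB, four-second clip averages about 2 Mbit/s.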

Some great Runway Gen-2 examples

In the following clip, the images are synthetic, but the sound is real and was added in a separate step. With the right audio, our eyes are more easily fooled, so AI videos are sometimes recognizable as such only at second glance.

A few moments of tranquility with Gen-2 Image to Video. pic.twitter.com/DMmJltQ7Ld

— Runway (@runwayml) July 28, 2023

How would a lifelike Pikachu move? Runway Gen-2 can visualize this if you give the system an appropriate initial image. This realistic Pikachu, created with Midjourney, is animated by Gen-2 in a four-second clip.

However, when Runway's Gen 2 manages to animate, it often pulls off some crazy stuff. When it works, it really delivers some incredible results! #Runway #Gen2 pic.twitter.com/iU1TzCiBIa

— Pierrick Chevallier | IA (@CharaspowerAI) July 24, 2023

Twitter user @I_need_moneyyyy has used Midjourney images as the starting point for numerous Gen-2 clips. They span many styles, from photorealism to cartoons to scenes that even Hollywood could only achieve with CGI.

The MidJourney + Runway Gen-2 combo is just incredible! 🤯

Perfect for optimizing your TikToks 👍 pic.twitter.com/xqfuV7E82o

— I NEED MONEY 💸 (@I_need_moneyyyy) July 25, 2023

Javi Lopez also shows the photo and the dynamic AI animation side by side. Unfortunately, he does not mention whether he included a text prompt alongside the image.

🔴 Runway Gen-2 image to video has been released!

We are now one step closer to being able to create our own movies!

Here are my top 10 generations I've crafted in the last 48 hours from my very own experiments! pic.twitter.com/GWzjkGWWJX

— Javi Lopez ⛩️ (@javilopen) July 24, 2023

Min Choi demonstrates that editing and music are key to making AI clips look good. He produced a short music video with Gen-2 that tells a story across several scenes.

Runway Gen-2 Image-to-Video is a game changer 🤯

You can craft a music video or short film draft from Midjourney still images, combined with music/voice in record time.

Here is how I created this music video and 11 INSANE examples, Sound ON

(THREAD 🧵) 1/13 pic.twitter.com/dnz62GCB8R

— Min Choi (@minchoi) July 26, 2023

The creators of the YouTube channel Curious Refuge made a trailer for the movie crossover of the year, "Barbie" meets "Oppenheimer". Exactly how they did it is something they reveal only in their $499 bootcamp, but Runway Gen-2 is involved. They, too, use clever editing to build a coherent piece out of four-second clips.

Javi Lopez combined the following tools to create a supernatural AI movie scene: Midjourney for the image, Runway Gen-2 for the video, Elevenlabs for the AI voice, and TikTok's free video editor CapCut for the editing and particle effects.

🔴 Quick test generating a scene using Generative AI

Midjourney + Runway (Gen-2) + Elevenlabs (the voice) + CapCut (sound/particle effects).

"Evil and good, they're merely a matter of perspective. We all are players in life's theatre."

Hollywood, here we go! pic.twitter.com/aR0qI4MuHR

— Javi Lopez ⛩️ (@javilopen) July 24, 2023

Tips and observations from the Runway community on Gen-2

Many users, including Mia Blume, share their experiences with Gen-2 on Twitter. Here are some of their observations and tips for using Runway Gen-2.

- Runway Gen-2 tends to give more weight to the text than to the image in a mixed prompt. There is (currently) no way to change this weighting.

- Just as image models have long struggled to produce realistic hands, Runway Gen-2 struggles to produce realistic walking animations.

- Gen-2 struggles to generate videos from illustrations. Photorealistic images work better.

- The current four-second maximum clip length is a severe limitation, but it can also spur creativity.