The "best open-weight LLM from a US company" is a finetuned Deepseek model

Deep Cogito bills its latest release, Cogito-v2.1-671B, as the "best open-weight LLM by a US company." The model is a finetune of a Deepseek base model from November 2024 (presumably Deepseek R1-Lite, since Deepseek-V3-Base did not ship until December). After training the model further in-house, Deep Cogito says it now competes with leading closed and open systems and outperforms other US open models such as GPT-OSS-120B.

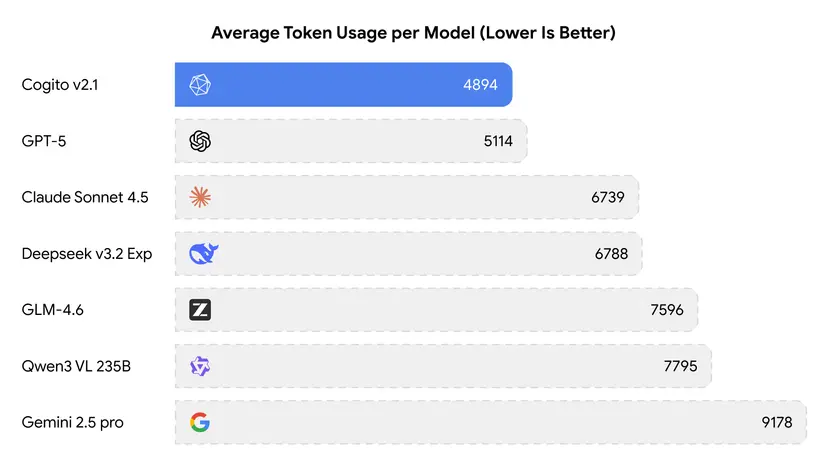

According to Deep Cogito, Cogito v2.1's main advantage is efficiency: the model uses far fewer tokens on standard benchmarks than comparable systems, which can lower API costs. The team also trained it with process supervision of its reasoning steps, which lets it reach conclusions with shorter reasoning chains. Deep Cogito reports improvements in instruction following, programming tasks, long-form queries, and creative writing. Users can try the model for free at chat.deepcogito.com, where the developer says chats are not stored. The model weights are available on Hugging Face, and smaller versions are planned.
