The scientist who taught AI to see now wants it to understand space

Fei‑Fei Li believes the next leap in AI won’t come from language but from space. Only by understanding motion, distance, and physical relationships, she says, can machines become truly creative partners.

Half a billion years ago, evolution gave animals their first sense of where they were in the world. Now humanity is close to building that skill into silicon, or at least that’s the vision of Stanford AI pioneer Fei‑Fei Li, co‑creator of ImageNet and founder of the research startup World Labs.

In a recent essay, Li argues that the ability to reason about the physical world will define the next frontier of artificial intelligence.

Why current AI can’t see the world it describes

Li points out that today’s large language models (LLMs) are brilliant with text but nearly blind to physics. Even multimodal versions that process images struggle to estimate distance, orientation, or size. Ask them to rotate an object mentally or predict where a ball will land, and they usually fail.

"While current state‑of‑the‑art AI can excel at reading, writing, research, and pattern recognition in data, these same models bear fundamental limitations when representing or interacting with the physical world," Li writes.

Humans, on the other hand, seem to integrate perception and meaning seamlessly. We don’t just recognize a coffee mug; we instantly grasp its size, its weight, and where it sits in space. That implicit spatial reasoning, says Li, is something AI still largely lacks.

The cognitive scaffold that made us intelligent

Li traces the roots of intelligence back to the simplest perceptual loops. Long before animals could nurture offspring or communicate, they sensed and moved, starting a feedback cycle that eventually gave rise to thought itself. "Thus, many scientists have conjectured that perception and action became the core loop driving the evolution of intelligence," Li notes.

That same perception‑action loop underpins everything from driving a car to sketching a building or catching a ball. Words can describe these acts, but they can’t reproduce the intuition behind them.

How spatial insight powered human discovery

Throughout history, Li writes, breakthroughs have often come from seeing the world (literally) differently: Eratosthenes calculated Earth’s circumference using shadows cast in two Egyptian cities at the same moment. James Hargreaves revolutionized the textile industry with the "Spinning Jenny," realizing that placing multiple spindles in parallel let one worker spin several threads at once. Watson and Crick cracked the structure of DNA only after wrestling with metal and wire models until the geometry clicked.

"In each case, spatial intelligence drove civilization forward when scientists and inventors had to manipulate objects, visualize structures, and reason about physical spaces, none of which can be captured in text alone," Li says.

From language models to world models

To replicate this kind of reasoning, Li argues, AI needs world models. Unlike LLMs, which generate words, these models would generate and interact with coherent 3D worlds governed by the laws of physics.

A true world model, she says, must be generative (able to create consistent environments), multimodal (capable of processing text, images, videos, depth data, or gestures), and interactive (able to predict how actions change a scene).
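To make the "interactive" requirement more concrete, here is a minimal Python sketch, assuming a toy scene that contains a single ball. In this framing, a world model exposes something like a `step(state, action)` function that predicts the next state of the scene. The names `BallState`, `Push`, `step`, and `rollout_until_landing` are hypothetical, and the physics (gravity plus a velocity impulse) is hand‑coded here, whereas a learned world model would have to infer such dynamics from data.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2; hand-coded stand-in for dynamics a learned model would infer


@dataclass(frozen=True)
class BallState:
    x: float   # horizontal position (m)
    y: float   # height above the ground (m)
    vx: float  # horizontal velocity (m/s)
    vy: float  # vertical velocity (m/s)


@dataclass(frozen=True)
class Push:
    dvx: float = 0.0  # instantaneous change in horizontal velocity (m/s)
    dvy: float = 0.0  # instantaneous change in vertical velocity (m/s)


def step(state: BallState, action: Push, dt: float = 0.01) -> BallState:
    """Predict the scene one time step after applying `action` (semi-implicit Euler)."""
    vx = state.vx + action.dvx
    vy = state.vy + action.dvy + GRAVITY * dt
    return BallState(state.x + vx * dt, state.y + vy * dt, vx, vy)


def rollout_until_landing(state: BallState, action: Push, dt: float = 0.01,
                          max_steps: int = 100_000) -> BallState:
    """Apply `action` once, then let the ball move freely until it reaches the ground."""
    state = step(state, action, dt)
    for _ in range(max_steps):
        if state.y <= 0.0:
            break
        state = step(state, Push(), dt)
    return state


start = BallState(x=0.0, y=1.0, vx=0.0, vy=0.0)
for push in (Push(dvx=1.0), Push(dvx=2.0)):
    landing = rollout_until_landing(start, push)
    print(f"push of {push.dvx} m/s -> lands near x = {landing.x:.2f} m")
```

Comparing rollouts for different pushes is the kind of "what happens if I do this?" query the interactive property is meant to answer; the open research problem is learning `step` from raw observations rather than writing it by hand.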

"The scope of this challenge exceeds anything AI has faced before," Li writes. Language, after all, is abstract, while worlds are bound by physics.

Training AIs to understand reality

At World Labs, Li’s team is tackling what she calls “the hardest unsolved problem in AI.” The goal is to find a training objective as simple and universal as next‑token prediction (the rule that drives LLMs), but adapted for space, motion, and causality.
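For readers unfamiliar with next‑token prediction, the objective itself is simple: a model is scored by the average negative log‑probability it assigns to each token given the tokens before it. The toy Python sketch below computes that loss, assuming a bigram model that conditions only on the previous token (real LLMs condition on the whole preceding context with a neural network); the function names are illustrative. What the spatial counterpart of this objective should look like is exactly the open question Li describes.

```python
import math
from collections import Counter, defaultdict


def fit_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_loss(tokens, counts):
    """Average negative log-likelihood of each token given its predecessor.

    Evaluated here on the same text the model was fit on, so every
    (prev, next) pair has been seen and no probability is zero.
    """
    nll = 0.0
    for prev, nxt in zip(tokens, tokens[1:]):
        row = counts[prev]
        prob = row[nxt] / sum(row.values())
        nll -= math.log(prob)
    return nll / (len(tokens) - 1)


tokens = "the cat sat on the mat".split()
model = fit_bigram(tokens)
print(round(next_token_loss(tokens, model), 3))  # prints 0.277
```

The only uncertain prediction is what follows "the" (once "cat", once "mat"), so all of the loss, 2·ln 2 spread over five predictions, comes from those two steps.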

Text alone won’t do. World models require exposure to massive datasets of images, videos, and 3D scans as well as algorithms capable of extracting genuine spatial structure from 2D pixels. Current multimodal architectures flatten those signals into 1D or 2D sequences, erasing spatial coherence. Future designs will likely need 3D‑ or even 4D‑aware tokenization and memory.
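The flattening problem is easy to see in a small example. The Python sketch below assumes a 16 × 16 × 16 voxel grid serialized in row‑major (raster) order, one common way of turning 3D data into a 1D sequence, and measures how far apart physically adjacent voxels end up in that sequence. The grid size and function names are illustrative assumptions, not details of any particular model.

```python
N = 16  # side length of the toy voxel grid (illustrative assumption)


def flatten_index(x: int, y: int, z: int, n: int = N) -> int:
    """Position of voxel (x, y, z) after row-major flattening of an n x n x n grid."""
    return (x * n + y) * n + z


def sequence_gap(a, b, n: int = N) -> int:
    """How many positions apart two voxels land in the flattened 1D sequence."""
    return abs(flatten_index(*a, n=n) - flatten_index(*b, n=n))


def spatial_gap(a, b) -> int:
    """Manhattan distance between two voxels in the original 3D grid."""
    return sum(abs(p - q) for p, q in zip(a, b))


center = (5, 5, 5)
neighbors = {"z": (5, 5, 6), "y": (5, 6, 5), "x": (6, 5, 5)}
for axis, voxel in neighbors.items():
    print(f"neighbor along {axis}: {spatial_gap(center, voxel)} step in space, "
          f"{sequence_gap(center, voxel)} positions in the sequence")
```

All three neighbors are one step away in space, yet they sit 1, 16, and 256 positions away in the sequence, so a sequence model has to relearn adjacency that the grid states explicitly. Carrying each token's (x, y, z) coordinates alongside it, as a 3D‑aware tokenization might, would keep that information available to the model.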

The Munich startup Spaitial shares that vision. It’s developing Spatial Foundation Models (SFMs) built to generate and interpret realistic or imagined 3D worlds directly from text or images, capturing geometry, materials, and physical dynamics with internal consistency.

From creative sandbox to scientific engine

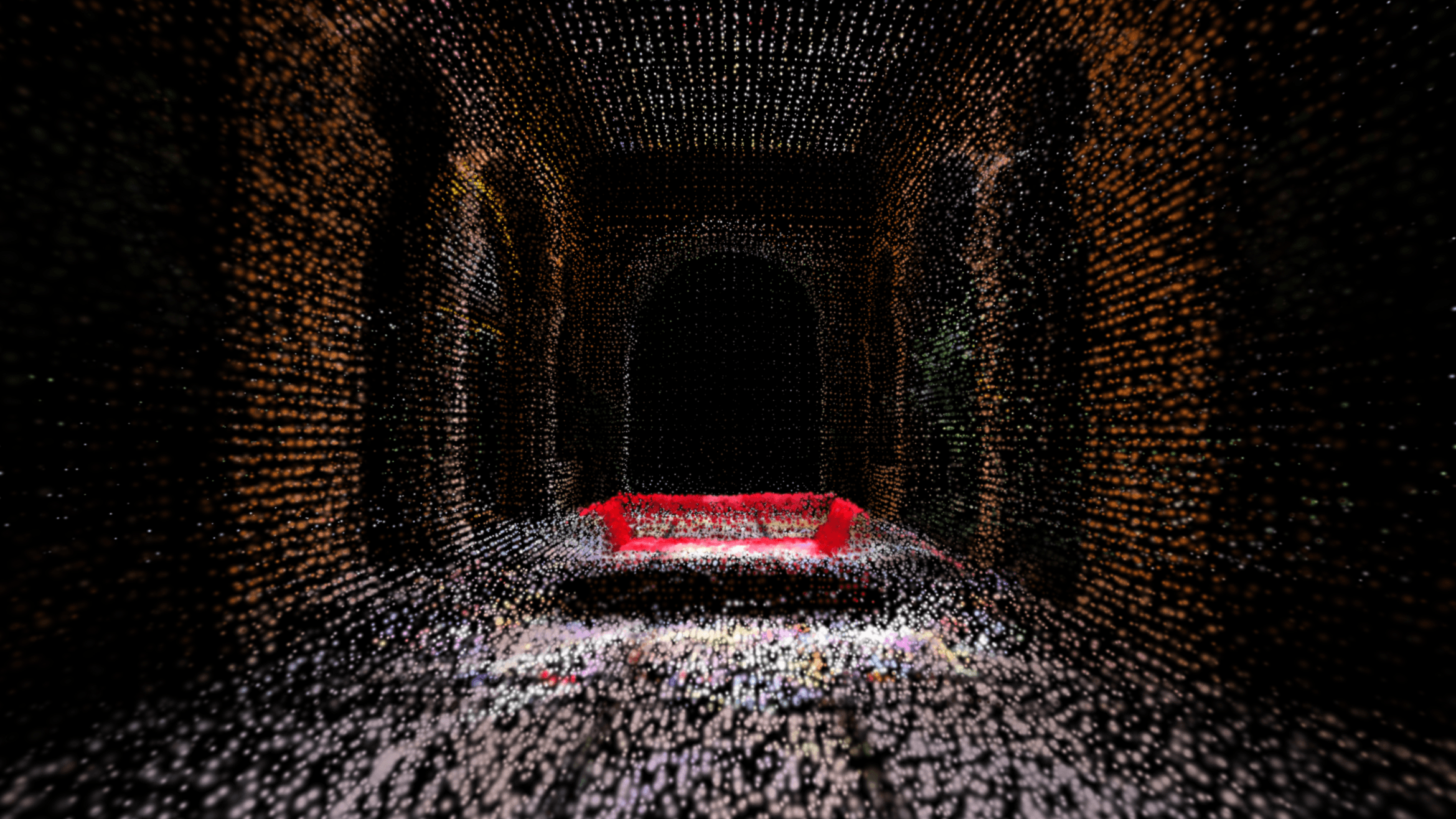

World Labs recently unveiled Marble, an early prototype shared with a small group of users. The system takes multimodal prompts and is meant to render persistent 3D environments, offering a glimpse of what Li calls “spatially grounded AI.” However, the prototype still suffers from many of the same persistence problems as other projects.

In the short term, tools like Marble target designers and artists. Next could be robotics, where spatial understanding determines whether a machine can grasp an object or plan a path. Eventually, Li imagines scientific applications: AI systems that simulate experiments, test hypotheses in parallel, or explore places humans can’t reach — from deep oceans to distant moons.
