Thinking Machines wants large language models to give consistent answers every time

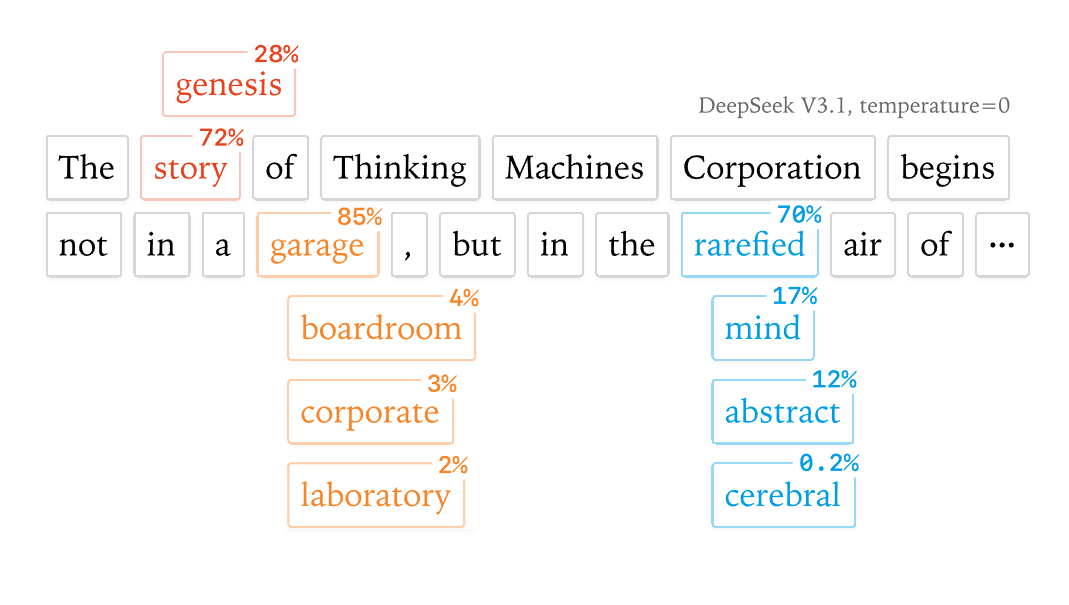

AI startup Thinking Machines wants to make large language models more predictable. The team is investigating why these models sometimes give different answers to the same question even when temperature is set to 0, a setting that should make the model always return its most probable answer.

According to Thinking Machines, GPU precision alone does not explain the problem. The team calls that explanation "not entirely wrong" but says it "doesn’t reveal the full picture." Server load also affects how a model responds: when the system is under heavy load, the same model can produce slightly different results for the same input. To fix this, the team developed a custom inference method that keeps outputs consistent regardless of system load. More predictable behavior like this could make AI-supported research more reliable.
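
To make the precision part of this concrete, here is a minimal Python sketch. It is not Thinking Machines' inference method. It only illustrates a general fact: floating-point addition is not associative, so adding the same numbers in a different grouping can give slightly different results. The link to server load is an assumption made for illustration (for example, requests being grouped differently when the system is busy), and the function name `greedy_pick` and all score values are hypothetical.

```python
def greedy_pick(scores):
    """Return the index of the highest score, as a temperature-0 decoder would.

    Ties go to the lowest index.
    """
    return max(range(len(scores)), key=lambda i: scores[i])


# The same three numbers, added in two different groupings.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

# Hypothetical scores for two candidate answers. Candidate 0 has a fixed
# score of 0.6; candidate 1's score is the computed sum.
run_a = [0.6, left_to_right]
run_b = [0.6, right_to_left]

print(left_to_right == right_to_left)           # False
print(greedy_pick(run_a), greedy_pick(run_b))   # 1 0
```

The two sums differ only in the last decimal places, yet that is enough to change which candidate wins when the scores are nearly tied. Once one choice differs, everything generated after it can differ too.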
