Meta's Toolformer is designed to learn to use tools on its own, outperforming larger language models on certain downstream tasks.

"Natural language is the programming language of the brain," wrote science fiction author Neal Stephenson in his 1992 novel Snow Crash. Recent advances in machine processing of natural language suggest that language can also serve as a programming language for machines, as they get better at understanding it.

With "Toolformer", Meta wants to extend this principle to the use of tools.

That external tools can be connected to a language model to improve its performance is not a new insight. What is new about Toolformer is that the system learns to do this on its own, which lets it use a much larger set of tools in a self-directed way.

Previous approaches either required large amounts of human annotation or limited the use of external tools to specific tasks, the researchers write. This, they say, hinders the use of language models for broader tool applications.

With the Toolformer approach, however, a language model can control a variety of tools and decide for itself which tool to use, when, and how, the researchers write.

Toolformer autonomously searches for useful APIs to better solve tasks

Toolformer is trained to decide on its own which APIs to call and which arguments to pass. It then incorporates the results returned by these calls into its prediction of the following tokens.
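To make the mechanism concrete, here is a minimal Python sketch of the inference side, assuming a bracketed inline syntax such as `[Calculator(400 / 1400)]`, similar to the examples shown in the Toolformer paper. When the model emits such a call, an executor runs the tool and splices the result back into the text, so the model can condition on it when predicting the next tokens. The `calculator` function, the `TOOLS` registry, the regular expression and the two-decimal formatting are hypothetical illustrations, not Meta's implementation.

```python
import ast
import operator
import re
from typing import Callable, Dict

# Hypothetical calculator tool: evaluates +, -, *, / on plain numbers (no eval()).
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expr: str) -> str:
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")

    # Round to two decimals, as in the "0.29" style of the paper's examples.
    return f"{ev(ast.parse(expr, mode='eval').body):.2f}"


# Hypothetical registry mapping tool names (as the model writes them) to functions.
TOOLS: Dict[str, Callable[[str], str]] = {"Calculator": calculator}

# Matches a call that has no result yet, e.g. "[Calculator(400 / 1400)]".
CALL = re.compile(r"\[(\w+)\(([^)\]]*)\)\]")


def execute_calls(text: str) -> str:
    """Replace each [Tool(args)] with [Tool(args) → result] so the result is
    part of the context the model sees when predicting the following tokens."""

    def run(m: re.Match) -> str:
        name, args = m.group(1), m.group(2)
        tool = TOOLS.get(name)
        if tool is None:
            return m.group(0)  # unknown tool: leave the text unchanged
        return f"[{name}({args}) → {tool(args)}]"

    return CALL.sub(run, text)
```

Applied to `"Out of 1400 participants, 400 (or [Calculator(400 / 1400)] 29%) passed the test."`, `execute_calls` returns the same sentence with the call expanded to `[Calculator(400 / 1400) → 0.29]`. The pattern skips calls that already carry a result and, as a simplification, does not handle nested parentheses inside arguments.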

The model learns this process in a self-supervised way, starting from examples: the researchers prompted a language model with a "handful" of human-written examples of API calls and had it annotate a large dataset with potential API calls. From these candidates, useful examples were selected automatically and used to fine-tune Toolformer. The researchers used about 25,000 examples per API.
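How are "useful" examples picked automatically? As we understand the paper, a sampled API call is kept only if inserting the call together with its result lowers the model's loss on the following tokens by at least a threshold, compared with the better of two baselines: making no call at all, or making the call without seeing its result. The sketch below expresses that comparison in Python, assuming per-token negative log-likelihoods have already been computed by some language model. The linear weight decay, the threshold `tau` and all function names are illustrative assumptions.

```python
from typing import List, Sequence


def weights(n: int, decay: float = 0.2) -> List[float]:
    # Assumed weighting: tokens right after the call count most,
    # and the weight drops linearly to zero after 1 / decay tokens.
    raw = [max(0.0, 1.0 - decay * t) for t in range(n)]
    total = sum(raw)
    return [w / total for w in raw]


def weighted_loss(token_nll: Sequence[float]) -> float:
    """Weighted cross-entropy over the tokens following the call position;
    token_nll[t] is -log p(token t | prefix) under some language model."""
    return sum(w * l for w, l in zip(weights(len(token_nll)), token_nll))


def keep_call(
    nll_with_result: Sequence[float],
    nll_no_call: Sequence[float],
    nll_call_without_result: Sequence[float],
    tau: float = 1.0,
) -> bool:
    # All three sequences must score the same continuation tokens.
    if not (len(nll_with_result) == len(nll_no_call) == len(nll_call_without_result)):
        raise ValueError("loss sequences must cover the same continuation")
    loss_plus = weighted_loss(nll_with_result)
    loss_minus = min(weighted_loss(nll_no_call), weighted_loss(nll_call_without_result))
    # Keep the call only if seeing the result reduces the loss by at least tau.
    return loss_minus - loss_plus >= tau
```

For instance, with hypothetical losses `[0.5, 0.4, 0.3]` with the result versus `[2.0, 1.5, 1.0]` and `[1.8, 1.6, 1.2]` for the two baselines, the weighted reduction is about 1.17, so the call is kept at `tau=1.0` but discarded at `tau=1.5`. A filter of this kind explains why so few candidate calls survive: most do not make the following text easier to predict.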

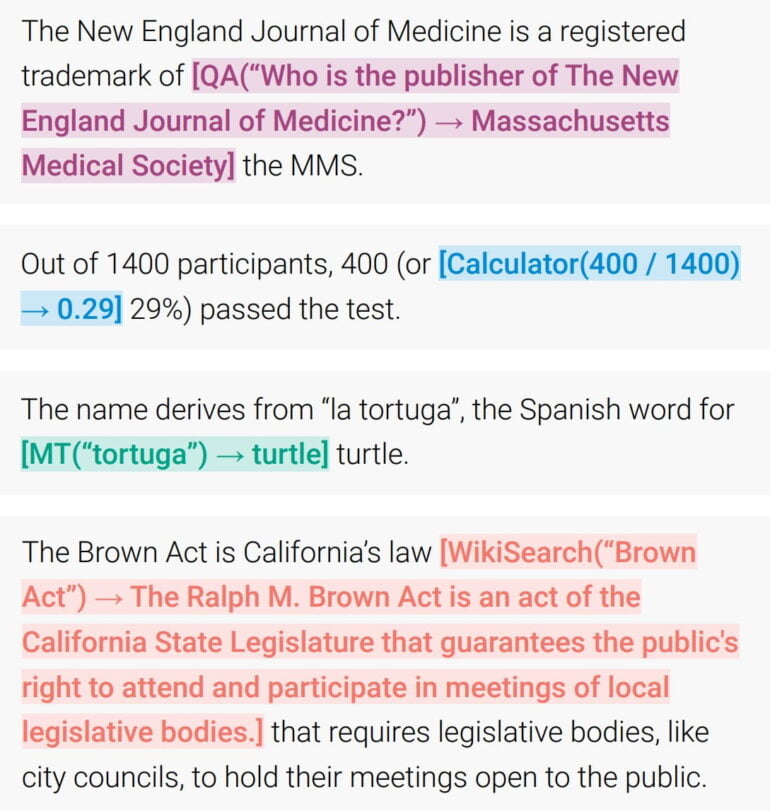

After training, the researchers say, the language model successfully called the APIs of a calculator, a Q&A system, two different search engines including a Wikipedia search, a translation system, and a calendar on its own. Depending on the textual task, the model independently decides whether and when to use a tool.

🎉 New paper 🎉 Introducing the Toolformer, a language model that teaches itself to use various tools in a self-supervised way. This significantly improves zero-shot performance and enables it to outperform much larger models. 🧰

🔗 Link: https://t.co/FvjzhysMze pic.twitter.com/sBSGaQIABI

— Timo Schick (@timo_schick) February 10, 2023

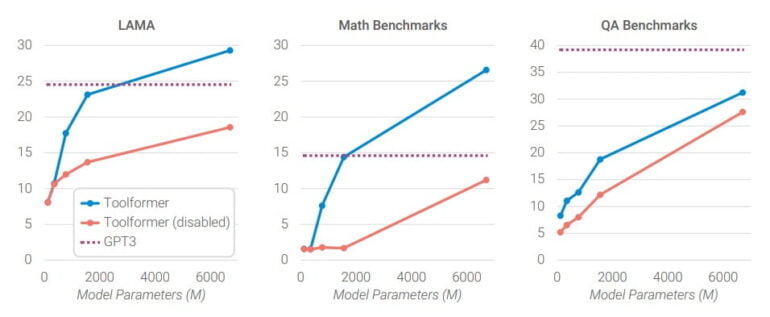

According to the researchers, using the tools significantly improved the zero-shot performance of a GPT-J model with only 6.7 billion parameters, allowing it to outperform the much larger GPT-3 model with 175 billion parameters on selected tasks.

The benefit of tools became evident in tests only from about 775 million parameters onward; smaller models performed similarly with and without tools. One exception was the Wikipedia search API, used mainly for question answering, probably because this API is comparatively easy to use, the researchers suggest. Even so, Toolformer did not outperform GPT-3 on the QA benchmarks.

While models become better at solving tasks without API calls as they grow in size, their ability to make good use of the provided API improves at the same time. As a consequence, there remains a large gap between predictions with and without API calls even for our biggest model.

From the Paper

Toolformer principle could help minimize fundamental problems of large language models

According to the researchers, a language model's ability to use external tools on its own can help address fundamental weaknesses of large language models, such as their difficulty in reliably solving math problems or checking facts.

However, the system still has limitations, they say. Tools cannot be chained, for example by using the output of one tool as the input to the next, because the API calls for each tool are generated independently. This limits the possible application scenarios.

In addition, the model cannot use the tools interactively. For example, it cannot browse through the many results returned by a search engine and refine its query based on them.

Moreover, the model's decision whether to call a tool is sensitive to the exact wording of its input, and the method is sample-inefficient: processing more than a million documents yielded only a few thousand useful examples of calls to the calculator API. Finally, Toolformer does not take the computational cost of an API call into account.