Waymo taps Google DeepMind's Genie 3 to simulate driving scenarios its cars have never seen

Waymo has unveiled a generative simulation model for autonomous driving built on Google DeepMind's Genie 3. The system creates hyper-realistic scenarios—from elephant encounters to tornadoes—that would be nearly impossible to capture in the real world at scale.

Robotaxi operator Waymo has introduced the Waymo World Model, a generative world model for simulating autonomous driving situations. It is based on Genie 3, which Google DeepMind calls its most advanced general world model, and has been adapted specifically for road traffic.

"Genie 3's strong world knowledge, acquired through its pre-training on an extremely large and diverse set of videos, allows us to explore situations never directly observed by our fleet," Waymo writes.

Waymo sees simulation as one of the three core pillars of its safety approach. The Waymo Driver has logged nearly 200 million fully autonomous miles so far, but it racks up billions of miles in virtual worlds before facing scenarios on public roads, the company says.

Waymo argues that simulating rare scenarios better prepares the Waymo Driver for complex situations. That said, the company didn't share any benchmark results or independent evaluations in its announcement.

Broad world knowledge beats limited driving data

According to Waymo, most simulation models in the industry train only on a company's own driving data, which boxes the system into that company's direct experience. The Waymo World Model draws on the broad world knowledge that Genie 3 picked up through pre-training on a massive and diverse video dataset.

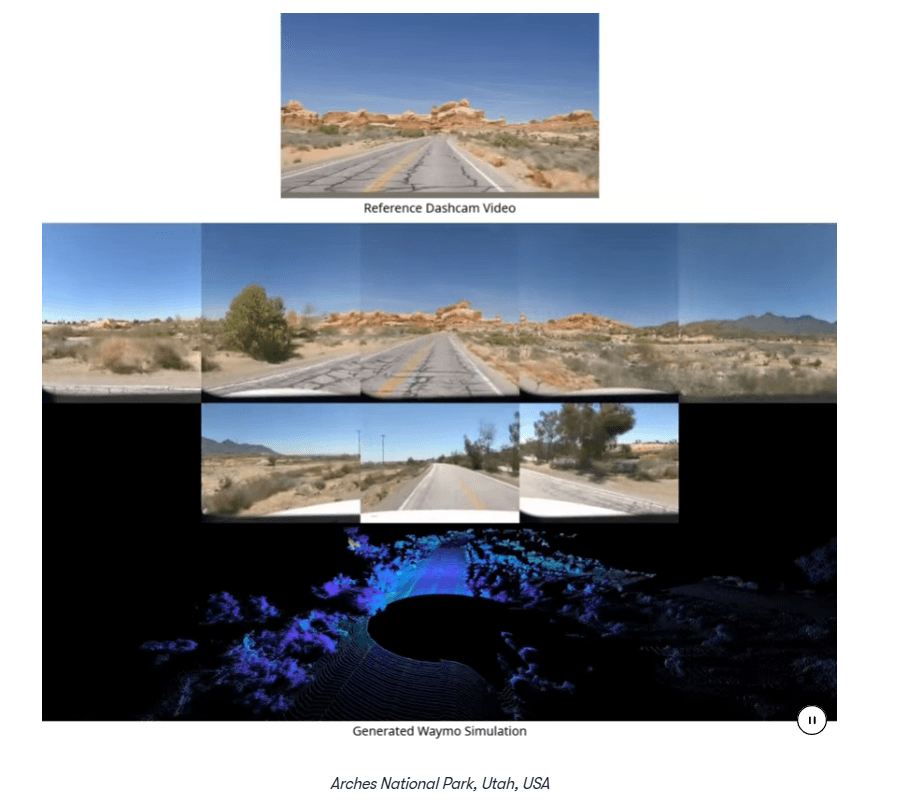

Through specialized post-training, this 2D video knowledge gets translated into 3D lidar outputs tailored to Waymo's proprietary hardware. The model generates both camera and lidar data—cameras provide visual details while lidar delivers precise depth information as a complementary signal.

This lets the system simulate situations the Waymo fleet has never actually seen, like an encounter with an elephant, a tornado, a flooded residential area, or snow on tropical roads lined with palm trees.

How the system handles different scenarios

The Waymo World Model offers three ways to control simulations. Driving action control lets engineers test counterfactual scenarios, for example whether the Waymo Driver could have handled a situation differently. Unlike reconstructive methods such as 3D Gaussian splatting, which break down visually when the simulated route deviates from the recorded one, the generative model keeps scenes realistic and consistent, Waymo claims.

Scene layout control adjusts road layouts, traffic light conditions, and how other road users behave. Text prompts offer the most flexibility: they can generate different times of day, weather conditions, or entirely synthetic scenes. The model can also convert ordinary dashcam or cell phone videos into multimodal simulations that show how the Waymo Driver would perceive a scene with its sensors.

For scenarios that take longer to play out, like negotiating passage in a narrow lane, Waymo built a leaner version of the model that it says achieves a "dramatic reduction in compute" and enables large-scale simulations.
