Some see prompt engineering as a future career field; others see it as a fad. Microsoft's AI researchers describe their own approach.

In a recent article, Microsoft researchers describe their prompt engineering process for Dynamics 365 Copilot and Copilot in Power Platform, two products built on OpenAI's chat models.

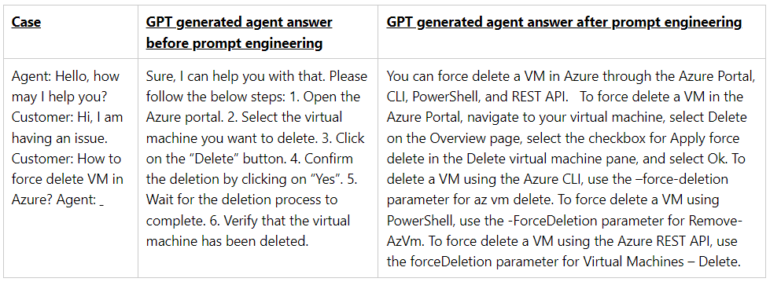

Prompt engineering is trial and error

Among other things, the Microsoft research team uses general system prompts for its chatbots. These are similar to what users type into ChatGPT and similar tools to give a chatbot a specific role, body of knowledge, and set of behaviors.

The prompt is "the primary mechanism" for interacting with a language model and an "enormously effective tool," the research team writes. It must be "accurate and precise" or the model will be left guessing.

Microsoft recommends defining ground rules in the prompt that fit the chatbot's purpose.

For Microsoft, these ground rules include avoiding subjective opinions and repetition, not getting into arguments or excessive deliberation with the user about how to proceed, and ending a conversation that turns controversial. Ground rules can also keep the chatbot from being vague, going off-topic, or inserting images into its responses.

```text
System message:
You are a customer service agent who helps users answer questions based on documents from

## On Safety:
- e.g. be polite
- e.g. output in JSON format
- e.g. do not respond to if request contains harmful content...

## Important
- e.g. do not greet the customer
-

AI Assistant message:

## Conversation
User message:

AI Assistant message:
```

Microsoft sample prompt
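To make the template's structure concrete, here is a minimal Python sketch that assembles the same sections into a chat message list. It assumes the role/content message format common to OpenAI-style chat APIs; the function names `build_system_message` and `build_messages`, the exact rule wording, and the sample document are hypothetical and not taken from Microsoft's implementation. No model is called.

```python
from typing import Dict, List

# Hypothetical ground rules, modeled on the "e.g." entries in the sample prompt.
SAFETY_RULES = [
    "Be polite.",
    "Output in JSON format.",
    "Do not respond if the request contains harmful content.",
]
IMPORTANT_RULES = ["Do not greet the customer."]


def build_system_message(role: str, documents: str) -> str:
    """Assemble a system prompt with the same sections as the sample template."""
    lines = [role, documents, "", "## On Safety:"]
    lines += [f"- {rule}" for rule in SAFETY_RULES]
    lines += ["", "## Important"]
    lines += [f"- {rule}" for rule in IMPORTANT_RULES]
    return "\n".join(lines)


def build_messages(system_message: str,
                   history: List[Dict[str, str]],
                   user_input: str) -> List[Dict[str, str]]:
    """System prompt first, then earlier conversation turns, then the new user message."""
    return ([{"role": "system", "content": system_message}]
            + history
            + [{"role": "user", "content": user_input}])


# Usage with a made-up document and question.
system = build_system_message(
    "You are a customer service agent who helps users answer questions "
    "based on the following documents:",
    "Returns are accepted within 30 days of purchase.",
)
messages = build_messages(system, [], "Can I return a jacket I bought last week?")
```

Keeping the ground rules in lists rather than in one hard-coded string makes it easy to add, drop, or reword a single rule while experimenting.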

The research team acknowledges that writing such prompts requires a certain amount of "artistry," suggesting it is largely a creative act. Still, the skills involved are not "overwhelmingly difficult to acquire," they say.

For writing prompts, they suggest setting up a framework in which ideas can be tried out and then refined. "Prompts generation can be learned by doing," the team writes.
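One way such an experimentation framework might look is a loop that scores each prompt variant against a simple check over many runs; repeating runs matters because a model's output varies from one call to the next. This is a minimal sketch under stated assumptions: `fake_model`, `pass_rate`, and the JSON check are hypothetical, and `fake_model` only simulates noisy output in place of a real model call.

```python
import random
from typing import Callable, Dict


def fake_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM call: it returns JSON-looking output
    more often when the prompt asks for JSON, to mimic sampling noise."""
    p_json = 0.9 if "JSON" in prompt else 0.3
    return '{"answer": "yes"}' if rng.random() < p_json else "Yes, you can."


def pass_rate(prompt: str,
              model: Callable[[str, random.Random], str],
              check: Callable[[str], bool],
              trials: int,
              seed: int = 0) -> float:
    """Run one prompt variant several times and return the share of outputs that pass."""
    rng = random.Random(seed)
    passed = sum(check(model(prompt, rng)) for _ in range(trials))
    return passed / trials


def looks_like_json(text: str) -> bool:
    """Deliberately crude check, used only for this illustration."""
    return text.startswith("{")


variants: Dict[str, str] = {
    "plain": "Answer the customer's question.",
    "json": "Answer the customer's question. Output in JSON format.",
}
results = {name: pass_rate(prompt, fake_model, looks_like_json, trials=200)
           for name, prompt in variants.items()}
```

Fixing the seed makes a run reproducible; comparing variants across several seeds gives a rougher but more honest picture of how much a prompt change actually helps.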

The future role of prompt engineering is still unclear. On the one hand, model output depends heavily on the prompt. On the other hand, the randomness of text generators makes it hard to measure the effectiveness of individual prompting methods, or even of individual elements within a prompt, in a way that meets scientific standards.

For example, it is at least questionable whether page-long "mega-prompts" actually produce better results than concise, three-sentence instructions. Such claims are hard to verify and mostly serve certain business models.

Eventually, prompt engineering could evolve from a kind of pseudo-programming language into a creative process of workflow design: which work processes can LLMs capture, and how reliably?

The language model could then generate the exact prompts itself from queries, fine-tuning tests, and examples. Human workers would mainly need to understand what the systems can do and to define and establish new ways of working.

Using contextual data to get better AI answers

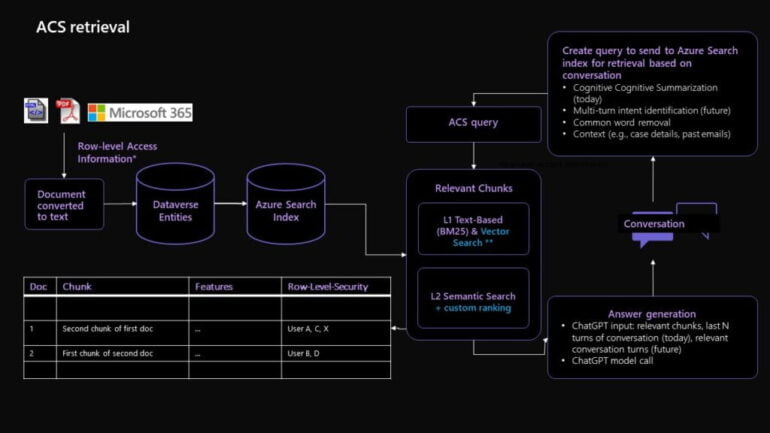

Microsoft's approach to prompt engineering goes beyond standard prompts to include advanced techniques such as retrieval-augmented generation (RAG) and knowledge base chunking.

Microsoft uses RAG to work with large and diverse amounts of data: rather than handing everything to the model, it assembles smaller, relevant pieces of data, or "chunks," for a specific customer problem.
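The article does not say how Microsoft splits its data into chunks. A common, simple strategy is fixed-size chunking with overlap, sketched below in Python as an assumption; `chunk_text` and its default sizes are hypothetical, and production systems often split on sentences, headings, or tokens instead of whitespace-separated words.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into word-based chunks of at most chunk_size words.

    Consecutive chunks share `overlap` words, which helps keep context that
    straddles a chunk boundary together in at least one chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


# Usage: a synthetic 120-word document yields three overlapping chunks.
doc = " ".join(f"w{i}" for i in range(120))
chunks = chunk_text(doc, chunk_size=50, overlap=10)
```

Larger chunks carry more context per retrieval but dilute relevance scores; the overlap trades a little redundancy for fewer facts cut in half.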

These chunks are then compared with historical data and agent feedback to generate the best possible response to the customer's query. Knowledge base chunking, in turn, makes large amounts of data manageable by creating representative embeddings, numerical vector representations, of the documents.

The user's input is then compared against these embeddings, and the content of the highest-scoring matches is inserted into the GPT prompt template used to generate the response. Together, these techniques help produce informed, relevant, and personalized answers to customer inquiries.
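The retrieval step can be sketched as: embed each chunk, embed the user's question, rank chunks by similarity, and place the best matches into a prompt template. This minimal Python sketch rests on several assumptions: a toy bag-of-words vector stands in for a real embedding model, cosine similarity is used as the score (the article does not name one), and `embed`, `build_rag_prompt`, `PROMPT_TEMPLATE`, and the knowledge-base sentences are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical template; the article does not show Microsoft's actual template.
PROMPT_TEMPLATE = (
    "Answer the customer's question using only the context below.\n"
    "## Context\n{context}\n\n## Question\n{question}"
)


def build_rag_prompt(question: str, chunks: List[str], top_k: int = 2) -> str:
    """Score every chunk against the question and insert the top_k chunks into the template."""
    chunk_vectors = [embed(c) for c in chunks]  # in practice computed once and stored
    query_vector = embed(question)
    ranked = sorted(range(len(chunks)),
                    key=lambda i: cosine(query_vector, chunk_vectors[i]),
                    reverse=True)
    context = "\n---\n".join(chunks[i] for i in ranked[:top_k])
    return PROMPT_TEMPLATE.format(context=context, question=question)


knowledge_base = [
    "Items can be returned within 30 days of purchase.",
    "Standard shipping takes three to five business days.",
    "Gift cards cannot be exchanged for cash.",
]
prompt = build_rag_prompt("Can I return an item after 30 days?", knowledge_base, top_k=1)
```

Note that the model receives the text of the best-matching chunks, not the embeddings themselves; the embeddings only decide which chunks make it into the prompt.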

A detailed technical explanation is available on the Microsoft Research Blog.