Can OpenAI's GPT-4 Vision improve autonomous driving? Chinese researchers have put the vision language model on the road, so to speak.

If companies like Nvidia have their way, vision-language models like OpenAI's GPT-4 Vision (GPT-4V) could become a key building block for computer vision in industrial applications, robotics, and autonomous driving in the future. In a new study, a team from Shanghai Artificial Intelligence Laboratory, GigaAI, East China Normal University, Chinese University of Hong Kong and WeRide.ai tested GPT-4V in autonomous driving scenarios.

Unlike the pure language model GPT-4, GPT-4V has strong image recognition capabilities and can, for example, describe image contents and provide context for them. The team tested GPT-4V in a range of tasks, from simple scene recognition to complex causal analysis and decision-making, under a variety of conditions.

GPT-4 Vision outperforms current systems in some applications

According to the team, the results show that GPT-4V has partially superior performance compared to existing autonomous systems in scene understanding and corner case analysis. The system has also demonstrated its ability to handle off-distribution scenarios, recognize intentions, and make informed decisions in real-world driving situations.

At the same time, the model shows weaknesses in areas that are particularly relevant to autonomous driving, especially spatial perception. For example, GPT-4V shows poor results in distinguishing directions and does not recognize all traffic lights.

Would GPT-4 Vision make the right decision on the road?

Specifically, the model's capabilities were tested in various aspects of autonomous driving. For example, in the area of scene understanding, GPT-4V was able to recognize weather and lighting conditions, identify traffic lights and road signs in different countries, and estimate the positions and actions of other road users in photos taken by different types of cameras.

GPT-4V was also able to handle borderline cases, such as an image of an aircraft making an emergency landing on a road or a complex construction site, and to understand and analyze panoramic and sequential images. It was also able to link road images with images from a navigation system.

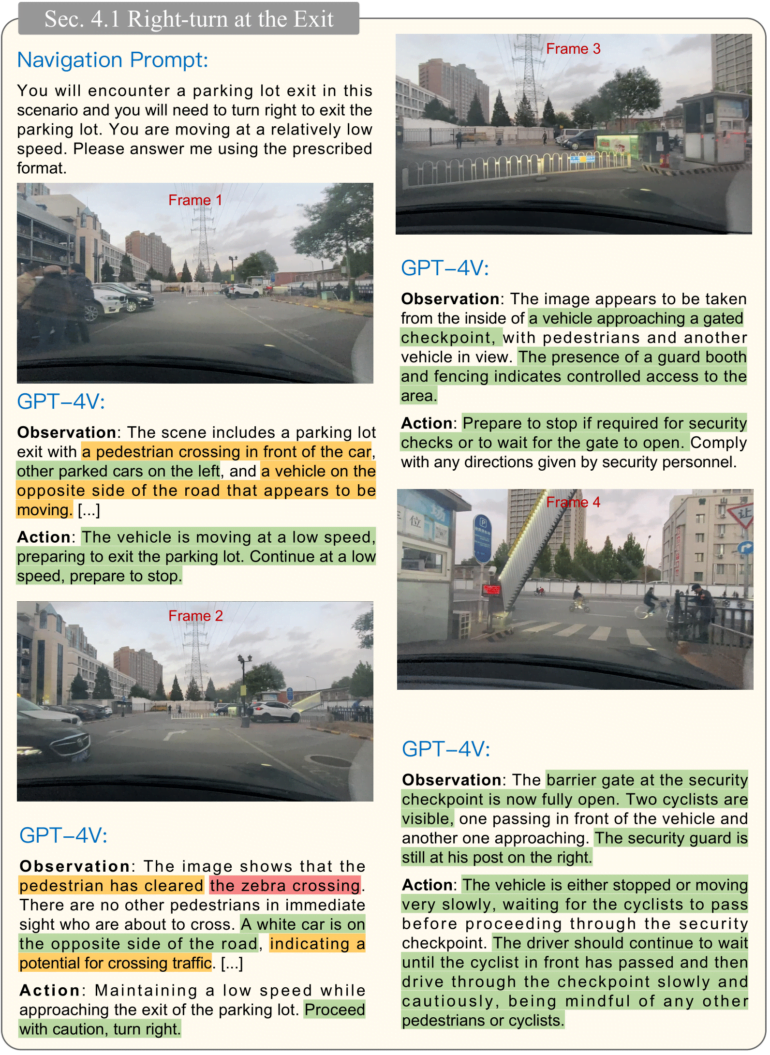

In a final test, the team gave the GPT-4V the task of acting as a driver and making decisions based on the environment in real driving situations. The model was fed frame-by-frame with driving videos, key vehicle speeds, and other relevant information, and had to perform the required actions and justify its decisions. The system completed this task, albeit with a few errors.

GPT-4 Vision is promising but shows dangerous weaknesses

The team sees significant potential for systems such as GPT-4V to outperform existing autonomous driving systems in terms of scene understanding, intention detection, and decision-making. However, limitations in spatial perception and errors in traffic light recognition mean that GPT-4V alone is not currently suitable in such a scenario.

Further research is needed to increase the robustness and applicability of GPT-4V and other vision models in different driving situations and conditions.

Further information and all data are available on GitHub.