AI startup Reka unveils Yasa-1, a multimodal AI assistant that could rival OpenAI's ChatGPT.

AI startup Reka, founded by researchers from DeepMind, Google, Baidu, and Meta, has announced Yasa-1, a multimodal AI assistant that can understand and interact with text, images, video, and audio.

The assistant is available in private beta and competes with, among others, OpenAI's ChatGPT, which has received its own multimodal upgrades with GPT-4V and DALL-E 3. Reka says its team members have previously worked on Google Bard, PaLM, and DeepMind's AlphaCode, among other projects.

Reka uses proprietary AI models

Yasa-1 was built from the ground up, including pre-training the base models, customizing them, and optimizing the training and serving infrastructure. The assistant can be customized to understand private data sets of any kind, which should enable companies to build a wide range of applications.

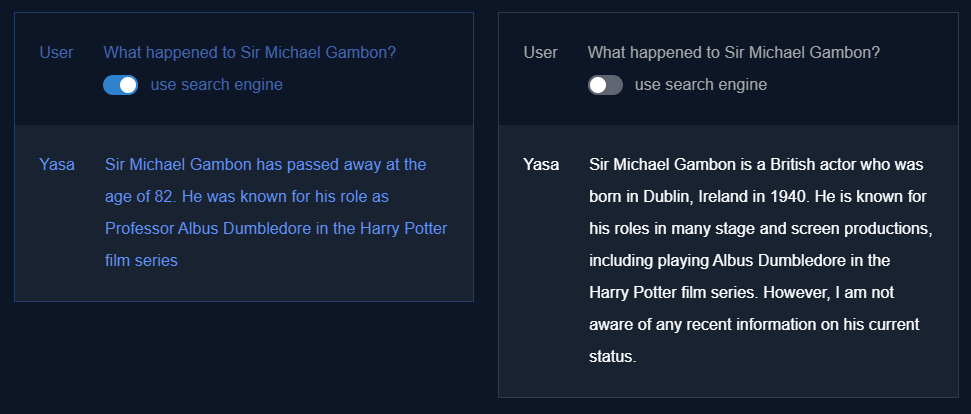

Yasa-1 supports 20 languages, can ground its answers in context from the web via commercial search engines, can process long-context documents of up to 100,000 tokens (the standard context window is 24,000 tokens), and can execute code. Anthropic's Claude 2 can also process 100,000 tokens, but Reka claims Yasa-1 is up to eight times faster with comparable accuracy. OpenAI's most powerful model, GPT-4, can handle 32,000 tokens.

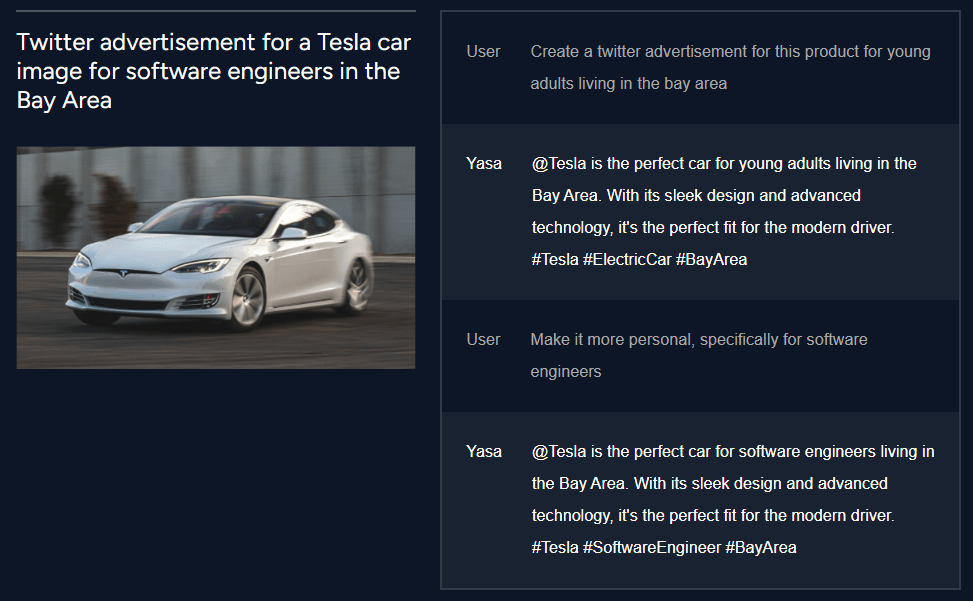

Yasa-1's multimodal capabilities allow users to combine text-based prompts with multimedia files to get more specific answers. For example, the assistant can use an image to create a social media post promoting a product or identify a specific sound and its source.

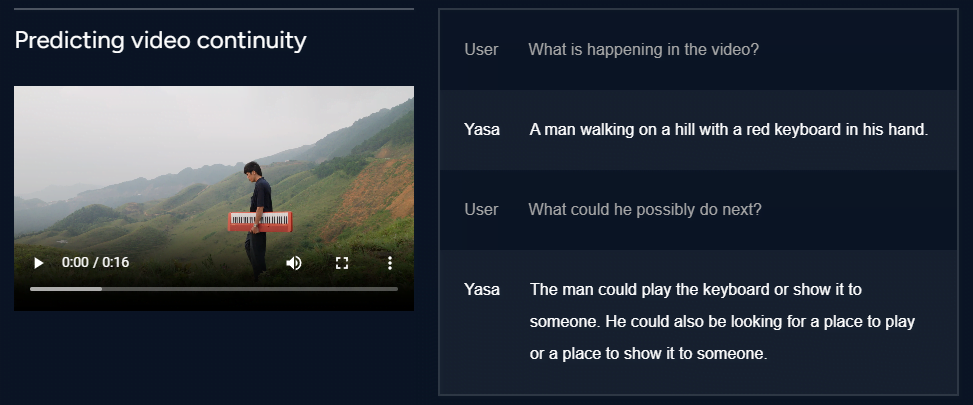

Yasa-1 can also understand what is happening in a video, including which topics are being discussed, and predict likely next actions.

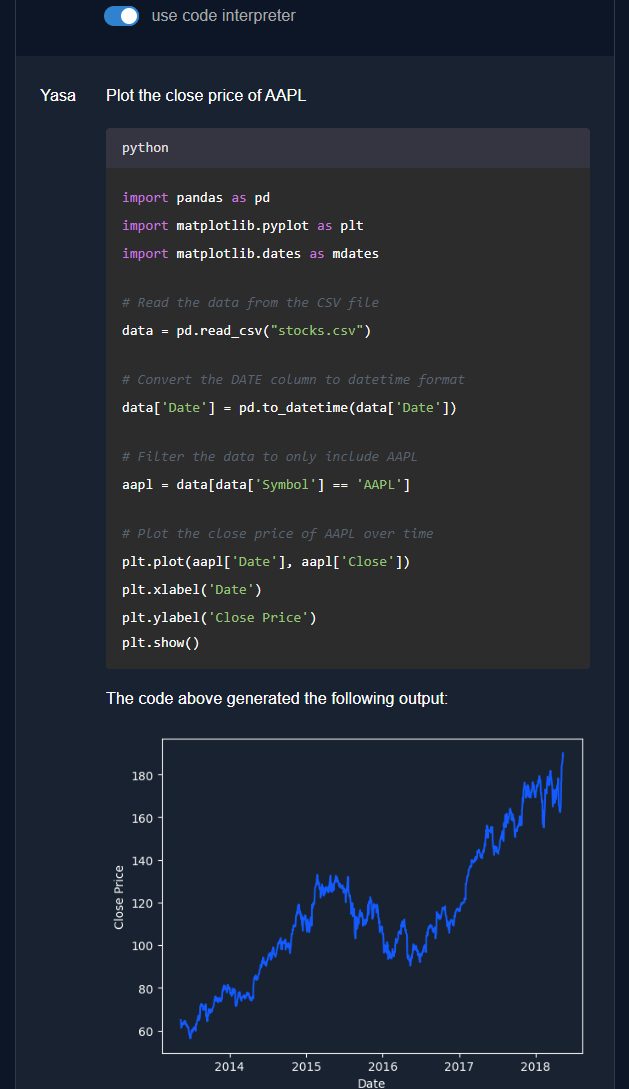

In addition to its multimodal capabilities, Yasa-1 supports programming tasks and can execute code to perform arithmetic operations, analyze tables, or create visualizations for specific data points.

As with all large language models, Yasa-1 can generate nonsense and should not be relied on exclusively for critical advice, Reka writes. In addition, while the assistant can provide excellent descriptions of images, videos, or audio content, it has limited ability to recognize complex details in these media without further customization.

Reka plans to expand access to Yasa-1 to more companies in the coming weeks. The goal is to improve the assistant's capabilities while addressing its limitations.

Reka made its first public appearance in late June 2023 and has raised $58 million in funding. The startup says it focuses on universal intelligence, universal multimodal and multilingual agents, self-improving AI, and model efficiency.