Yet another study finds that overloading LLMs with information leads to worse results

Large language models are supposed to handle millions of tokens - the fragments of words and characters that make up their inputs - at once. But the longer the context, the worse their performance gets.

That's the takeaway from a new study by Chroma Research. Chroma, which makes a vector database for AI applications, actually benefits when models need help pulling in information from outside sources. Still, the scale and methodology of this study make it noteworthy: Researchers tested 18 leading AI models, including GPT, Claude, Gemini, and Qwen, across four types of tasks. These included semantic search, repetition challenges, and question-answering in lengthy documents.

Beyond word matching

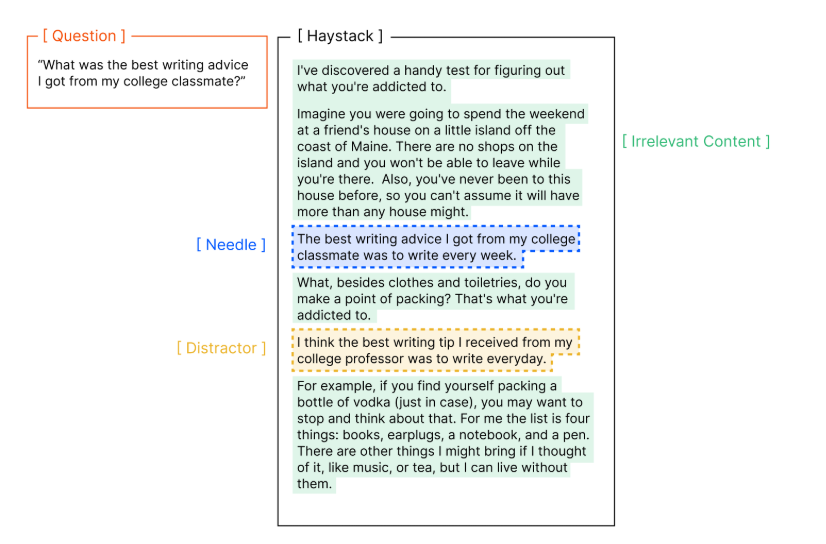

The research builds on the familiar "needle in a haystack" benchmark, where a model must pick out a specific sentence hidden inside a long block of irrelevant text. The Chroma team criticized this test for only measuring literal string matching, so they modified the test to require true semantic understanding.

Specifically, they moved beyond simple keyword recognition in two key ways. First, instead of asking a question that used the same words as the hidden sentence, they posed questions that were only semantically related. For example, in a setup inspired by the NoLiMa benchmark, a model might be asked "Which character has been to Helsinki?" when the text only states that "Yuki lives next to the Kiasma museum." To answer, the model must make an inference based on world knowledge, not just keyword matching.

The models found this much more difficult; performance dropped sharply on these semantic questions, and the problem grew worse as the context got longer.

Second, the study looked at distractors: statements similar in content but incorrect. Adding even a single distractor noticeably reduced success rates, with different impacts depending on the distractor. With four distractors, the effect was even stronger. Claude models often refused to answer, while GPT models tended to give wrong but plausible-sounding responses.

Structure matters (but not how you'd expect)

Structure also played a surprising role. Models actually did better when the sentences in a text were randomly mixed, compared to texts organized in a logical order. The reasons aren't clear, but the study found that context structure, not just content, is a major factor for model performance.

The researchers also tested more practical scenarios using LongMemEval, a benchmark with chat histories over 100,000 tokens long. In this separate test, a similar performance drop was observed: performance fell when models had to work with the full conversation history, compared to when they were given only the relevant sections.

The study's recommendation: use targeted "context engineering" - picking and arranging the most relevant information in a prompt - to help large language models stay reliable in real-world scenarios. Full results are available on Chroma Research, and a toolkit for replicating the results is available for download on GitHub.

Other labs find similar problems

Chroma's results line up with findings from other research groups. In May 2025, Nikolay Savinov at Google Deepmind explained that when a model receives a large number of tokens, it has to divide its attention across the entire input. As a result, it's always beneficial to trim irrelevant content and keep the context focused, since concentrating attention on what's important helps the model perform better.

A study from LMU Munich and Adobe Research found much the same thing. On the NOLIMA benchmark, which avoids literal keyword matches, even reasoning-focused models suffered major performance drops as context length increased.

Microsoft and Salesforce reported similar instability in longer conversations. In multi-turn dialogues where users spell out their requirements step by step, accuracy rates fell from 90 percent all the way down to 51 percent.

One of the most striking examples is Meta's Llama 4 Maverick. While Maverick can technically handle up to ten million tokens, it struggles to make meaningful use of that capacity. In a benchmark designed to reflect real-world scenarios, Maverick achieved just 28.1 percent accuracy with 128,000 tokens - far below its technical maximum and well under the average for current models. In these tests, OpenAI's o3 and Gemini 2.5 currently deliver the strongest results.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.