A survey of 2,778 researchers shows how fragmented the AI science community is

The "2023 Expert Survey on Progress in AI" shows that the scientific community has no consensus on the risks and opportunities of AI, but that researchers expect progress to arrive faster than previously forecast.

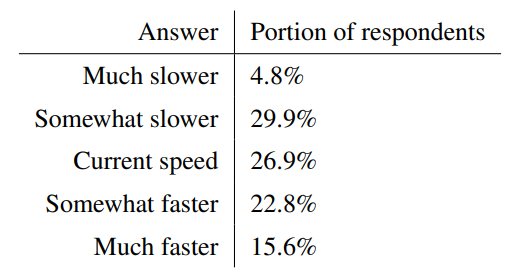

On the much-discussed question of whether AI development needs a pause, the survey reveals a divided picture: about 35% of respondents favor slower development than the current pace, and a similar share favors faster development.

However, at 15.6%, the "much faster" group is three times larger than the "much slower" group. 27% say the current pace is appropriate.

AI development will continue to accelerate

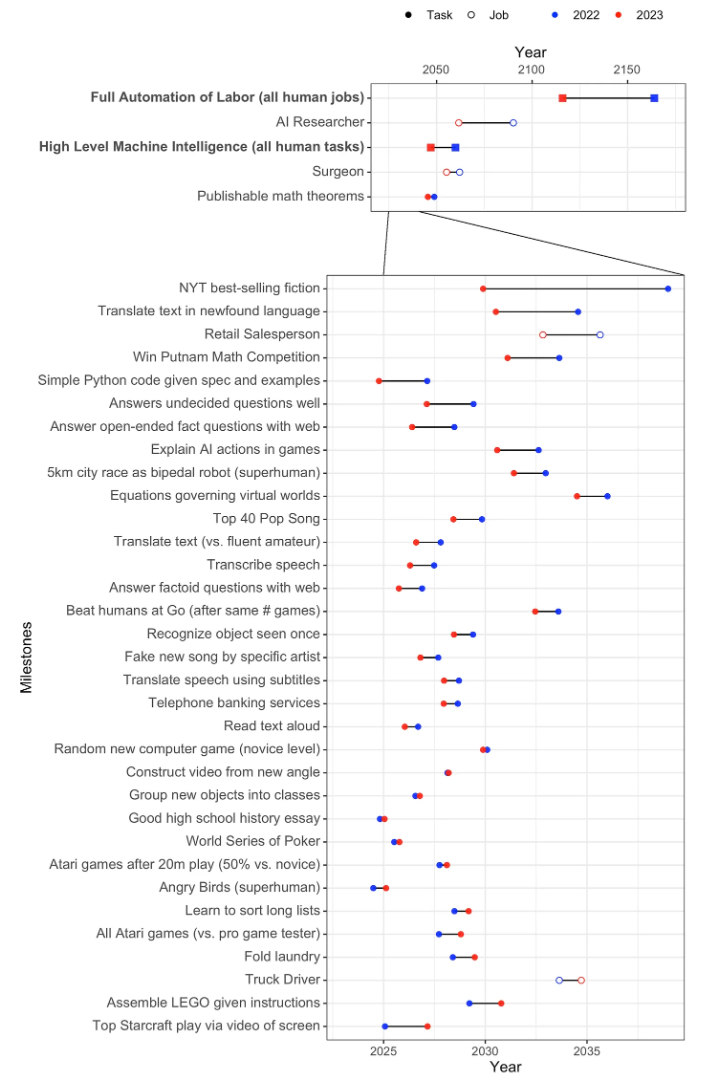

Respondents expect the pace of AI development to continue to accelerate. The aggregate forecast gives a probability of at least 50 percent that AI systems will reach several milestones by 2028, many significantly earlier than previously thought.

These milestones include autonomously creating a payment processing website from scratch, creating a song indistinguishable from a new song by a well-known musician, and autonomously downloading and fine-tuning a large language model.

A work of fiction that makes the New York Times bestseller list is expected to be written by AI around 2030. In the previous survey, this estimate was around 2038.

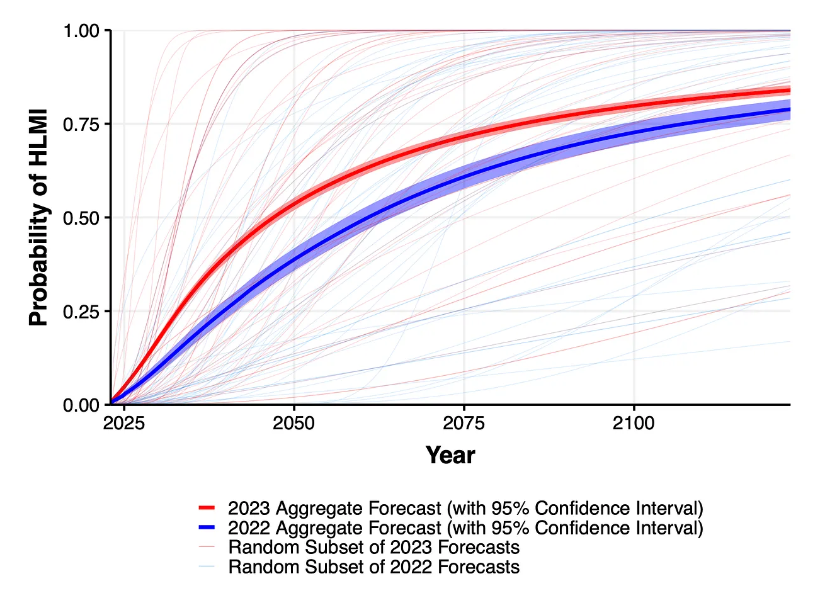

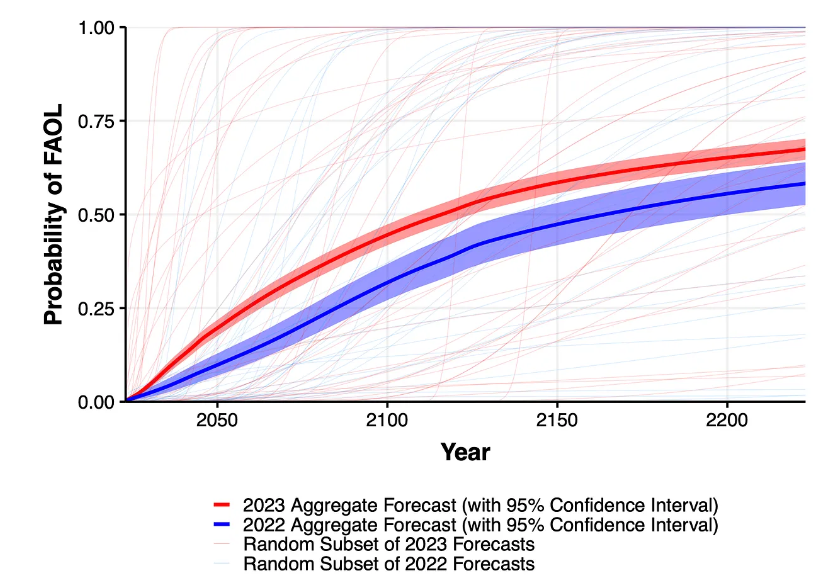

Answers to the questions about "high-level machine intelligence" (HLMI) and "full automation of labor" (FAOL) also varied widely in some cases, but the overall forecast for both questions points to a much earlier occurrence than previously expected.

If scientific progress continues unabated, the probability that machines will outperform humans in all possible tasks without outside help is estimated at 10 percent by 2027 and 50 percent by 2047. The 50 percent date is 13 years earlier than in a similar survey conducted just one year before.

The likelihood of all human occupations being fully automated was estimated at 10 percent by 2037 and 50 percent by 2116 (compared to 2164 in the 2022 survey).

Existential fears also exist in AI science, but they are becoming more moderate

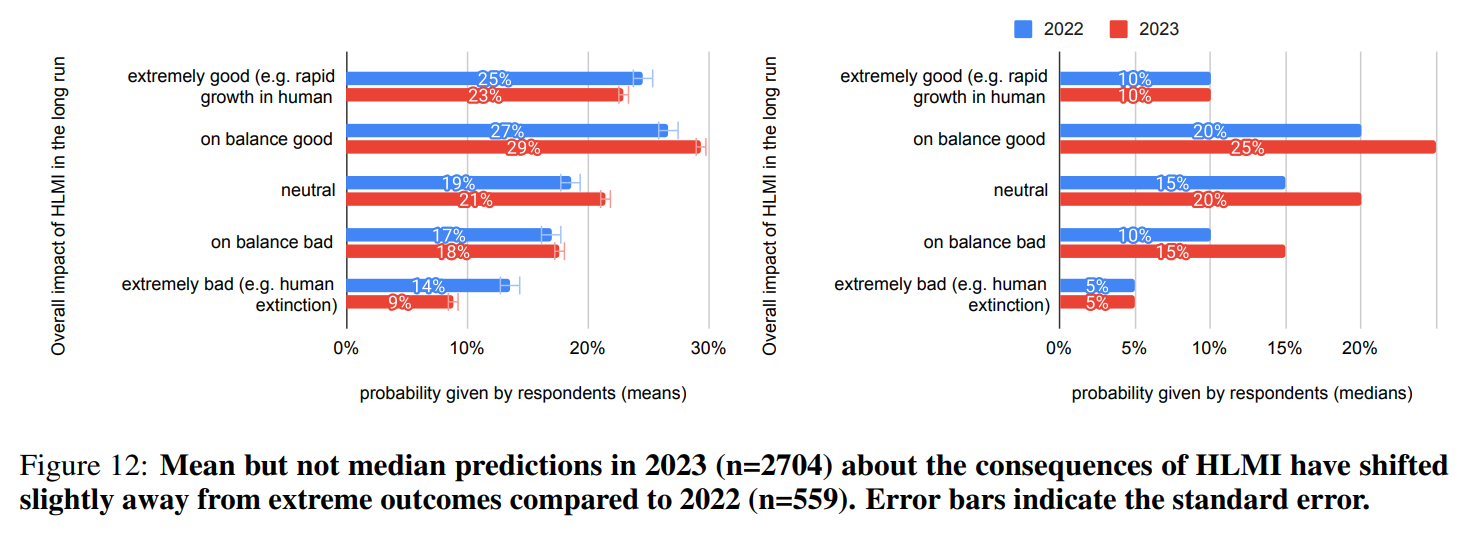

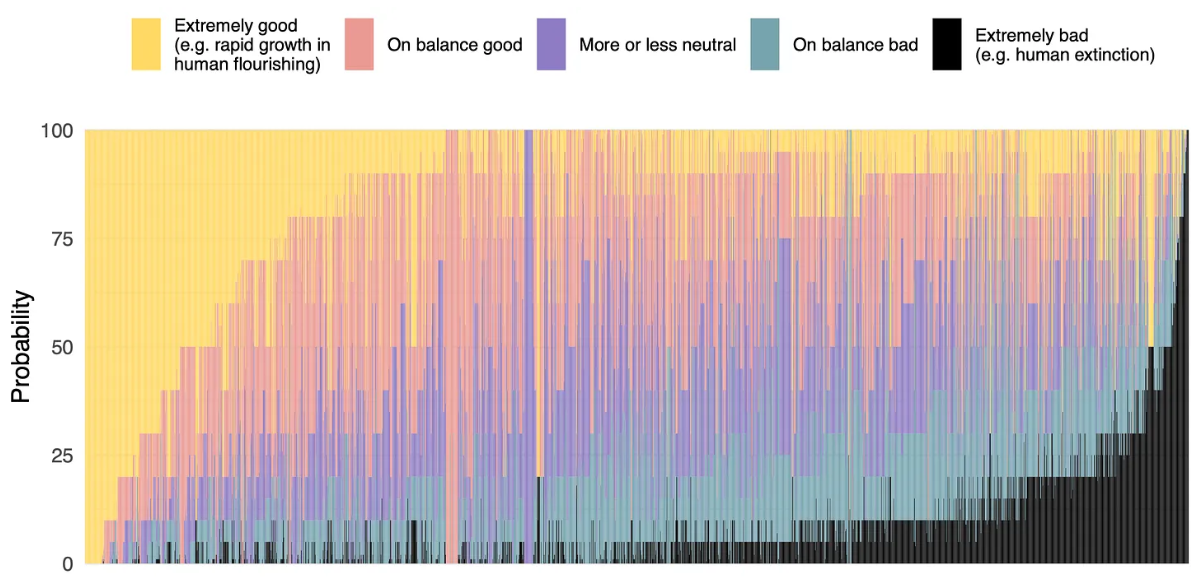

High hopes and gloomy fears often lie close together among the participants. More than half of the respondents (52%) expect positive or even very positive (23%) effects of AI on humanity.

In contrast, 27 percent of respondents expect mostly negative effects from human-like AI. Nine percent expect extremely negative effects, including the extinction of humanity. Compared to last year's survey, the extreme positions have lost some ground.

While 68.3 percent of respondents believe that good consequences of a possible superhuman AI are more likely than bad ones, 48 percent of these net optimists give a probability of at least 5 percent for extremely bad consequences, such as the extinction of humanity. Conversely, 59 percent of net pessimists give a probability of 5 percent or higher for extremely good outcomes.

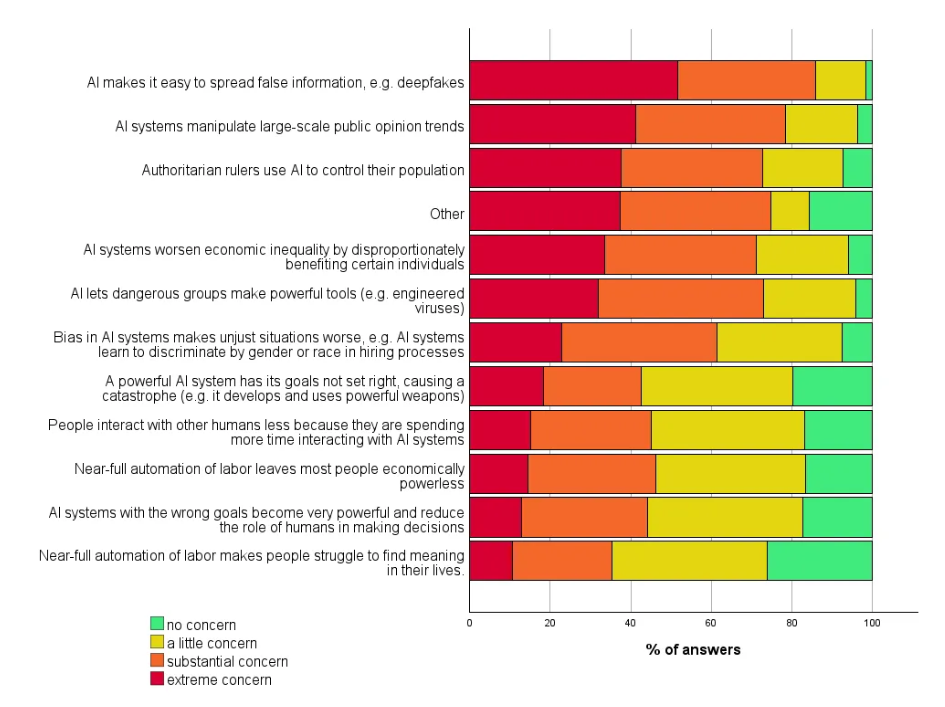

In terms of specific risks, disinformation and deepfakes are considered particularly threatening. Closely related concerns are mass manipulation and AI-assisted population control by authoritarian rulers. By comparison, disruptions to the labor market are deemed less risky.

There was broad consensus (70 percent) that research into mitigating the potential risks of AI systems should be a higher priority.

The survey is based on responses from 2,778 researchers who had published at six leading AI venues. It was conducted in October 2023 and is the largest of its kind, according to the initiators. Compared to the previous survey, more than three times as many researchers were surveyed across a broader range of AI research areas.
