The race to create more powerful artificial intelligence is "a race to the bottom", according to Max Tegmark, a professor of physics at the Massachusetts Institute of Technology and co-founder of the Future of Life Institute.

Tegmark, who in March garnered more than 30,000 signatures for an open letter calling for a six-month pause in the development of powerful AI systems, is now expressing concern about tech companies' reluctance to pause.

Citing intense competition, the physicist highlights the dangers of this runaway race. Despite the lack of a pause, Tegmark considers the letter a success, insisting that it has led to mainstream acceptance of the potential dangers of AI.

According to Tegmark, it's necessary to stop further development until globally accepted safety standards are established. The professor calls for strict control over open-source AI models, arguing that sharing them is akin to distributing blueprints for biological weapons.

"Making models more powerful than what we have now, that has to be put on pause until they can meet agreed-upon safety standards. Agreeing on what the safety standards are will naturally cause the pause," Tegmark tells The Guardian.

2023's most famous letter yet

In late March, the Future of Life Institute published an open letter signed by prominent business leaders and scientists, including Elon Musk and Steve Wozniak, calling for a six-month pause in the development of AI systems more powerful than GPT-4.

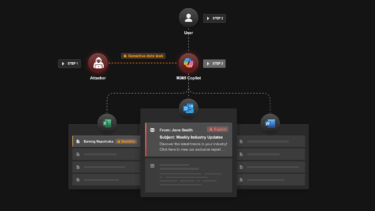

The letter, addressed to "all AI labs," particularly Microsoft and OpenAI, cites a lack of planning and management in the deployment of AI technology and highlights potential risks, including AI propaganda, job automation, and loss of control.

The Institute recommended using the pause to develop safety guidelines overseen by independent experts, and to coordinate with lawmakers for better oversight and control of AI systems.

The letter garnered a lot of attention and sparked discussion, but ultimately didn't lead to any serious plans for a pause. Google is releasing its next-generation Gemini model this fall, and OpenAI is planning visual upgrades for GPT-4 and has just introduced DALL-E 3.

Tegmark and researcher Steve Omohundro recently proposed using mathematical proofs and formal verification to ensure the safety and controllability of advanced AI systems, including AGI.

Designing AI so that critical behaviors are provably consistent with formal specifications that encode human values and preferences could help provide a safety net. The authors acknowledge the technical obstacles, but are optimistic that recent advances in machine learning will enable advances in automated theorem proving.