Meta plans to deploy its Artemis AI chip this year to reduce reliance on Nvidia GPUs

Key Points

- Meta plans to deploy a custom AI chip called "Artemis" in its data centers to reduce reliance on Nvidia chips and control the cost of AI workloads.

- The new chip is expected to go into production later this year and will be used in Meta's data centers along with Nvidia and non-Nvidia GPUs to run AI models (inference).

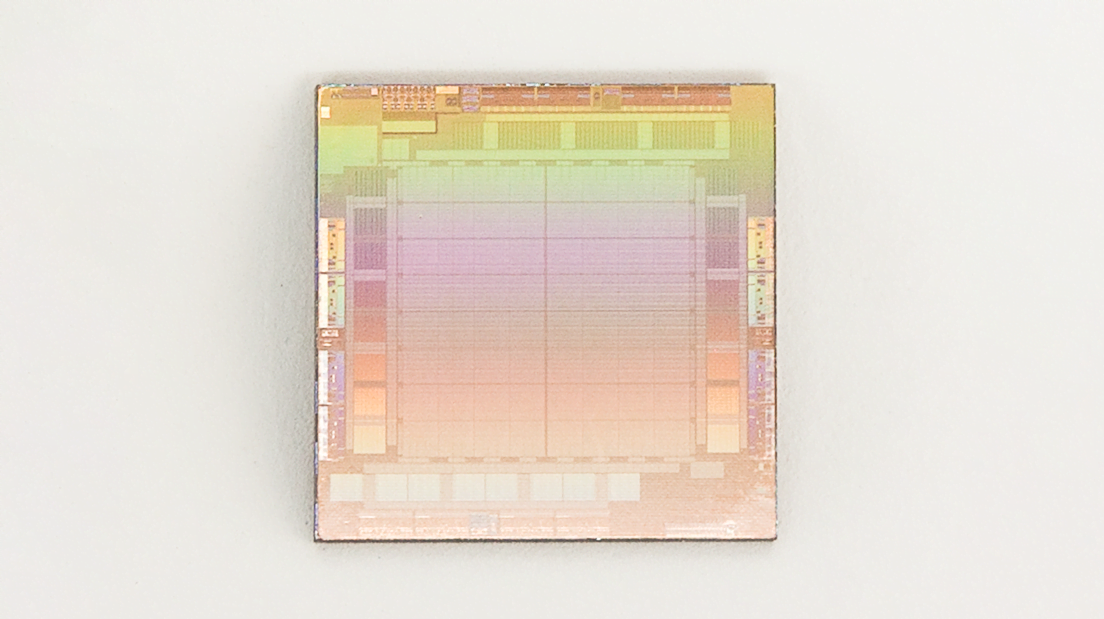

- Meta first unveiled a new chip family called the Meta Training and Inference Accelerator (MTIA) in May 2023, which is designed to speed up and reduce the cost of running neural networks.

Meta plans to deploy a customized second-generation AI chip in its data centers this year.

The new chip, code-named "Artemis," will go into production this year and be used in Meta's data centers for "inference," a fancy term for running AI models.

The goal is to reduce reliance on Nvidia chips and control the cost of AI workloads, reports Reuters.

The new chip will work with the GPUs Meta buys from Nvidia and other suppliers. For instance, it could be more efficient in running Meta's recommendation models for social networks.

Meta also offers generative AI applications in its services and is training Llama 3, an open-source model that aims to reach GPT-4 levels.

A Meta spokesperson confirmed the plan to bring the chip into production in 2024, saying, "We see our internally developed accelerators to be highly complementary to commercially available GPUs in delivering the optimal mix of performance and efficiency on Meta-specific workloads."

Meta's a big Nvidia buyer

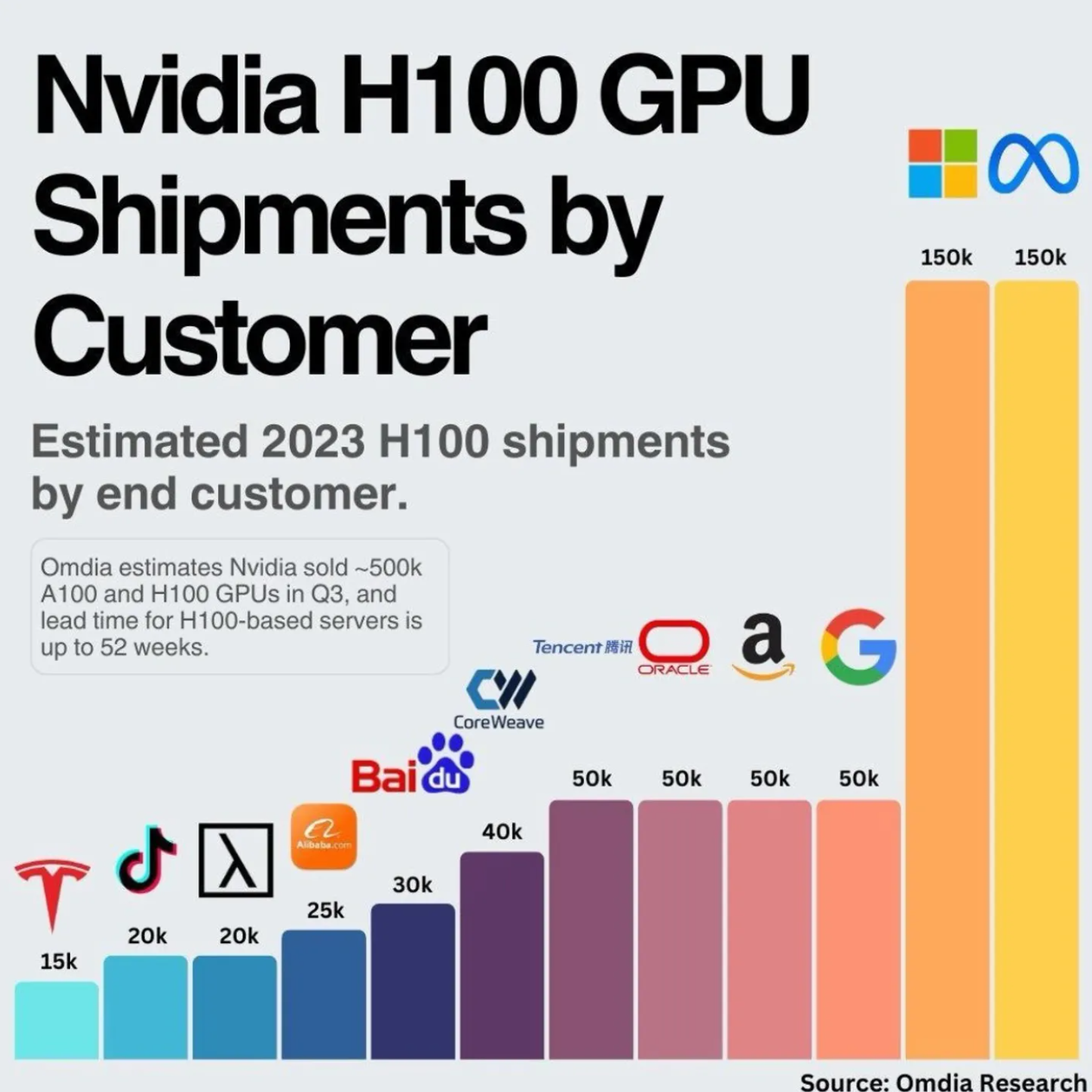

Meta CEO Mark Zuckerberg recently announced that he plans to have 350,000 Nvidia H100 GPUs in use by the end of the year, for total compute equivalent to roughly 600,000 H100s, once other GPUs are included, for running and training AI systems. This makes Meta Nvidia's largest publicly known customer after Microsoft.

Larger, more capable models mean heavier AI workloads and spiraling costs. Like Meta, companies such as OpenAI and Microsoft are trying to break this cost spiral with proprietary AI chips and more efficient models.

In May 2023, Meta first unveiled its new chip family, called the Meta Training and Inference Accelerator (MTIA), which is designed to speed up and lower the cost of running neural networks.

According to that announcement, the first MTIA chip (see cover image) was already being tested in Meta's data centers at the time and was expected to be in use by 2025. Reuters reports that Artemis is a more advanced version of that first MTIA chip.
