Meta's "Make-a-Video" turns texts into short videos

Update –

- Added information on data used for training and video resolution.

Update, October 1, 2022:

British computer scientist Simon Willison, who recently described a security flaw in GPT-3, has analyzed the video data Meta used to train the text-to-video AI. According to Willison, Meta used about ten million preview clips, complete with watermarks, from the stock platform Shutterstock. Willison makes the videos searchable in an online database.

Another ten million or so clips came from 3.3 million YouTube videos from a dataset compiled by Microsoft Research Asia, writes Andy Baio at Waxy. The two authors assume that Meta did not have the consent of the video creators to use the videos for AI training.

Baio explicitly criticizes an "academic-commercial pipeline" in which "data laundering" style datasets would be created under academic pretense and released under open-source license. These datasets would then be used in corporate research to train AI models that would later be used commercially.

Original article dated September 29, 2022:

With "Make-a-Video" Meta introduces a new AI system that can generate new videos from text, images, or videos.

In June, Meta introduced "Make-a-Scene," a multimodal AI system that can generate images from very rough sketches describing the scene layout together with a text description.

Make-a-Video is the further development of this system for moving images: In addition to text-image pairs, Meta trained the AI for this with unlabeled video data.

"The system learns what the world looks like from paired text-image data and how the world moves from video footage with no associated text," Meta writes.

Eliminating labeled video data reduced training overhead, according to Meta. The combination with text-image pairs allows the system to retain the visual diversity of current generative image models and understand how individual objects look and are referred to. Meta used publicly available image and video datasets.

Video generation via text, image, or video

Like AI image generators, Make-a-Video supports different styles, such as the ability to create stylized or photorealistic videos. The following example shows a video created with the text input "A teddy paints a portrait" and some other examples with different styles.

The system can also process individual images as input and set them in motion, or create motion between two similar images. For example, a rigid family photo becomes a (very) short family video.

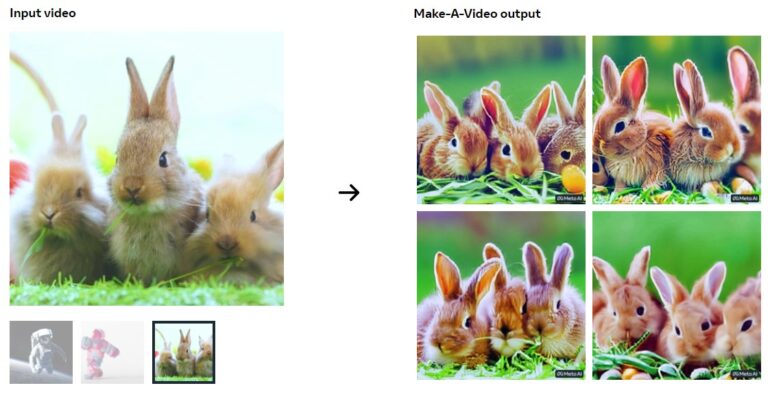

From existing videos, Make-a-Video can generate variants that approximate the motif and animation of the original, but ultimately differ significantly in details.

Qualitatively, the videos still have clearly visible weaknesses such as blurring or distortions on objects. But the first motifs created with generative image AIs also had such flaws. And we all know how that went over the last few years. In initial user tests, Make-a-Video was rated three times better than (barely existing) comparable systems in both text input representation and image quality, according to Meta. The resolution in the rated videos was 256 x 256 pixels high, the maximum resolution mentioned by Meta is 768 x 768 pixels.

Meta plans to release a public demo once the system is more secure

According to Meta's research team, Make-a-Video cannot yet make associations between text and phenomena that can only be seen in videos. As an example, Meta cites the generation of a person waving their hand from left to right or vice versa. In addition, the system cannot yet generate longer scenes that tell detailed, coherent stories.

Like all large generative AI systems, Make-a-Video also inherited social and sometimes harmful biases from training data and would likely amplify them, the researchers write. Meta said it removed NSFW content and toxic terms from the data and employs filtering systems. It also said all training data is publicly available for maximum transparency. All scenes generated with Make-a-Video carry a watermark, so it is always clear that it is an AI-generated video.

Meta announces a public demo but does not give a time frame. Currently, the model would be analyzed and tested internally to ensure that every step of a possible release is "safe and intentional".

Interested parties can express their interest in further information and access to the model here.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.