Nvidia Blackwell GPU can run GPT-4 level models up to 30x faster

Nvidia's GTC 2024 is all about generative AI - and the hardware that makes the current boom possible. With the next generation of Blackwell Nvidia plans to set new standards.

According to Nvidia CEO Jensen Huang, Blackwell will be the driving force behind the new industrial revolution. The platform promises to enable generative AI with large language models of up to several trillion parameters.

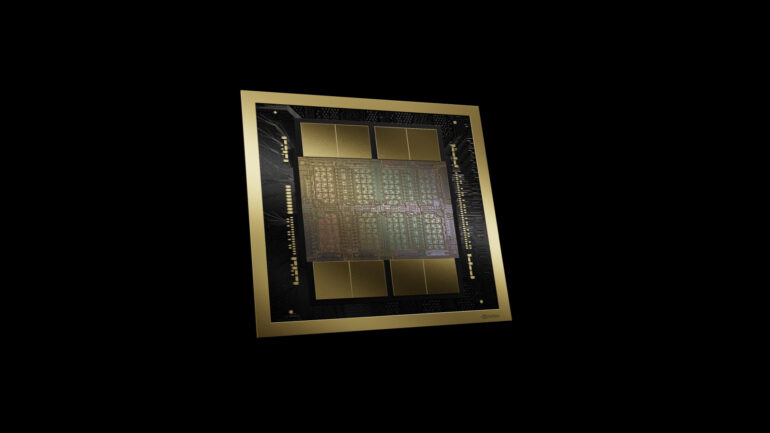

According to Nvidia, the architecture includes the world's most powerful chip with 208 billion transistors. Specifically, Blackwell combines two dies manufactured using TSMC's 4NP process with a connection speed of 10TB/second, allowing them to operate as a single CUDA GPU. In addition, Blackwell includes a second-generation Transformer Engine that enables AI applications with FP4 accuracy, enhanced NVLink communication technology for data exchange between up to 576 GPUs, and a new RAS Engine that enables AI predictive maintenance, among other features. A dedicated decompression engine is also designed to accelerate database queries.

Blackwell GPU will provide an AI computing power of 10 petaFLOPS in FP8 and 20 petaFLOPS in FP4. When using the new Transformer Engine with so-called "Micro Tensor Scaling", twice the computing power, twice the model size, and twice the bandwidth are possible. The chip is also equipped with 192 gigabytes of HBM3e memory.

Compared to the H100 GPU, Blackwell is said to offer four times the training performance, up to 25 times the power efficiency, and up to 30 times the inference performance. The latter is a clear sign that Nvidia is taking on the competition from inference-focused chips that are currently trying to steal market share from the top dog. However, this performance is only achieved with so-called mixture-of-expert models such as GPT-4; with classical large transformation models such as GPT-3, the leap is 7x. However, MoE models are becoming more and more important, Google's Gemini is also based on this principle. This significant leap is made possible by the new NVLink and NVLink Switch 7.2, which enable more efficient communication between GPUs - previously a bottleneck in MoE models.

Nvidia expects the Blackwell platform to be used by nearly all major cloud providers and server manufacturers. Companies such as Amazon Web Services, Google, Meta, Microsoft, and OpenAI will be among the first to deploy Blackwell.

New DGX SuperPOD with 11.5 ExaFLOPs

The new generation also includes a new version of the DGX SuperPOD. The DGX SuperPOD features a new, highly efficient, liquid-cooled, rack-scale architecture and delivers 11.5 ExaFLOPS of FP4-precision AI supercomputing performance and 240 terabytes of fast memory. The system can scale to tens of thousands of chips with additional racks.

At the heart of the SuperPOD is a GB200 NVL72, which connects 36 Nvidia GB200 supercomputer chips, each with 36 Grace CPUs and 72 Blackwell GPUs via Nvidia's fifth-generation NVLink to form a supercomputer. According to Nvidia, the GB200 Super Chips deliver up to 30 times the performance of the same number of Nvidia H100 Tensor Core GPUs for inference workloads with large language models.

One DGX GB200 NVL72 is - thanks to the new NVLink chip - basically "on giant GPU", Huang said. It delivers 720 PetaFLOPS for training FP8, and 1,44 ExaFLOPS for inference in FP4.

Nvidia also introduced the DGX B200 system, a platform for AI model training, tuning, and inference. The DGX B200 is the sixth generation of the air-cooled DGX design and connects eight B200 Tensor Core GPUs to CPUs. Both systems will be available later this year.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.