Sakana AI's evolutionary algorithm creates capable AI models by merging existing ones

Japanese AI startup Sakana AI has developed a method for automatically generating new AI models by combining existing models using an evolutionary algorithm. Initial results are promising.

The core idea of Sakana AI is to use natural principles such as evolution and collective intelligence to create new AI models.

The goal is to develop a machine that automatically generates customized AI models for user-defined application domains, rather than developing new models each time.

The Tokyo-based startup has now released its first AI models based on a new evolution-inspired method called "Evolutionary Model Merge".

The method uses evolutionary techniques to efficiently search for the best ways to combine models drawn from a large pool of open-source models with different capabilities.


The approach combines models in two ways. First, it recombines the layers of different models in the data flow space. Second, it remixes the weights of different models in the parameter space. The evolutionary algorithm searches the vast space of possible combinations to find new and unintuitive solutions that would be difficult to discover with conventional methods and human intuition.
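To make the parameter-space half of the idea concrete, here is a minimal sketch under loudly stated assumptions. Sakana AI's actual recipe is more elaborate; this toy version swaps in plain weighted averaging of weights and a simple (1+λ) evolution strategy. The "models" are tiny dictionaries of made-up weights, and `fitness` is a hypothetical stand-in that measures distance to a target model, where a real system would run benchmark evaluations. All names (`merge`, `fitness`, `evolve`, `model_a`, `model_b`) are illustrative only.

```python
import random

# Toy "models": each maps a parameter name to a flat list of weights.
# Hypothetical stand-ins for real checkpoints that share one architecture.
Model = dict[str, list[float]]


def merge(models: list[Model], coeffs: list[float]) -> Model:
    """Parameter-space merge: weighted average of matching weight tensors."""
    total = sum(abs(c) for c in coeffs) or 1.0
    weights = [abs(c) / total for c in coeffs]  # normalize so weights sum to 1
    merged: Model = {}
    for name in models[0]:
        merged[name] = [
            sum(w * m[name][k] for w, m in zip(weights, models))
            for k in range(len(models[0][name]))
        ]
    return merged


def fitness(model: Model, target: Model) -> float:
    """Hypothetical task score: negative squared error to a target model.
    A real system would evaluate the merged model on benchmarks instead."""
    return -sum(
        (a - b) ** 2
        for name in target
        for a, b in zip(model[name], target[name])
    )


def evolve(models, target, generations=200, offspring=8, sigma=0.1, seed=0):
    """(1+lambda) evolution strategy over the merge coefficients."""
    rng = random.Random(seed)
    best = [1.0] * len(models)  # start from a uniform average
    best_fit = fitness(merge(models, best), target)
    for _ in range(generations):
        for _ in range(offspring):
            # Mutate every coefficient with Gaussian noise.
            child = [c + rng.gauss(0.0, sigma) for c in best]
            child_fit = fitness(merge(models, child), target)
            if child_fit > best_fit:  # keep a child only if it improves
                best, best_fit = child, child_fit
    return best, best_fit


# Usage: two toy parent models and a target that a 75/25 blend reproduces.
model_a = {"layer0": [1.0, 0.0], "layer1": [0.0, 2.0]}
model_b = {"layer0": [0.0, 1.0], "layer1": [2.0, 0.0]}
target = merge([model_a, model_b], [0.75, 0.25])
coeffs, score = evolve([model_a, model_b], target)
```

Starting from an even 50/50 blend, the search should drift toward coefficients whose normalized ratio is close to 75/25. Merging in the data flow space would instead evolve which layers, from which parent, a token passes through; that variant is not shown here.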

Combined AI models achieve SOTA results on some benchmarks

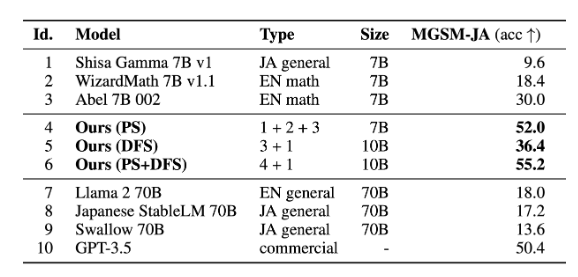

To test the method, Sakana AI automatically developed a Japanese Large Language Model (LLM) with mathematical capabilities and a Japanese Vision Language Model (VLM).

Surprisingly, both models achieved state-of-the-art results on several LLM and vision benchmarks, even though they were not explicitly optimized to perform well on these benchmarks.

In particular, the 7-billion-parameter Japanese mathematical LLM even outperformed some previous 70-billion-parameter Japanese SOTA LLMs on a number of Japanese LLM benchmarks. Sakana AI believes that this experimental Japanese mathematical LLM is good enough to be used as a general purpose Japanese LLM.

The Japanese VLM is also remarkably good at handling culture-specific content and achieves excellent results on a Japanese dataset of image-text pairs.

The method can also be applied to diffusion models for image generation. Sakana AI reports preliminary results from the development of a high-quality, lightning-fast Japanese SDXL model that uses only four diffusion steps.

Sakana AI is releasing three Japanese foundation models on Hugging Face and GitHub:

- Large language model (EvoLLM-JP)

- Vision language model (EvoVLM-JP)

- Image generation model (EvoSDXL-JP, coming soon)

Sakana AI sees the combination of neuroevolution, collective intelligence and foundation models as a promising long-term research approach. It could enable large organizations to develop custom AI models faster and more cost-effectively by leveraging the growing number of open-source AI models before investing massive resources in fully proprietary models, according to the startup.

Sakana AI co-founded by AI celebrity

Sakana AI is a Tokyo-based startup founded by former Google AI experts Llion Jones and David Ha to develop generative AI models inspired by nature. These models are designed to generate various forms of content such as text, images, code, and multimedia.

The founders aim to create AI systems that are sensitive and adaptable to changes in their environment, similar to natural systems with collective intelligence. This represents a departure from traditional AI models, which are often designed as immutable structures.

Co-founder Jones is a co-author of the well-known 2017 research paper "Attention Is All You Need," which introduced the transformer architecture for deep learning behind many of today's AI successes. The startup, which has raised $30 million in seed funding from investors including Lux Capital and Khosla Ventures, aims to make Tokyo an AI hub on a par with San Francisco, home of OpenAI, and London, home of DeepMind.
