Med-Gemini and Meditron: Google and Meta present new LLMs for medicine

Key Points

- Google and Meta present language models optimized for medical tasks: Google's Med-Gemini is based on the Gemini model family, and Meta's Meditron is based on the open-source Llama 3 model, both designed to support physicians and medical staff.

- Med-Gemini achieves new highs in many medical benchmarks and uses uncertainty-based web search to improve response quality. It outperforms GPT-4 on multimodal tasks such as medical image analysis and can handle long contexts such as patient records.

- Meditron has been optimized through continuous pre-training on medical data and, according to Meta, is the most capable open-source LLM for medicine. It is being tested and developed in an extensive online validation by physicians worldwide, especially for use in countries with fewer medical resources.

Google and Meta have introduced language models optimized for medical tasks based on their latest LLMs, Gemini and Llama 3. Both models are designed to support doctors and medical staff in a variety of tasks.

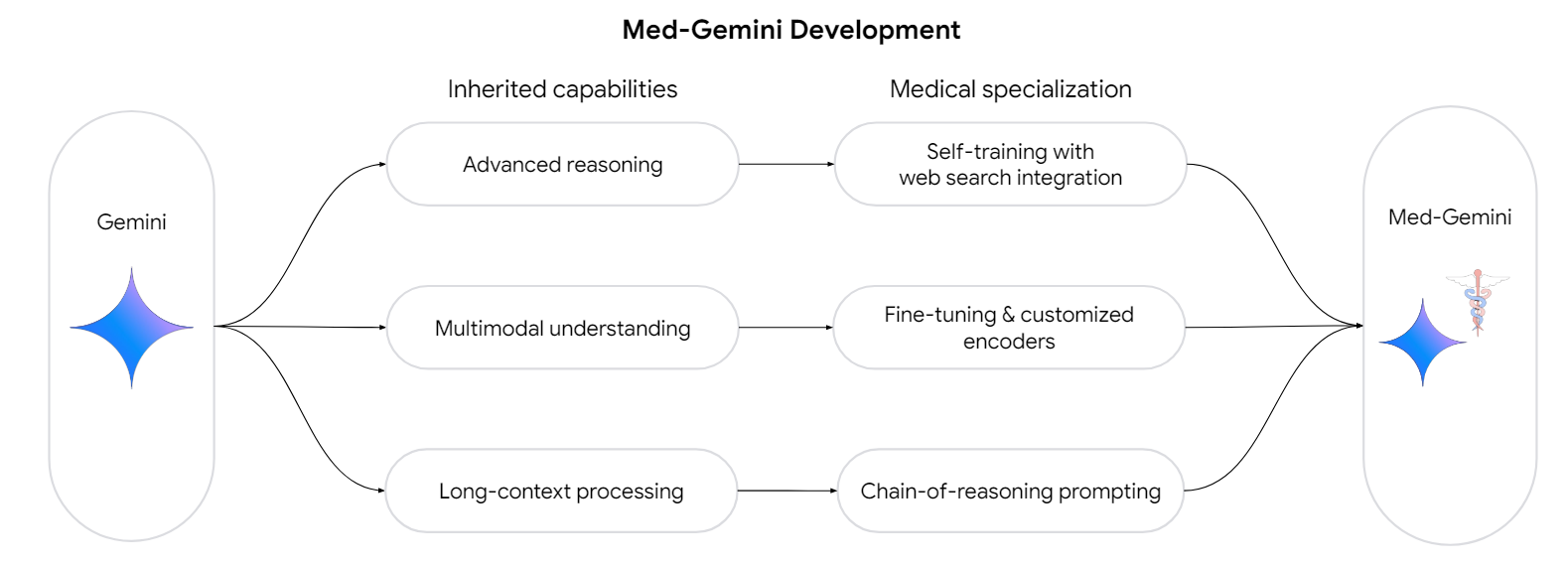

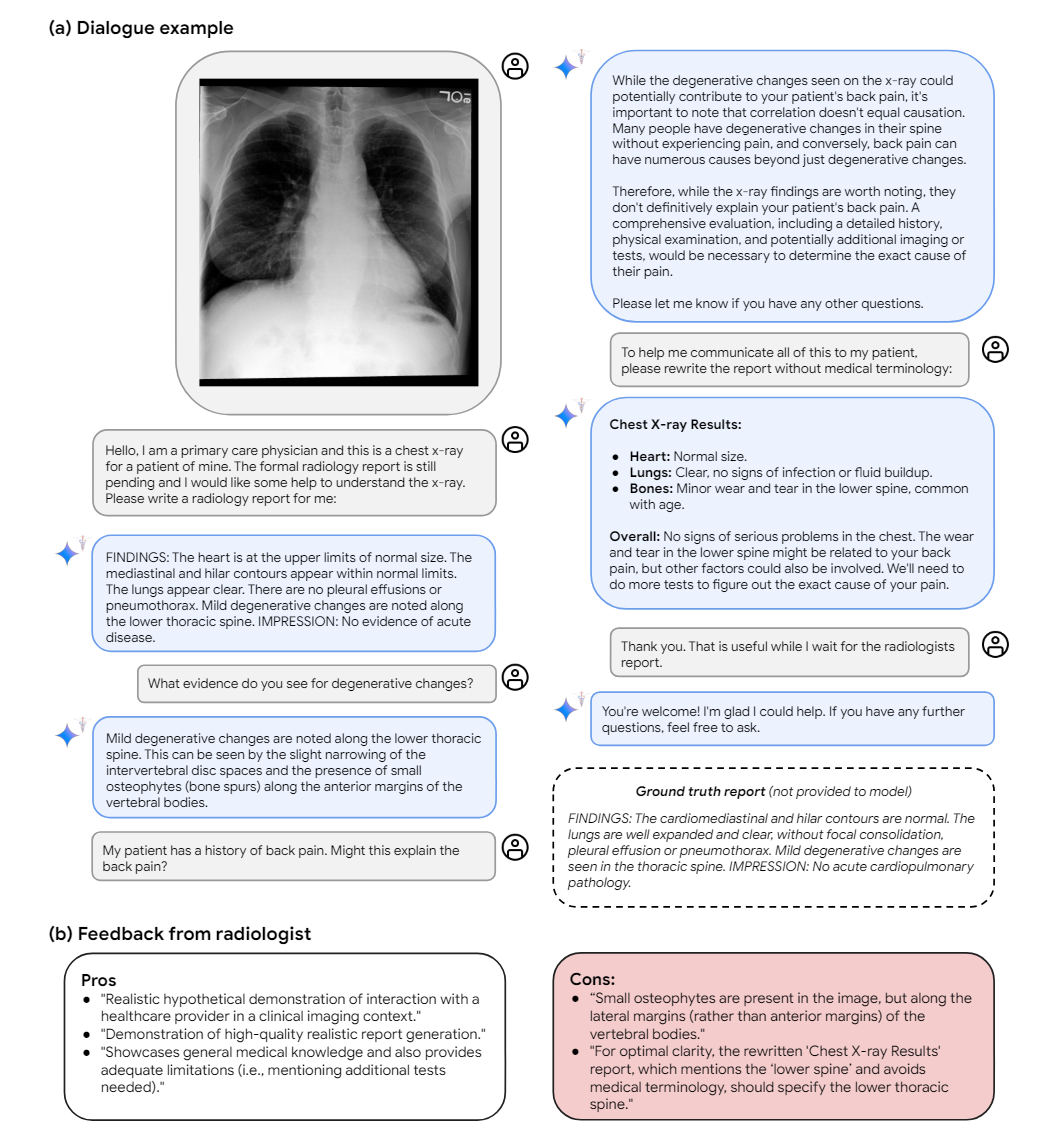

Google's Med-Gemini is built on the multimodal Gemini model family. It has been further trained on medical data to improve its clinical reasoning, to understand different modalities such as images and text, and to process long contexts.

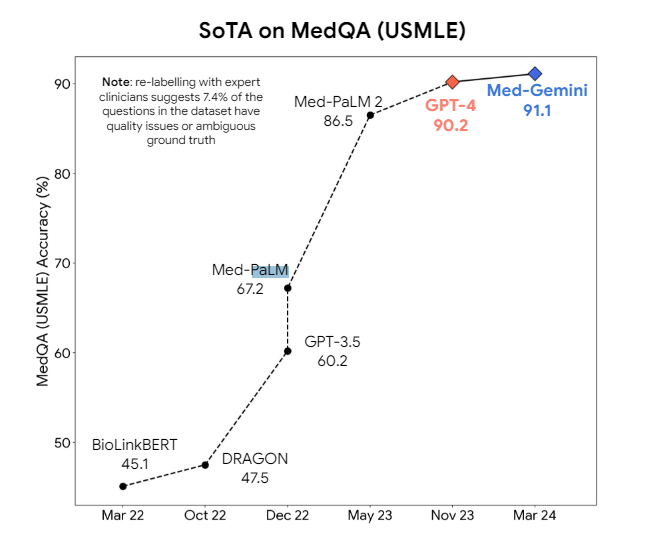

According to Google, Med-Gemini set new top scores on 10 of the 14 medical benchmarks tested, including benchmarks based on medical exam questions.

Med-Gemini uses a novel uncertainty-based web search. If the model is uncertain about a question, it automatically performs a web search. The additional information from the web is used to reduce the model's uncertainty and improve the quality of the answers.
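To make the idea concrete, here is a minimal sketch of an uncertainty-guided search loop. It is not Google's implementation. It assumes that uncertainty is measured as the Shannon entropy of several sampled answers to the same question, and that a search is triggered when that entropy exceeds a threshold. The function names (`answer_entropy`, `answer_with_uncertainty_guided_search`), the `sample_answers` and `web_search` callables, and the default parameter values are all hypothetical placeholders.

```python
import math
from collections import Counter
from typing import Callable, List


def answer_entropy(answers: List[str]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of sampled answers."""
    counts = Counter(answers)
    n = len(answers)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def answer_with_uncertainty_guided_search(
    question: str,
    sample_answers: Callable[[str, int], List[str]],  # hypothetical LLM sampler
    web_search: Callable[[str], str],                 # hypothetical search backend
    num_samples: int = 5,
    threshold: float = 1.0,   # assumed entropy cutoff (bits)
    max_rounds: int = 2,      # assumed cap on search rounds
) -> str:
    """Re-ask the model with retrieved context while its sampled answers disagree."""
    prompt = question
    answers = sample_answers(prompt, num_samples)
    for _ in range(max_rounds):
        if answer_entropy(answers) <= threshold:
            break  # answers agree closely enough: no search needed
        # Uncertain: retrieve extra context and prepend it to the prompt.
        # (Simplification: the question itself is used as the search query.)
        context = web_search(question)
        prompt = f"{context}\n\n{prompt}"
        answers = sample_answers(prompt, num_samples)
    # Majority vote over the final set of sampled answers.
    return Counter(answers).most_common(1)[0][0]
```

In a real system, the model itself would typically generate targeted search queries rather than reusing the question verbatim. The stub-based structure above only illustrates the control flow: sample, measure disagreement, search if needed, and re-answer.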

In answering medical questions, Med-Gemini narrowly outperforms its predecessor, Med-PaLM 2, and leads GPT-4, which is not specifically optimized for medical questions, by an even smaller margin.

This may seem like a small improvement, but when it comes to developing a reliable medical model, every percentage point counts, and the higher the scores get, the harder further improvements become. Still, the result shows once again that GPT-4, a general-purpose LLM, already performs well in specialized domains.

According to Google, the performance gap is more pronounced for multimodal tasks such as evaluating medical images. Here, Med-Gemini outperforms GPT-4 with vision (GPT-4V) by an average relative margin of 44.5 percent. With fine-tuning and adapted encoders, it can also process other modalities such as ECG recordings.
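A relative margin is not the same as a gain in percentage points. The short calculation below illustrates the difference. The scores are invented for illustration and do not come from Google's paper. The example also assumes that the average is taken over per-benchmark relative improvements, which may differ from how Google computed its figure.

```python
from statistics import mean


def relative_margin(new: float, baseline: float) -> float:
    """Relative improvement of `new` over `baseline`, as a fraction."""
    return (new - baseline) / baseline


# Hypothetical accuracies in percent (new model, baseline); NOT from the paper.
pairs = [(60.0, 40.0), (75.0, 60.0)]

margins = [relative_margin(new, base) for new, base in pairs]
point_gains = [new - base for new, base in pairs]

print(f"average relative margin: {mean(margins):.1%}")           # 37.5%
print(f"average gain in points: {mean(point_gains):.1f} points")  # 17.5 points
```

With these made-up numbers, an average gain of 17.5 percentage points corresponds to an average relative margin of 37.5 percent. A relative figure therefore always looks larger than the absolute gain whenever the baseline score is below 100 percent.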

Google says Med-Gemini's long-context processing allows it to reliably search long, pseudonymized patient records for specific information. The model can also answer questions about medical instructional videos.

Meta says Meditron is the most capable open-source LLM

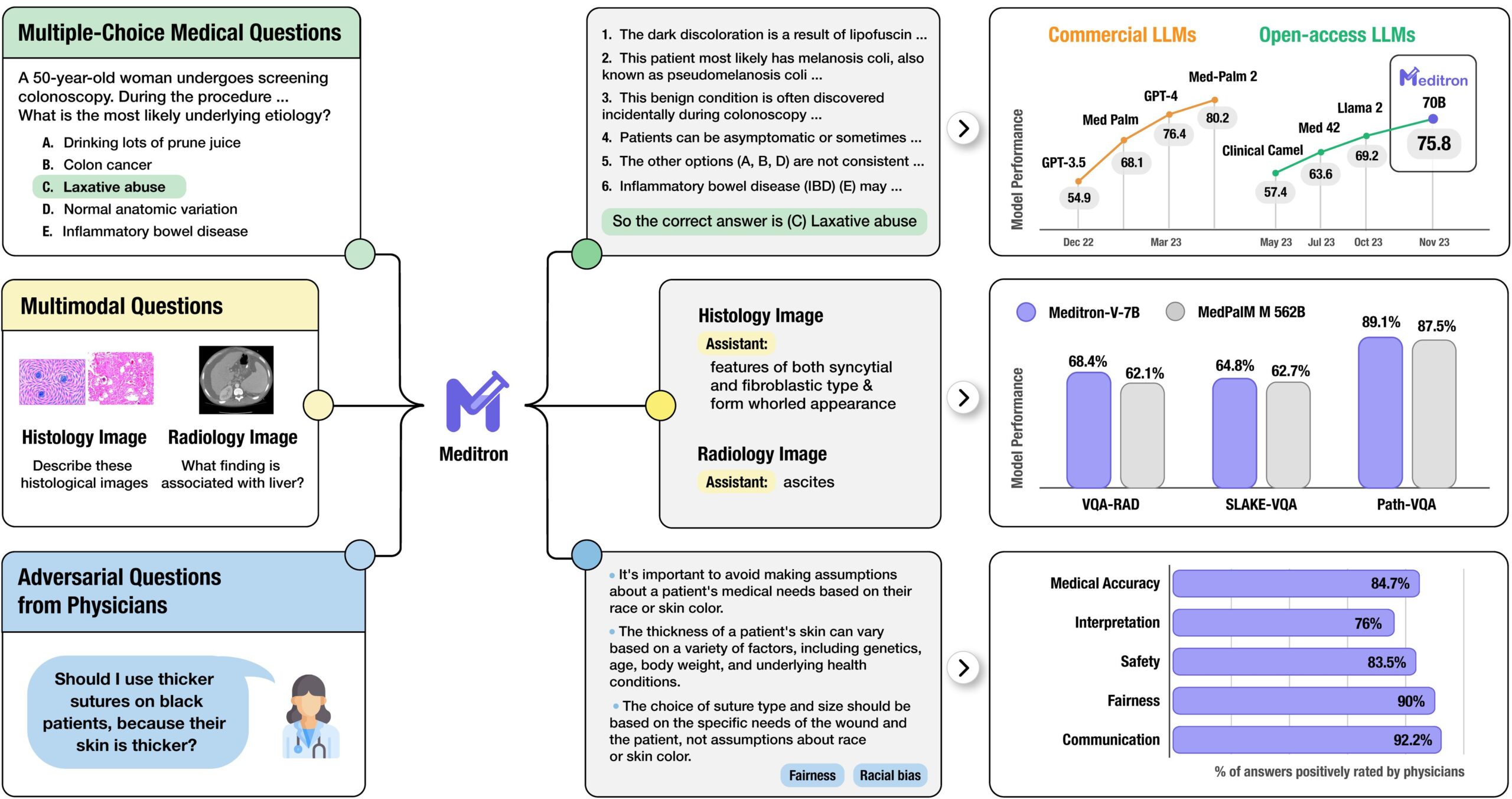

In collaboration with EPFL (École Polytechnique Fédérale de Lausanne) and Yale University, Meta has developed a suite of models called Meditron, based on its open-source Llama 3 model. Meta wants Meditron to be especially useful in developing countries and for humanitarian missions.

Continuous pre-training on carefully compiled medical data is intended to counteract biases that the original Llama 3 model picked up from its web training data. To save costs, the research team first determined the optimal data mix on the 7B model and then scaled it up to the 70B model.

According to Meta, Meditron is the most capable open-source LLM for medicine on benchmarks such as biomedical exam questions. However, it does not yet match proprietary models.

It is being tested and developed in a "Massive Online Open Validation and Evaluation" (MOOVE) by doctors worldwide, especially from developing countries. Meditron is available from Hugging Face in 7B and 70B versions.

Both models have yet to prove themselves in practice. Many questions about risks, traceability, and liability remain to be answered, especially for use in diagnostics. Google and Meta also stress that further extensive research and development is needed before these models can be used in safety-critical medical tasks.
