Researchers explore how generative AI could help you feel better

A new study shows how people integrate generative AI systems like ChatGPT into their self-care practices. The researchers identified five practices ranging from seeking advice to creative self-expression.

Researchers from the University of Edinburgh, Queensland University of Technology and King's College London have looked at how people use AI tools such as ChatGPT and DALL-E in their self-care routines.

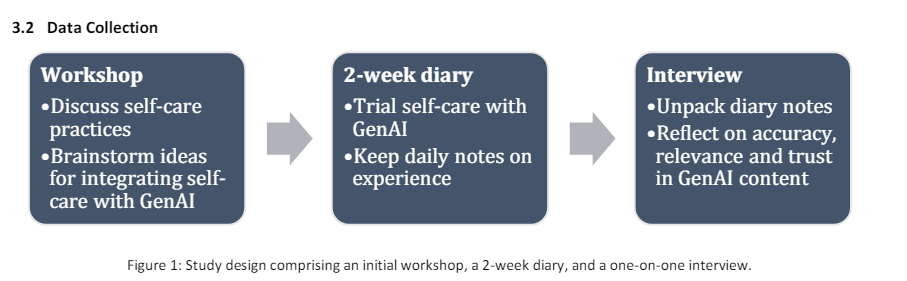

They conducted workshops, diary studies and interviews with five researchers and 24 participants who regularly practiced self-care.

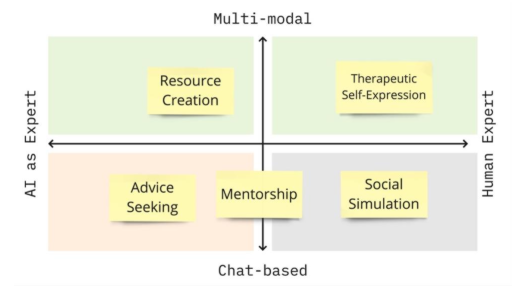

The study found five ways people use AI-generated content for self-care: seeking advice, mentoring, making resources, doing social simulations, and self-expression. These practices differ in where the knowledge comes from and what modalities are used.

Seeking advice was the most common practice. Participants asked ChatGPT a question, for example about dealing with stress, nutrition, or sleep habits. Compared with results from general internet searches, the AI's answers were more personal and phrased as suggestions rather than facts.

Mentoring took this a step further: participants talked to the AI system over days and weeks about different parts of the same topic, for example treating it as a personal trainer or sleep coach.

The participants liked the AI mentor because it was always there, and they felt it wouldn't judge them. But they didn't see it as a replacement for human mentors.

When making resources, participants used the AI's ability to work with different types of content to make things like guided meditations, coloring pictures or quizzes. They then used these for their own self-care, and sometimes also to help family and friends.

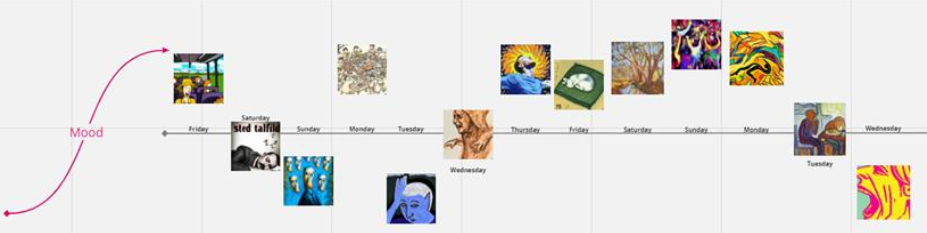

One person created a mood diary by using an AI image generator to express his feelings and mapping the images on an online whiteboard.

In social simulations, participants used ChatGPT to play out social situations and scenarios. This let them try different approaches in a safe space before using them in real life.

Lastly, therapeutic self-expression included creative activities to show feelings, for example through images or music made by the AI. The AI's ability to work with different content types was essential here, as it let participants show parts of their feelings that they couldn't easily put in words.

Listening without reservations

Trust in the AI systems was an important consideration. For the most part, participants found the AI-generated content to be accurate and appropriate for their self-care, although they sometimes doubted the information or found it too general. Some participants showed a willingness to share intimate personal information with the AI because it was perceived as nonjudgmental.

"I felt like I could be more honest with the AI than I would at a psychologist. Just because, like, when I'm in a real person situation, it's still a person that's there. So you're still saying it in a way that you're being respectful towards the person and stuff, whereas in here [on ChatGPT], you don't have to care about anything. You're just saying your raw, honest emotions. And you know that it's not gonna offend anyone because there's no one to offend."

Opinion of a 25-year-old study participant

The researchers see potential here, but also point out the risk of getting too emotionally attached to AI systems. More research is needed to better understand the opportunities and risks of these technologies for self-care.

The study gives a framework for new self-care practices with generative AI and offers starting points for designing new self-care technologies.

"We argue that content from GenAI not only offers important self-care information (as illustrated through advice-seeking and mentoring) but that it allows people to be creative through the ability to build resources and simulations, and to express themselves," the researchers write.

Most participants used free tools such as ChatGPT-3.5 or Bing Image Creator. With new voice modes and more multimodal capabilities, self-care opportunities are likely to increase, as models can easily switch between spoken language and image generation and the images match the prompts more closely.
