Meta takes on OpenAI's GPT-4o with Llama 3.1 405B, its largest open-source LLM to date

Key Points

- Meta has released Llama 3.1, the largest open-source language model to date, with 405 billion parameters. The model outperforms GPT-4o in benchmarks on both English-language tasks and tasks that require knowledge of multiple languages.

- The Llama 3.1 models support eight languages and have a context length of 128,000 tokens, significantly larger than the previous Llama 3 models. The smaller Llama 3.1 models, with 70 and 8 billion parameters, have been optimized using data generated by the 405B model.

- In addition to Llama 3.1, Meta is introducing new security tools, including Llama Guard 3 for input and output moderation, Prompt Guard for prompt injection protection, and CyberSecEval 3 for cybersecurity risk assessment. The company has also built an extensive partner ecosystem to deploy, optimize and customize Llama 3.

Meta has released the largest model in its open-source Llama language model series to date: Llama 3.1 405B, which boasts 405 billion parameters.

The entire Llama 3 family has also been updated to version 3.1, bringing support for eight languages and a significantly expanded context length of 128,000 tokens compared to the Llama 3 models released in April.

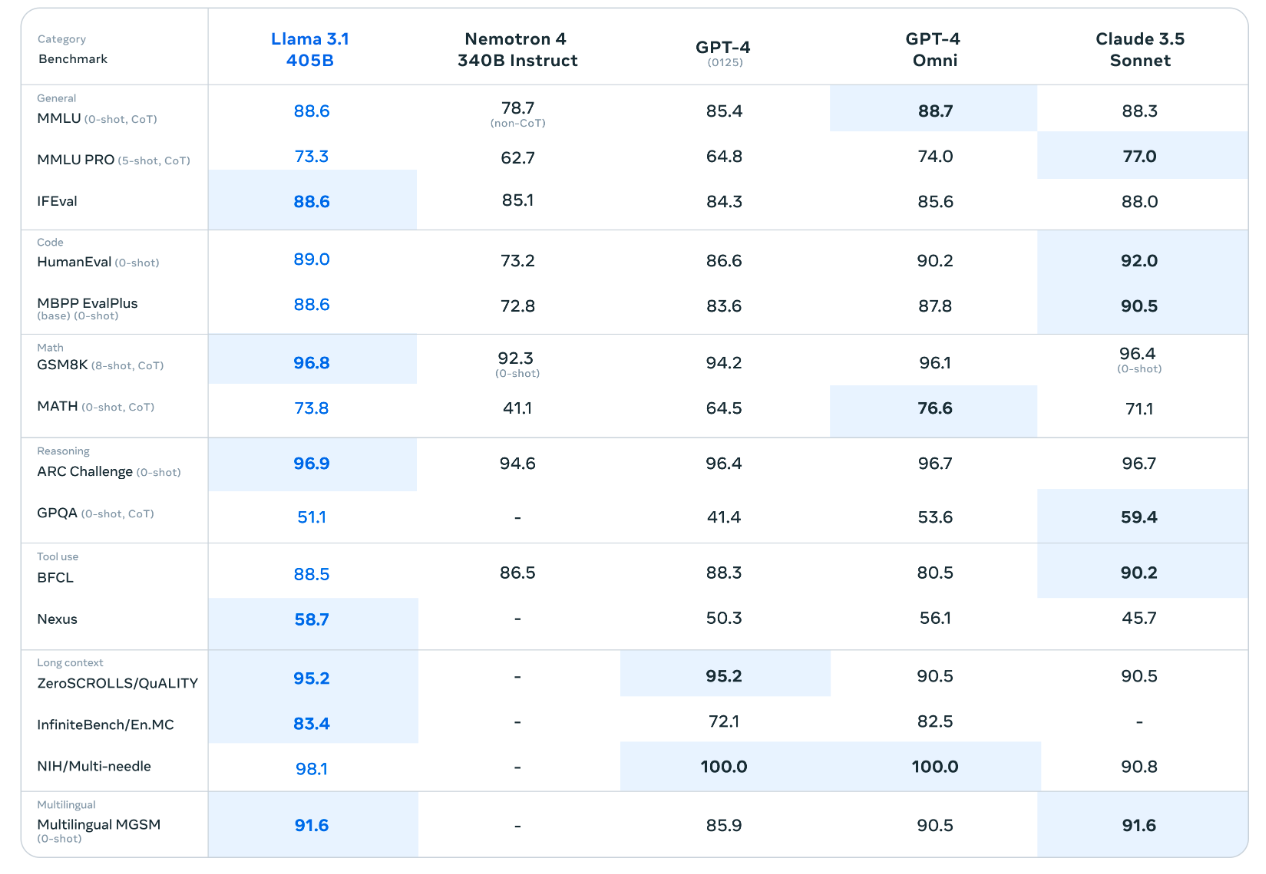

According to Meta, Llama 3.1 405B is the first open-source frontier-level AI model. In common benchmarks, it outperforms GPT-4o and an earlier version of GPT-4 on both English-language tasks and tasks requiring knowledge of multiple languages. It's also on a similar level to Anthropic's Claude 3.5 Sonnet.

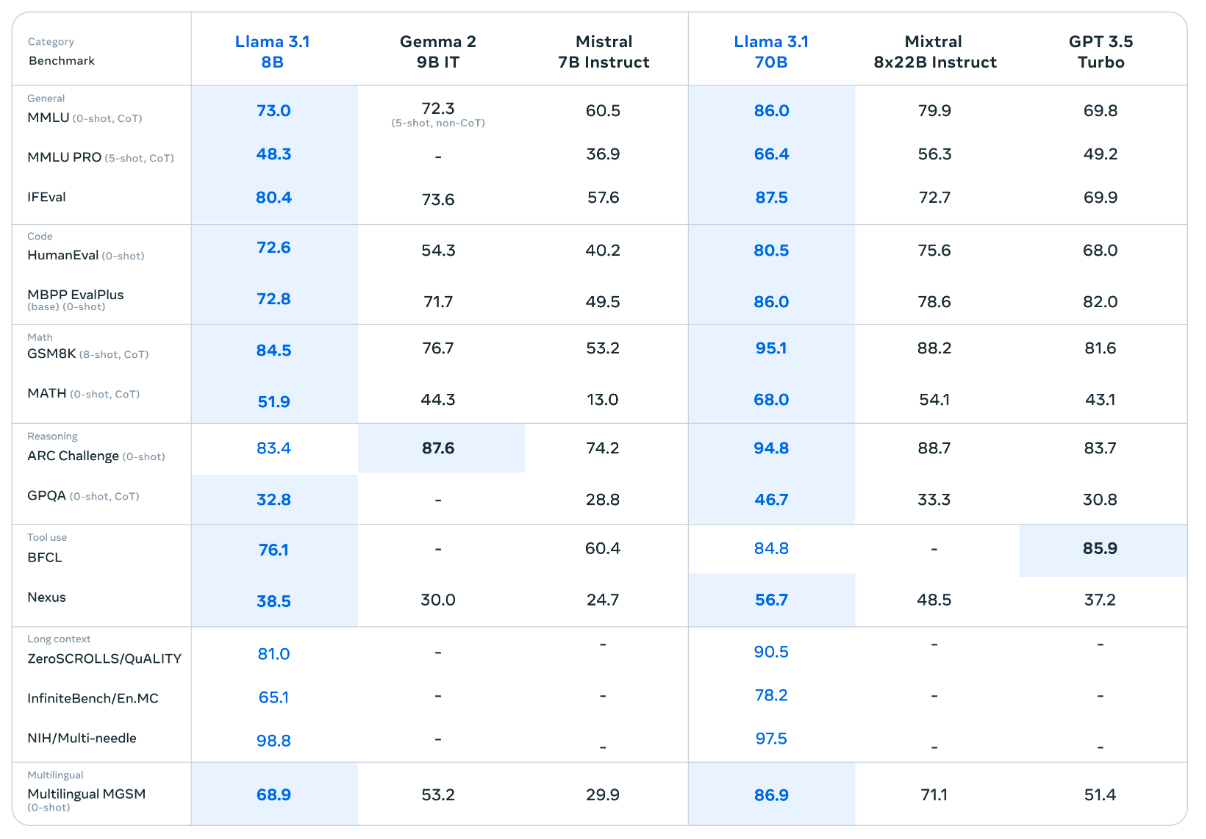

The smaller Llama 3.1 models, with 70 and 8 billion parameters, were optimized using data generated by the 405B model. They are at least on par with comparable open-source models and with GPT-3.5 Turbo, which was recently replaced by the much more powerful and cheaper GPT-4o mini.

These benchmark results indicate that Llama 3.1 405B is generally on par with commercial models, though real-world performance may vary.

Meta is also introducing new security tools alongside the release, including Llama Guard 3 for input and output moderation, Prompt Guard for prompt injection protection, and CyberSecEval 3 for cybersecurity risk assessment.

Meta is releasing its largest Llama model with code and weights, and allows commercial use under the Llama license. The model can be fine-tuned and distilled into other models, and its outputs can be used to train other AI models. Meta has built an extensive partner ecosystem to deploy and optimize Llama 3.

A separate commercial license from Meta is only required for companies with more than 700 million monthly active users. Given that Meta has invested billions of dollars in developing and training its AI models, why is the company doing this?

The company likely hopes to attract developers to its own "AI ecosystem," much like Google did with Android. Meta is also integrating the models into its own AI products, such as its "Meta AI" assistant. Meta's products will improve as the community improves the models.

Meta is also partially undermining the business models of Microsoft, Google, and others who could overtake Meta in AI because they have better infrastructure and complementary business models, such as cloud growth. But right now, it's hitting OpenAI and, more generally, pure-play model providers the hardest.

In an open letter, Meta CEO Mark Zuckerberg makes the case for open-source AI, comparing it to the rise of Linux over closed Unix systems. He predicts that open models like Llama will lead the industry starting next year because of their adaptability and cost-effectiveness.

"Last year, Llama 2 was only comparable to an older generation of models behind the frontier. This year, Llama 3 is competitive with the most advanced models and leading in some areas. Starting next year, we expect future Llama models to become the most advanced in the industry," Zuckerberg writes.

The release of Llama 3 may push OpenAI and others to follow up more quickly with more powerful models, if that is technically possible. However, aside from cost and efficiency gains, recent advances in language models have been incremental, and Llama 3 doesn't appear to bring significant advances to the current holy grail of the AI industry: combining logical reasoning with the knowledge and language capabilities of large multimodal models.
