Researchers compress 3D object data into 64x64 pixel images for 3D content creation

Key Points

- Researchers at Simon Fraser University and the City University of Hong Kong have developed a method called "Object Images" (Omages) to create 3D models with textures from 64x64 pixel 2D images.

- Omages consist of 12 channels that store geometry and texture information. Complete 3D models with PBR materials can be reconstructed from these compact images.

- In tests with a diffusion model trained on about 8,000 3D models, the method achieved quality comparable to current 3D generation methods.

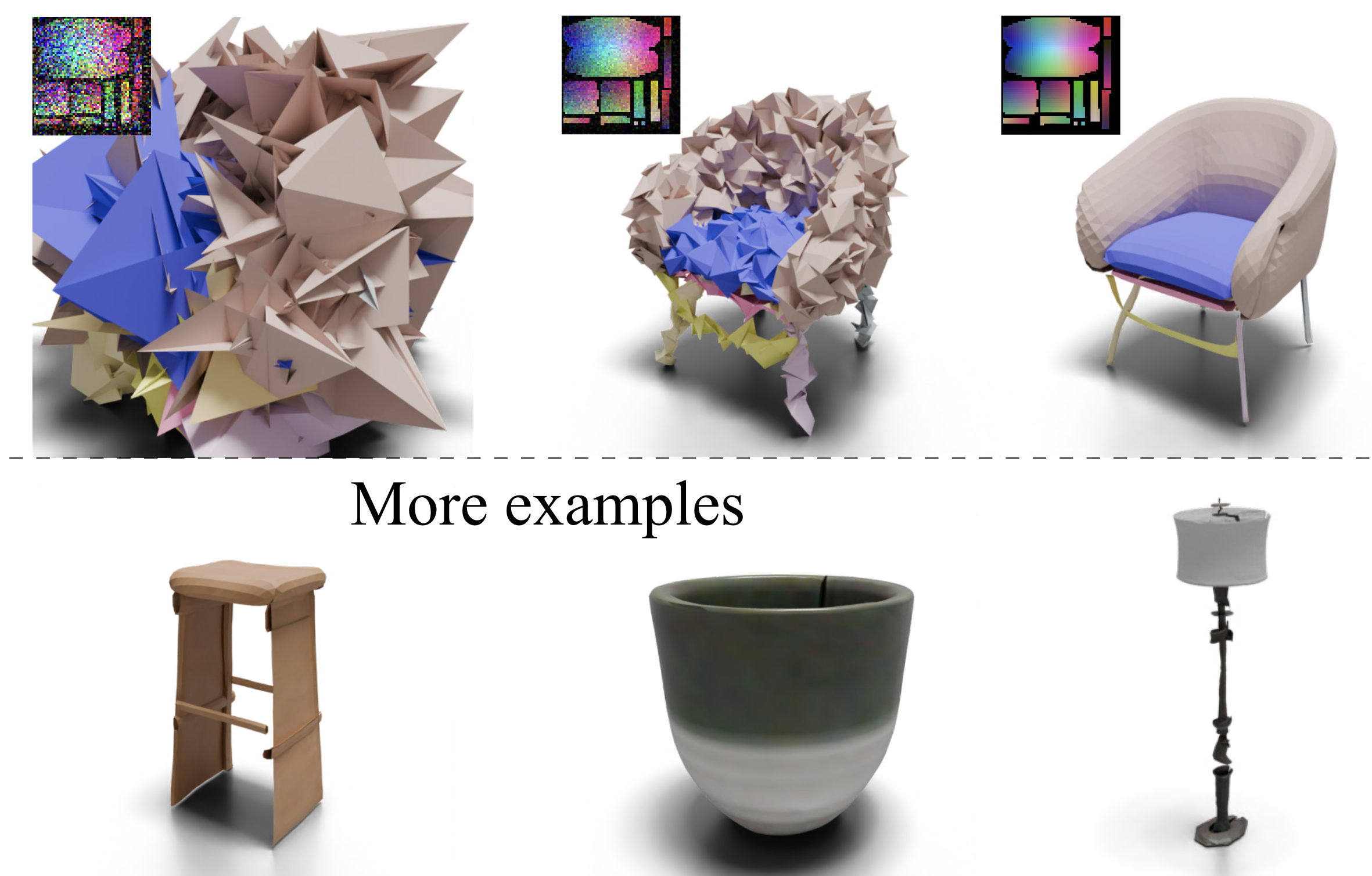

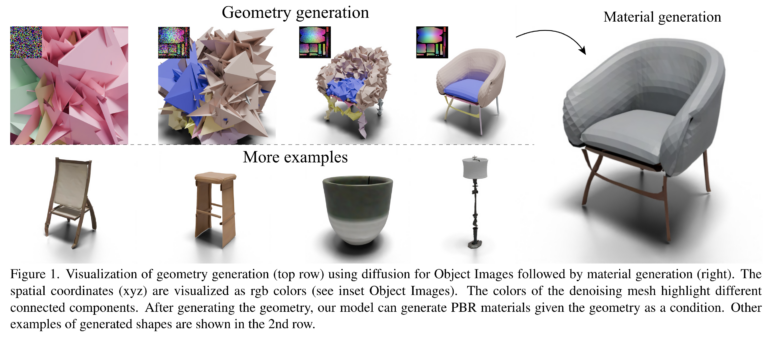

Researchers have developed a new technique to create realistic 3D models with textures from small 2D images. This approach could change how 3D content is created.

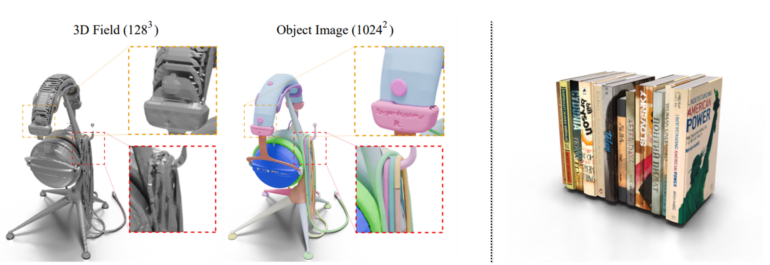

A team from Simon Fraser University and the City University of Hong Kong introduced a method called "Object Images" (Omages) that can efficiently generate 3D models with UV textures from 2D images. The technique condenses the geometry, appearance, and surface structure of a 3D object into a 64x64 pixel image.

This compact representation allows complex 3D shapes to be converted into a more manageable 2D format. Complete 3D models can then be reconstructed from these images.
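To make the idea of a 12-channel image concrete, here is a minimal Python sketch of how per-pixel surface attributes could be packed into a 64x64 grid. The channel order used here (position, occupancy, albedo, normal, metallic, roughness) is an assumption for illustration only; the article says just that the channels hold geometry, texture, and normal-map information. The helper names `empty_omage` and `write_texel` are hypothetical.

```python
# Hypothetical 12-channel layout for an Object Image (an assumption made for
# illustration; the article does not specify the exact channel ordering).
CHANNELS = {
    "position": (0, 3),     # x, y, z coordinates of the surface point
    "occupancy": (3, 4),    # 1.0 if the pixel lies on the surface, else 0.0
    "albedo": (4, 7),       # base color r, g, b
    "normal": (7, 10),      # surface normal nx, ny, nz
    "metallic": (10, 11),   # PBR metalness
    "roughness": (11, 12),  # PBR roughness
}
NUM_CHANNELS = 12
RES = 64

# Sanity check: the assumed ranges cover channels 0..11 with no gaps or overlap.
assert sorted(i for a, b in CHANNELS.values() for i in range(a, b)) == list(range(NUM_CHANNELS))


def empty_omage(res: int = RES) -> list:
    """Return a res x res image whose pixels are 12-element float lists, all zero."""
    return [[[0.0] * NUM_CHANNELS for _ in range(res)] for _ in range(res)]


def write_texel(img: list, row: int, col: int, **attrs) -> None:
    """Store named attributes (e.g. position=(x, y, z)) in one pixel's channels."""
    for name, values in attrs.items():
        start, stop = CHANNELS[name]
        if len(values) != stop - start:
            raise ValueError(f"{name} expects {stop - start} values, got {len(values)}")
        img[row][col][start:stop] = [float(v) for v in values]


# Usage: record one surface sample of a red, fully rough, non-metallic point.
img = empty_omage()
write_texel(img, 10, 20,
            position=(0.1, -0.3, 0.7), occupancy=(1,),
            albedo=(1.0, 0.0, 0.0), normal=(0.0, 0.0, 1.0),
            metallic=(0,), roughness=(1,))
print(img[10][20])
# -> [0.1, -0.3, 0.7, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 1.0]
```

Keeping every attribute in the same pixel grid is what lets a 2D image model treat geometry and materials together, since a single array holds all of them.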

Video: Yan, Lee et al.

Object Images enable 3D models from tiny images

Each Object Image contains 12 channels that store information about the object, such as geometry, texture, and normal maps. Processing this 2D image yields a full 3D model with PBR materials.
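The reconstruction step can be sketched with the grid-connectivity approach of classic geometry images: every occupied pixel becomes a vertex, and every 2x2 block of occupied pixels becomes two triangles. This is a minimal sketch under an assumed channel layout, using a hypothetical `omage_to_mesh` helper; the article does not describe how the authors actually extract meshes or attach PBR materials.

```python
from typing import Dict, List, Tuple

# Hypothetical channel indices (an assumed layout, not given in the article).
POS = slice(0, 3)     # x, y, z position
OCC = 3               # occupancy: values above the threshold mean "on the surface"
ALBEDO = slice(4, 7)  # base color r, g, b

Pixel = List[float]
Image = List[List[Pixel]]


def omage_to_mesh(img: Image, threshold: float = 0.5):
    """Reconstruct a triangle mesh from a grid of 12-channel pixels.

    Each occupied pixel becomes a vertex; every 2x2 block of occupied pixels
    becomes two triangles, as in classic geometry images.
    """
    res = len(img)
    vertex_id: Dict[Tuple[int, int], int] = {}
    vertices: List[Tuple[float, ...]] = []
    colors: List[Tuple[float, ...]] = []
    for r in range(res):
        for c in range(res):
            px = img[r][c]
            if px[OCC] > threshold:
                vertex_id[(r, c)] = len(vertices)
                vertices.append(tuple(px[POS]))
                colors.append(tuple(px[ALBEDO]))

    faces: List[Tuple[int, int, int]] = []
    for r in range(res - 1):
        for c in range(res - 1):
            quad = [(r, c), (r, c + 1), (r + 1, c), (r + 1, c + 1)]
            if all(q in vertex_id for q in quad):
                tl, tr, bl, br = (vertex_id[q] for q in quad)
                faces.append((tl, bl, tr))  # upper-left triangle
                faces.append((tr, bl, br))  # lower-right triangle, same winding
    return vertices, colors, faces


# Usage: a 4x4 image whose top-left 3x3 pixels are occupied
# (positions derived from the pixel grid, gray albedo).
demo = [[[0.0] * 12 for _ in range(4)] for _ in range(4)]
for r in range(3):
    for c in range(3):
        demo[r][c][POS] = [c / 2, r / 2, 0.0]
        demo[r][c][OCC] = 1.0
        demo[r][c][ALBEDO] = [0.5, 0.5, 0.5]
verts, cols, tris = omage_to_mesh(demo)
print(len(verts), len(tris))  # -> 9 8
```

In this sketch, triangles are only formed inside fully occupied 2x2 blocks, so separate UV patches stored in the same image are not stitched together. Unconnected patch borders of this kind are one way gaps can appear in a surface reconstructed from such an image.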

To test their method, the researchers trained a diffusion model on the Amazon Berkeley Objects dataset, which includes about 8,000 high-quality 3D models. The team reports that the generated 3D shapes and textures are comparable in quality to those of current 3D generation methods.

However, the approach has some limitations: the generated 3D models still have gaps, training requires high-quality 3D data, and the current 64x64 pixel resolution caps the quality the final results can reach.
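A quick back-of-the-envelope calculation shows why resolution matters. Assuming one surface sample per pixel and the grid triangulation used by classic geometry images (an assumption for illustration, not a detail from the article), the hypothetical `omage_capacity` helper below computes upper bounds on stored values, vertices, and triangles for a few resolutions.

```python
def omage_capacity(res: int, channels: int = 12) -> dict:
    """Upper bounds for a res x res Object Image, assuming one surface sample
    per pixel and two triangles per 2x2 pixel block (illustrative assumption)."""
    return {
        "stored_values": res * res * channels,  # floats in the whole image
        "max_vertices": res * res,              # at most one vertex (and one color sample) per pixel
        "max_triangles": 2 * (res - 1) ** 2,    # two per 2x2 block of pixels
    }


for res in (64, 128, 256):
    print(res, omage_capacity(res))
# 64 {'stored_values': 49152, 'max_vertices': 4096, 'max_triangles': 7938}
# 128 {'stored_values': 196608, 'max_vertices': 16384, 'max_triangles': 32258}
# 256 {'stored_values': 786432, 'max_vertices': 65536, 'max_triangles': 130050}
```

Under these assumptions, even a fully occupied 64x64 image holds at most 4,096 surface and color samples and 7,938 triangles, and each doubling of the resolution roughly quadruples these limits.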

Despite these challenges, the researchers view their work as an important step toward efficient generation of high-quality 3D assets. They plan to address the existing limitations of the method in future work.

More information and the code will soon be available on the project's website.
