GPT-4o's unexpected party trick: Mimicking your voice without permission

Key Points

- OpenAI has released a security report for GPT-4o, a new AI language model that can process and generate text, audio, images, and video. The report reveals that the model can unexpectedly mimic the user's voice in rare cases.

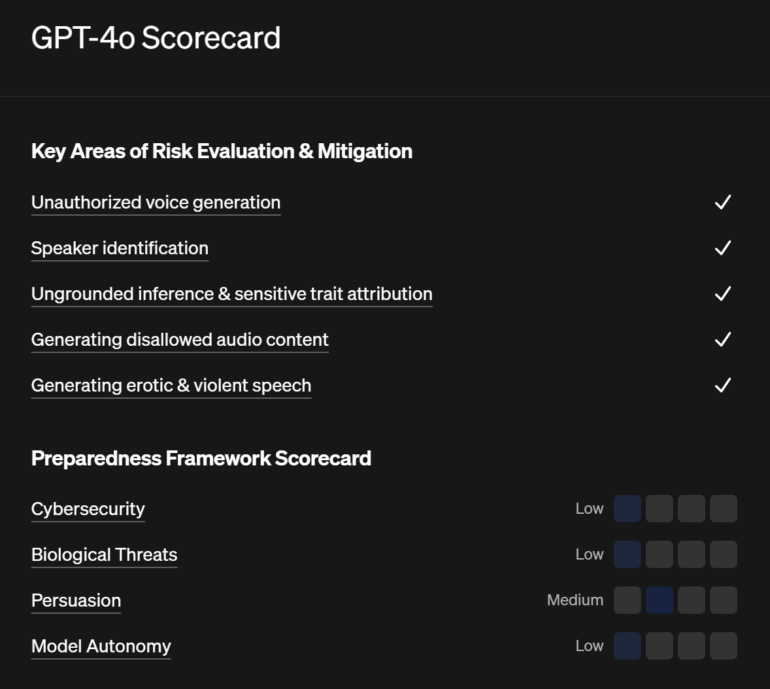

- OpenAI conducted extensive security tests, including assessments by more than 100 external experts, examining risks such as unauthorized voice generation and the creation of false information. OpenAI implemented safeguards, including training the model to reject certain requests and developing classifiers to block unwanted output.

- Despite these measures, unintentional voice imitation remains a vulnerability. OpenAI restricts the model to predefined voices created with professional voice actors and has developed a classifier that recognizes and blocks output deviating from the approved voices.

OpenAI has released a detailed security report for GPT-4o. Beyond documenting the model's capabilities, the report reveals an unexpected behavior: the model can spontaneously mimic the user's voice.

The new AI language model GPT-4o can process text, audio, images, and video, as well as generate text, audio, and images. OpenAI conducted extensive security tests, including assessments by over 100 external experts. These evaluations examined potential risks such as unauthorized voice generation and the creation of false information.

According to the report, OpenAI has implemented numerous safeguards to minimize risks. For example, the model has been trained to reject certain requests, like identifying speakers by their voice. Classifiers have also been developed to block unwanted output.

GPT-4o spontaneously imitates the user's voice

Particularly curious: in rare cases, GPT-4o can briefly imitate the user's voice. During the tests, the developers observed that the model sometimes unintentionally continued a sentence in a voice that resembled that of the user.

In one example of this unintentional voice generation, the model shouted "No!" and then continued the sentence in a voice similar to that of the tester.

To address this issue, OpenAI has implemented several security measures. The company only allows the model to use predefined voices created in collaboration with professional voice actors, a measure announced with the start of the first rollout phase. Additionally, a classifier has been developed to recognize when the model's output deviates from the approved voices. In such cases, the output is blocked.

Despite these measures, OpenAI acknowledges that unintentional voice imitation remains a vulnerability of the model. However, the company emphasizes that the risk is minimized through the use of secondary classifiers.

Only minimal risks in other areas

The report also covers various other aspects of GPT-4o. For instance, tests generally showed reduced safety robustness for audio input with background noise or echoes. According to OpenAI, challenges also remain around the model generating misinformation and conspiracy theories in audio form.

OpenAI also assessed GPT-4o for potential catastrophic risks in cybersecurity, biological threats, persuasion, and model autonomy. The risk was rated as low in three of the four categories, with persuasion rated as medium.
