ChatGPT's automatic prompt rewriting reduces DALL-E 3's performance, study finds

Research from the University of California, Berkeley shows that automatic prompt revision by a large language model significantly reduces the quality of images generated by DALL-E 3. This could limit users' ability to take full advantage of the model's capabilities.

UC Berkeley researchers conducted an online experiment with 1,891 participants to examine how automatic prompt rephrasing by a large language model (LLM) affects the image quality of DALL-E 3.

The results showed that LLM-based prompt revision cut DALL-E 3's advantage over DALL-E 2 by nearly 58 percent. DALL-E 3 users with prompt rewriting still outperformed DALL-E 2 users, but the improvement was smaller than when prompts written for DALL-E 2 were passed directly to DALL-E 3.
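To make the "nearly 58 percent" figure concrete, here is a minimal Python sketch of how a reduction of this kind can be computed. It is an illustration only: the function name `advantage_lost` and the three similarity scores are hypothetical, chosen so the arithmetic lands on 58 percent, and are not taken from the study. The study's actual metric and estimation method may differ.

```python
def advantage_lost(baseline: float, unrevised: float, revised: float) -> float:
    """Share of the newer model's gain over the baseline that disappears
    once prompts are automatically revised.

    All arguments are mean image-to-target similarity scores (higher is better).
    """
    gain_unrevised = unrevised - baseline  # newer model's gain without revision
    if gain_unrevised <= 0:
        raise ValueError("the newer model must beat the baseline without revision")
    gain_revised = revised - baseline      # newer model's gain with revision
    return 1 - gain_revised / gain_unrevised


# Hypothetical scores, NOT data from the study:
# baseline = older model, unrevised = newer model with raw prompts,
# revised = newer model with LLM-rewritten prompts.
share = advantage_lost(baseline=0.50, unrevised=0.60, revised=0.542)
print(f"{share:.0%} of the advantage is lost")
```

In this made-up case the newer model still beats the baseline with revision (0.542 versus 0.50), but keeps only about 42 percent of the gain it shows without revision, which mirrors the pattern the article describes.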

The study suggests AI-assisted prompt rewrites in their current form are not a cure-all. They may even hinder users from realizing a model's full potential if they don't align with the end user's goals. OpenAI uses ChatGPT's "prompt transformation" as a safety and moderation feature.

People prompt advanced AI more thoroughly

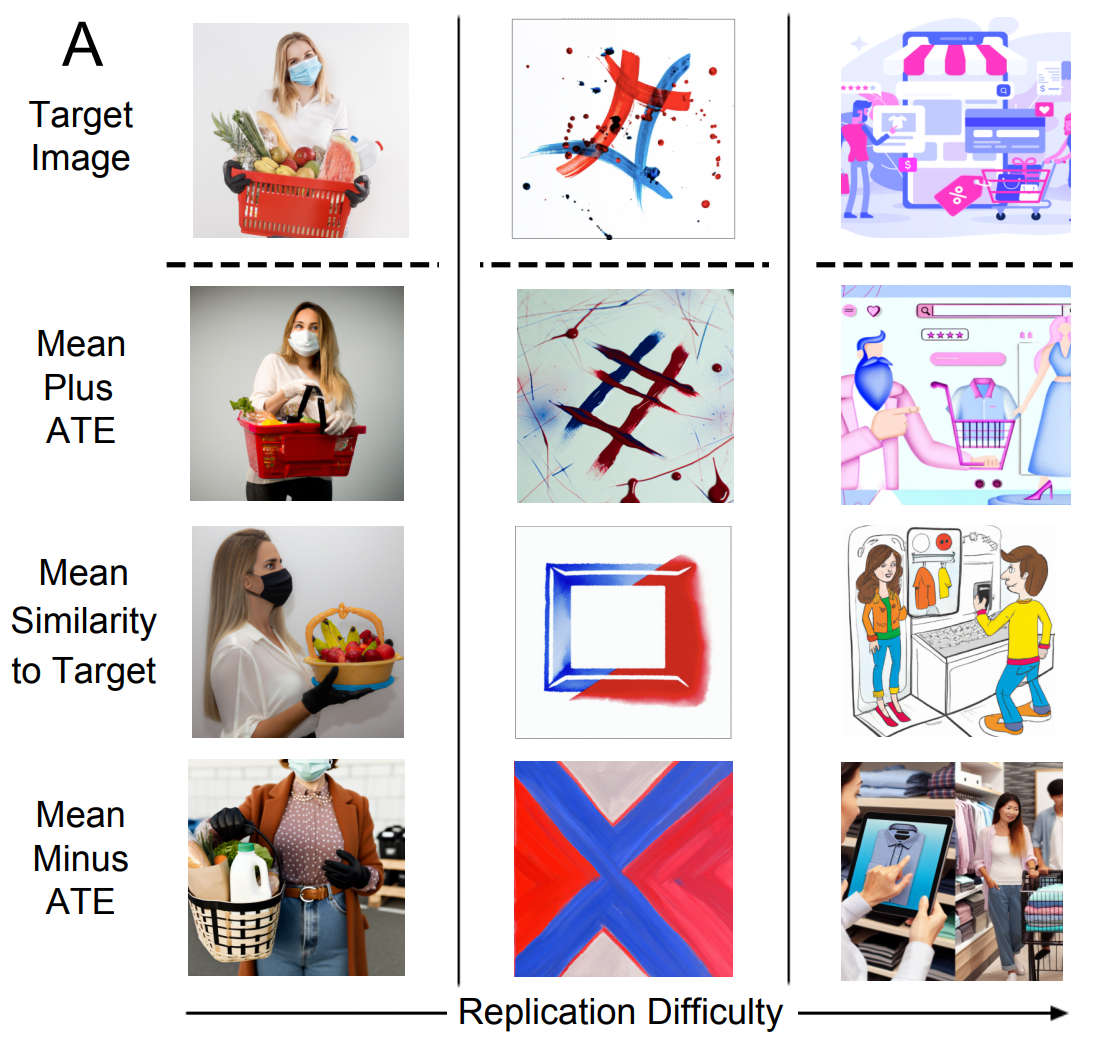

In the experiment, each participant was randomly assigned to one of three text-to-image models: DALL-E 2, the more capable DALL-E 3, or a version of DALL-E 3 with automatic prompt revision. The task was to write ten consecutive prompt attempts to reproduce a target image as accurately as possible.

Results showed DALL-E 3 outperformed DALL-E 2, with a significant difference in how closely the generated images matched the targets.

The researchers identified two main reasons for the performance gap between DALL-E 2 and DALL-E 3: the improved technical capabilities of DALL-E 3 and users adapting their prompting strategies. Notably, DALL-E 3 users wrote longer prompts that were more semantically similar to one another and contained more descriptive words, even though they didn't know which model they were using.

The researchers suspect an interplay could develop as models advance: As the models improve, people continuously adapt their prompts to best utilize the latest model's capabilities. This suggests newer models won't make prompting obsolete. Instead, prompting will be the mechanism by which people tap into new models' capabilities.
