At its first developer conference, OpenAI confirmed its ambition to build a new chatbot ecosystem.

The most important news is undoubtedly GPT-4 Turbo, along with numerous API innovations such as assistants, and the custom ChatGPTs that users can feed with their own data and program in natural language. But beyond these two major announcements, OpenAI had more in store.

Speech to text: Whisper v3 available as open source

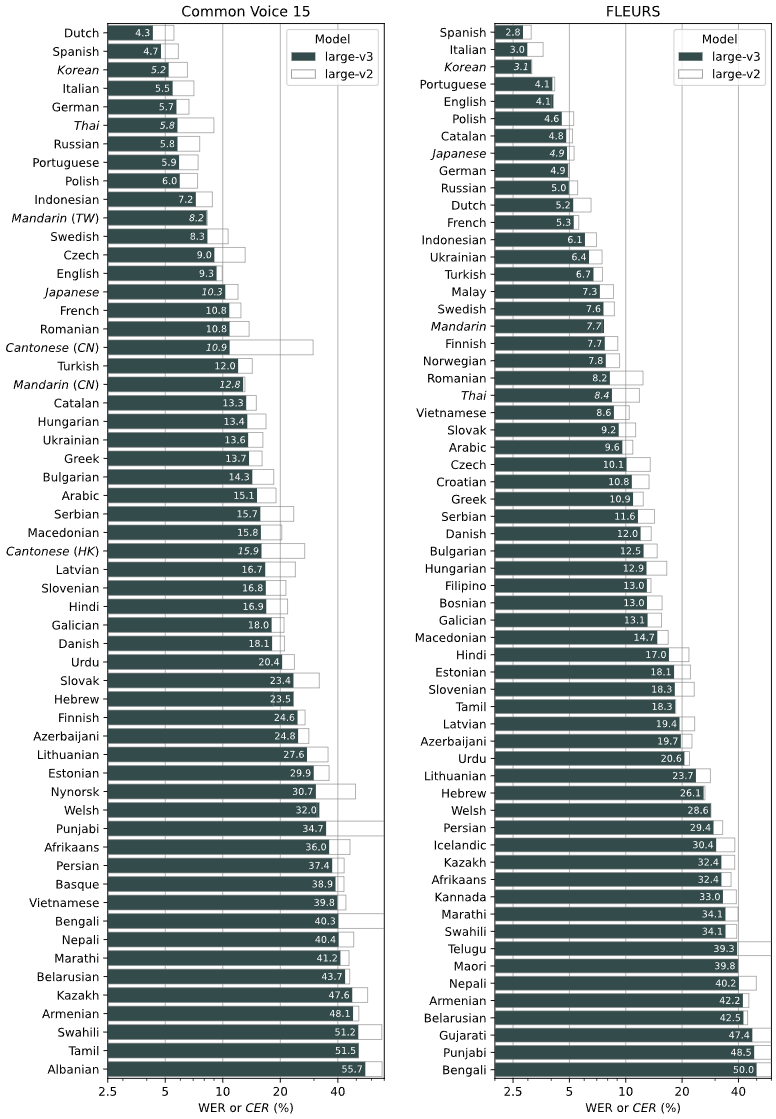

Whisper is OpenAI's open-source speech-to-text model. The new v3 model was trained on 1 million hours of weakly labeled audio and 4 million hours of pseudo-labeled audio collected with Whisper v2. Cantonese has also been added as a language. In benchmarks, v3 significantly outperforms its predecessor in terms of error rate.

Performance varies by language, but in general, the largest version of Whisper v3 has an error rate of less than 60 percent for Common Voice 15 and Fleurs, which OpenAI says is a 10 to 20 percent error reduction over Whisper large-v2.
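
To make the error-rate figures more concrete, here is a minimal sketch of how a word error rate and a relative error reduction can be computed. It assumes the benchmark metric is the standard word error rate (word-level edit distance divided by the number of reference words) and that the "10 to 20 percent" figure is a relative reduction; the article does not spell out either point. The function names are illustrative and not part of Whisper.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution, or match if cost is 0
            ))
        prev = curr
    return prev[-1] / len(ref)


def relative_error_reduction(old_rate: float, new_rate: float) -> float:
    """Fraction by which the error rate dropped relative to the old rate."""
    if old_rate <= 0:
        raise ValueError("old_rate must be positive")
    return (old_rate - new_rate) / old_rate
```

Under these assumptions, a model that goes from a 10 percent to an 8 percent error rate on a given language shows a relative error reduction of about 20 percent.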

Whisper v3 is available on GitHub, with an OpenAI API implementation coming soon.

Text to speech: OpenAI's synthetic voices sound human

OpenAI also announced a model for the opposite direction: a text-to-speech model that could give ElevenLabs and its competitors a headache. With the TTS model, you can have your texts read aloud by one of six human-sounding synthetic voices. The voices are familiar from the ChatGPT application and have credible intonation.

OpenAI's TTS is available in a high-quality version and a version trimmed for speed. The company charges $0.015 per 1,000 spoken characters. That is significantly cheaper than ElevenLabs, which charges up to $0.30 per 1,000 characters once you exceed its flat rate.
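
The pricing above translates into a simple per-character calculation. The following sketch uses the $0.015 and $0.30 figures quoted in this article; it assumes that every character of the input, including whitespace and punctuation, is billed, which the article does not confirm. The function and constant names are hypothetical.

```python
# Prices per 1,000 characters as quoted in the article (US dollars).
OPENAI_TTS_PRICE_PER_1K = 0.015
ELEVENLABS_OVERAGE_PRICE_PER_1K = 0.30  # upper bound beyond the flat rate


def tts_cost_usd(text: str, price_per_1k: float = OPENAI_TTS_PRICE_PER_1K) -> float:
    """Estimate the cost of reading `text` aloud.

    Assumption: every character counts toward billing, including spaces.
    """
    return len(text) / 1000 * price_per_1k


if __name__ == "__main__":
    sample = "x" * 100_000  # stand-in for a text of 100,000 characters
    print(f"OpenAI TTS:         ${tts_cost_usd(sample):.2f}")
    print(f"ElevenLabs overage: ${tts_cost_usd(sample, ELEVENLABS_OVERAGE_PRICE_PER_1K):.2f}")
```

For a text of 100,000 characters, this works out to roughly $1.50 at OpenAI's rate, compared with up to $30 at the quoted ElevenLabs overage rate.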

GPT-4 fine-tuning is costly

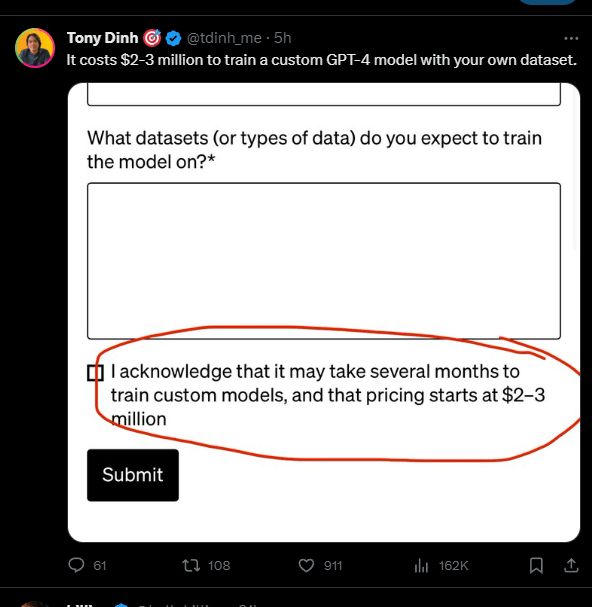

Also at the developer conference, OpenAI announced a "very limited" program for initial GPT-4 fine-tuning projects. Fine-tuning has been available for GPT-3.5 for some time and can be done directly in the web interface.

However, GPT-4 fine-tuning appears to be much more involved, with prices starting at two million US dollars and a required data volume of at least one billion tokens in the customer company's database. OpenAI offers fine-tuning only to selected companies, which then have exclusive access to their model.

OpenAI protects against copyright infringement

Following in the footsteps of Microsoft and Google, OpenAI has announced a form of legal protection against copyright claims over AI-generated content. Companies that are sued under copyright law for content generated with OpenAI models can have OpenAI reimburse them for the costs of a potential lawsuit. This "copyright shield" applies only to ChatGPT Enterprise and the developer platform. It does not cover standard ChatGPT.

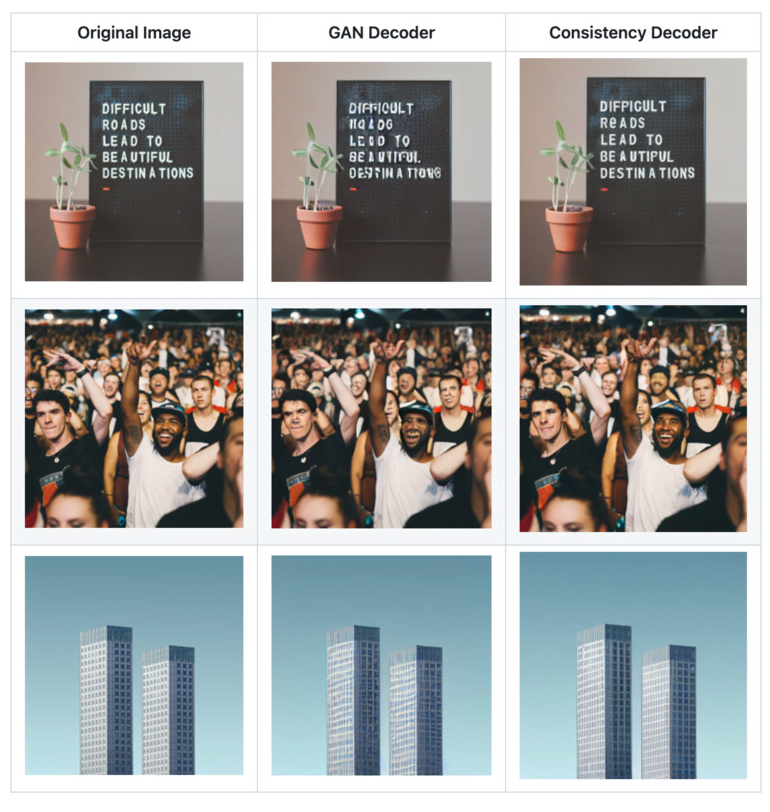

New Stable Diffusion Decoder

The OpenAI Consistency Decoder is an open-source upgrade to the decoder used in the Stable Diffusion Variational Autoencoder (VAE). It uses consistency training to improve image generation, especially for text, faces, and straight lines. It is fully compatible with the Stable Diffusion 1.0+ VAE. Improvements with the new decoder can be viewed here or here.

ChatGPT is more up-to-date and gets more frequent updates

With the GPT-4 Turbo model, ChatGPT's knowledge will also be updated to April 2023. But that's not all: According to OpenAI CEO Sam Altman, the most annoying thing about ChatGPT is that it is not up to date, and OpenAI agrees. That is why the company is planning more regular content updates in the future.

Payment for GPTs is based on ChatGPT revenue

A big announcement at the developer conference was "the GPTs", ChatGPT instances that users can customize and optimize for their purposes and then offer on a marketplace. OpenAI announced that successful chatbot publishers will also be paid, but how exactly was still unclear.

Altman told The Verge's Alex Heath that the initial plan is to pay publishers a cut of ChatGPT's subscription revenue. There will be different tiers based on the number of chatbot users, as well as special bonuses for categories. Altman did not give specific numbers and expects the whole thing to "evolve a lot."

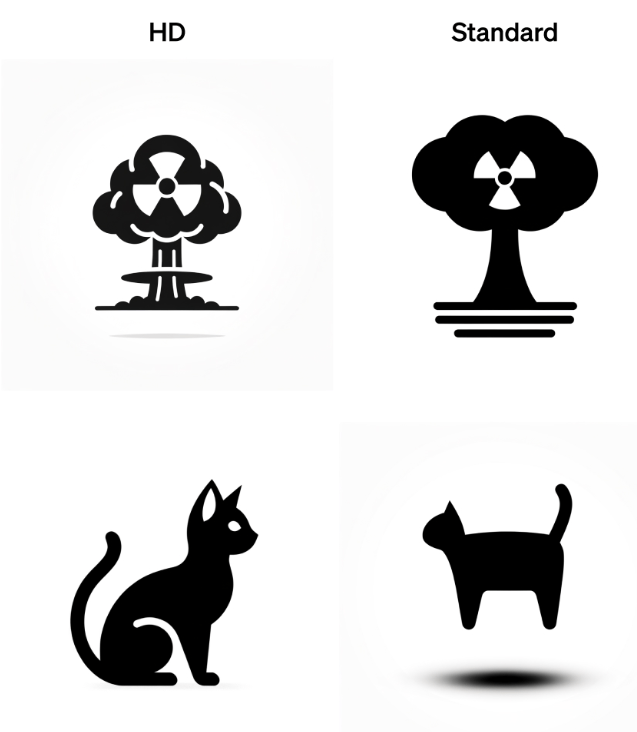

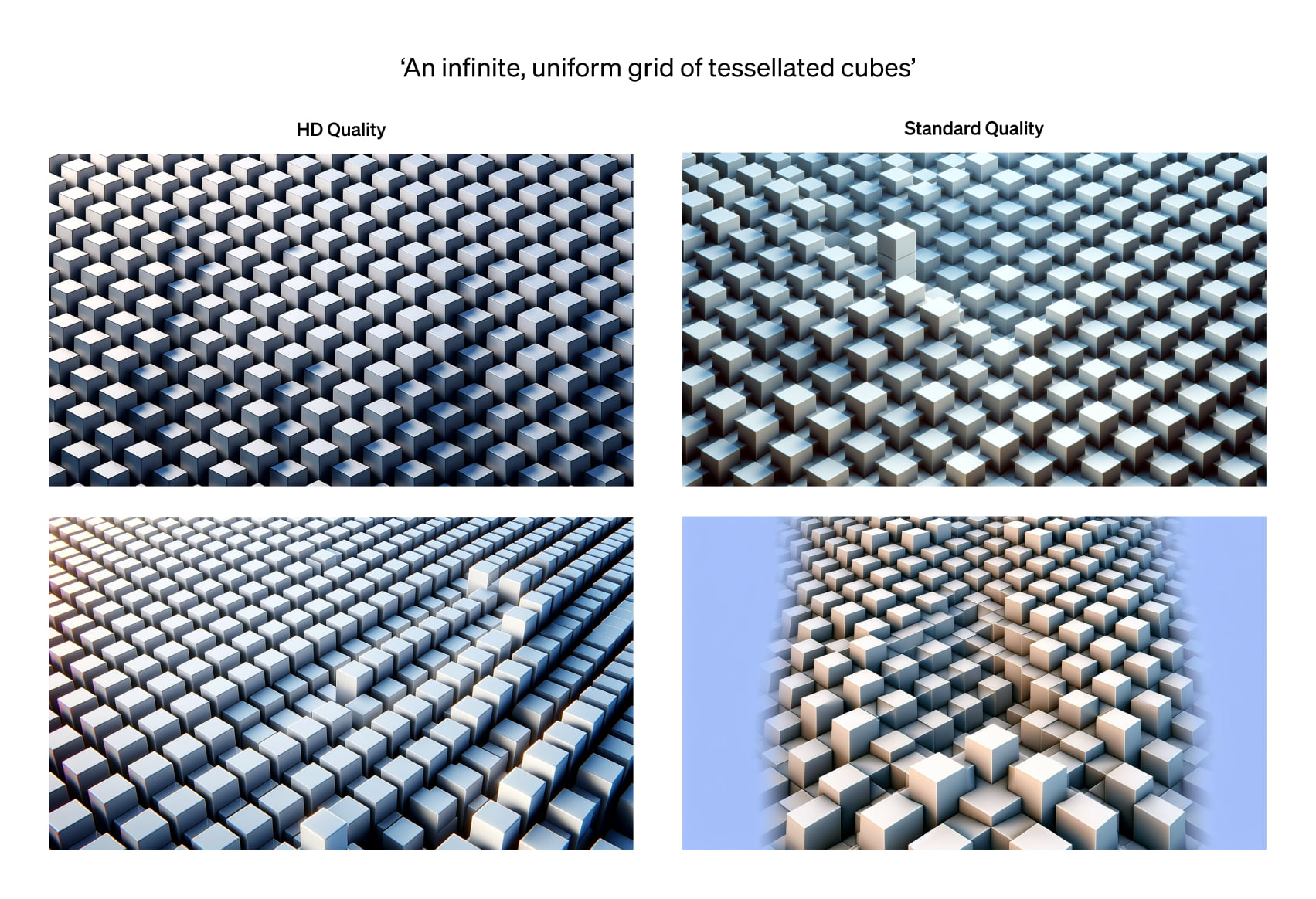

Natural and HD: DALL-E 3 has two additional modes

In its developer cookbook, OpenAI shows some details about DALL-E 3 when the model is driven via the API. DALL-E 3 offers two basic style modes, "natural" and "vivid." As the names suggest, "natural" produces more natural, realistic images, while "vivid" produces hyperrealistic, dramatic ones. DALL-E 3 is set to "vivid" in ChatGPT. "Natural" is supposed to be closer to DALL-E 2 and is suitable for photos, for example.

There are also two quality modes, "HD" and "Standard", the latter being the one known from ChatGPT. HD is supposed to show more detail and follow the prompt more accurately. However, HD is pricier and takes an average of ten seconds longer to generate. Still, developers may find it interesting that they can achieve slightly better quality in their apps than what DALL-E 3 offers in ChatGPT.
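
As an illustration of how the two style modes and two quality modes might be combined in an API call, here is a minimal sketch using the OpenAI Python SDK (version 1 or later). The parameter names `style` and `quality` and the values shown reflect the SDK as we understand it and should be checked against the current API reference. The helper names `build_image_request` and `generate_image_url`, the image size, and the example prompt are hypothetical.

```python
ALLOWED_STYLES = {"vivid", "natural"}
ALLOWED_QUALITIES = {"standard", "hd"}


def build_image_request(prompt: str, style: str = "vivid", quality: str = "standard") -> dict:
    """Assemble keyword arguments for an image request, validating the modes."""
    if style not in ALLOWED_STYLES:
        raise ValueError(f"style must be one of {sorted(ALLOWED_STYLES)}")
    if quality not in ALLOWED_QUALITIES:
        raise ValueError(f"quality must be one of {sorted(ALLOWED_QUALITIES)}")
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "style": style,
        "quality": quality,
        "size": "1024x1024",  # assumed size for this sketch
        "n": 1,
    }


def generate_image_url(prompt: str, style: str = "vivid", quality: str = "standard") -> str:
    """Send the request; needs the `openai` package and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported here so the helper above works without it

    client = OpenAI()
    response = client.images.generate(**build_image_request(prompt, style, quality))
    return response.data[0].url


if __name__ == "__main__":
    # Only builds and prints the request; no network call is made here.
    print(build_image_request("a lighthouse at dusk", style="natural", quality="hd"))
```

Separating request construction from the network call keeps the mode validation easy to check locally before any paid generation is triggered.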

ChatGPT still has incredible user numbers

ChatGPT has achieved incredible growth in less than a year, as OpenAI CEO Sam Altman reiterated at the developer conference: the platform currently has 100 million weekly active users.

In addition, two million developers have access to the API, and their applications are used by millions of users as well. According to Altman, 92 percent of Fortune 500 companies are using OpenAI technologies. It is fair to say that OpenAI is dominating generative AI right now.

According to Similarweb, ChatGPT's growth slowed down a bit during the summer months. Given the numbers above, this does not diminish the overall success of the platform.