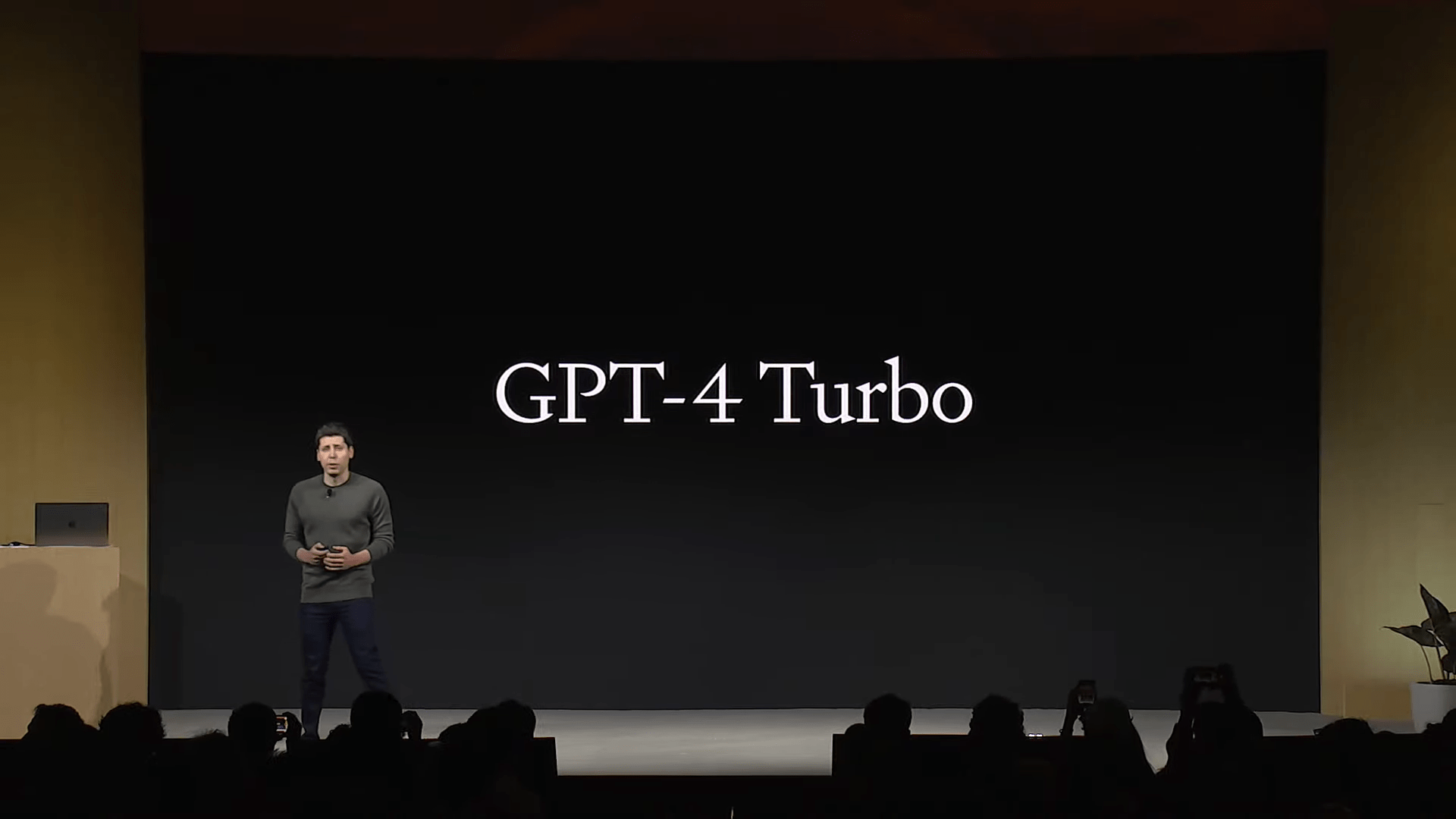

At its developer conference, OpenAI announced GPT-4 Turbo, a cheaper, faster and smarter GPT-4 model. Developers get plenty of new API features at a much lower cost.

The new GPT-4 Turbo model is now available as a preview via the OpenAI API and directly in ChatGPT. According to OpenAI CEO Sam Altman, GPT-4 Turbo is "much faster" and "smarter".

The release of Turbo also explains the rumors about an updated ChatGPT knowledge cutoff: GPT-4 Turbo's knowledge extends to April 2023, whereas the original ChatGPT only had knowledge up to September 2021. Altman said that OpenAI plans to update the model more regularly in the future.

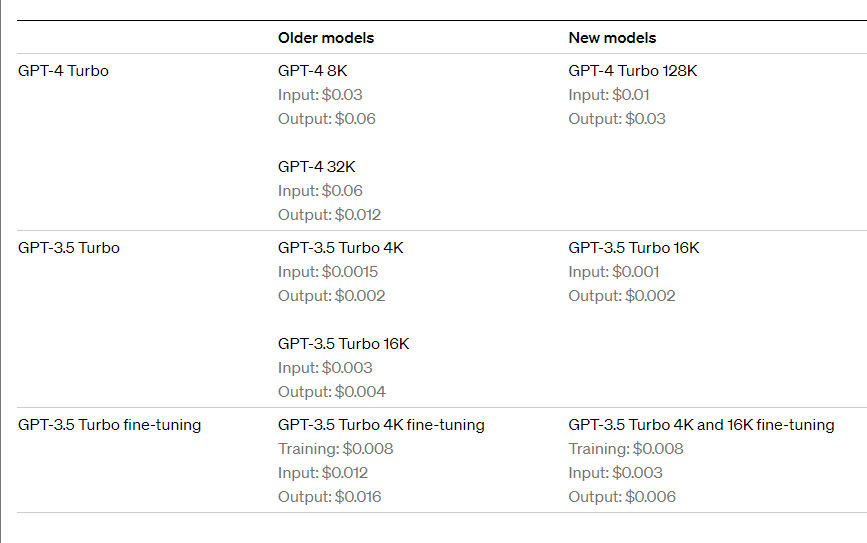

Probably the biggest highlight for developers is the significant price reduction that comes with GPT-4 Turbo: input tokens (the text the model reads) cost a third as much as with GPT-4, and output tokens (the text it generates) cost half as much.

For input tokens, GPT-4 Turbo costs $0.01 per 1,000 tokens, compared to $0.03 for GPT-4; for output tokens, it costs $0.03 per 1,000 tokens, compared to $0.06 for GPT-4. It is also much cheaper than GPT-4 32K, even though it has a context window four times as large (see below).
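The price difference is easy to check with a little arithmetic. The following Python sketch uses only the per-1,000-token prices quoted above; the dictionary keys are illustrative labels rather than official API model IDs, and the request size is hypothetical.

```python
# Per-1,000-token prices (USD) as quoted in the article.
# The keys are illustrative labels, not official API model IDs.
PRICES_PER_1K = {
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one request with the given token counts."""
    price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * price["input"] + (output_tokens / 1000) * price["output"]


if __name__ == "__main__":
    # Hypothetical request: 10,000 input tokens and 1,000 output tokens.
    for model in PRICES_PER_1K:
        print(f"{model}: ${request_cost(model, 10_000, 1_000):.2f}")
```

For this hypothetical request, GPT-4 Turbo comes to $0.13 versus $0.36 for GPT-4, a little over a third of the price, because most of the tokens are input tokens.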

Another highlight for developers: OpenAI is extending its API to include image input, DALL-E 3 image generation, and text-to-speech. The "gpt-4-vision-preview" model can analyze images, DALL-E 3 generates them, and a new text-to-speech model creates human-like speech from text.

OpenAI is also working on an experimental GPT-4 fine-tuning program and a custom model program for organizations with large proprietary datasets. GPT-3.5 Turbo fine-tuning will be extended to the 16K-context version.

GPT-4 Turbo has a much larger context window

Probably the most important technical change is the larger context window, i.e. the number of tokens (words or word fragments) that GPT-4 Turbo can process at once and take into account when generating output. Previously, the largest GPT-4 context window was 32,000 tokens; GPT-4 Turbo's is 128,000 tokens.

According to Altman, this is equivalent to up to 100,000 words, or 300 pages of a standard book. He also said that the 128K GPT-4 Turbo model is "much more accurate" at handling long contexts.
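The token-to-word conversion behind these figures can be sketched as follows. It assumes OpenAI's rough rule of thumb for English text that 100 tokens correspond to about 75 words, plus a hypothetical 300 words per book page; both ratios vary with language, tokenizer, and typesetting.

```python
# Rough rule of thumb for English text: 100 tokens ~= 75 words.
WORDS_PER_TOKEN = 0.75
# Assumption for illustration only: a printed book page holds ~300 words.
WORDS_PER_PAGE = 300


def context_capacity(tokens: int) -> tuple[int, int]:
    """Estimate how many words and book pages fit into a context window."""
    words = round(tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages


if __name__ == "__main__":
    for label, tokens in [("GPT-4 32K", 32_000), ("GPT-4 Turbo", 128_000)]:
        words, pages = context_capacity(tokens)
        print(f"{label}: ~{words:,} words, ~{pages} pages")
```

The estimate of roughly 96,000 words lands close to the 100,000 words Altman cited; the page count depends heavily on the assumed words per page.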

OpenAI also confirmed the GPT-4 All model, which is available now and had already been spotted in the wild before the conference. Depending on the user's request, the All model automatically switches between tools such as Advanced Data Analysis for program code and DALL-E 3 for image generation. Previously, users had to select the appropriate mode manually before entering a prompt.

The official launch of GPT-4 Turbo is also notable because of an earlier rumor: OpenAI had reportedly abandoned "Arrakis", a sparse GPT-4-level model that was supposed to be more efficient but didn't work out, in favor of Turbo.

Assistants API and Copyright Protection

OpenAI also introduced the Assistants API to help developers build assistive AI functionality into their applications. The API offers persistent, infinitely long threads, which hand conversation-state management off to OpenAI and let developers work around the limits of the context window.
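The article does not say how threads handle conversations that outgrow the context window. One common strategy, sketched below in Python purely as an illustration and not as OpenAI's implementation, is to store the full history but send only the newest messages that fit a token budget. The `Thread` class, its methods, and the word-count "tokenizer" are all hypothetical.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    """Crude stand-in tokenizer (hypothetical): counts whitespace-separated
    words. A real system would use the model's own tokenizer."""
    return len(text.split())


@dataclass
class Thread:
    """Hypothetical persistent thread: keeps every message, but only sends
    the most recent messages that fit into the model's context window."""
    context_limit: int
    messages: list[str] = field(default_factory=list)

    def add(self, message: str) -> None:
        self.messages.append(message)

    def context_window(self) -> list[str]:
        """Return the newest messages whose total token count fits the limit."""
        selected: list[str] = []
        used = 0
        for message in reversed(self.messages):
            cost = count_tokens(message)
            if used + cost > self.context_limit:
                break
            selected.append(message)
            used += cost
        return list(reversed(selected))  # restore chronological order


if __name__ == "__main__":
    thread = Thread(context_limit=6)
    for text in ["one two three", "four five", "six seven eight", "nine"]:
        thread.add(text)
    print(len(thread.messages))     # 4: the full history is kept
    print(thread.context_window())  # ['four five', 'six seven eight', 'nine']
```

Dropping the oldest messages is the simplest policy; a production system might instead summarize them or retrieve only the relevant ones.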

Assistants have access to new tools: Code Interpreter, which writes and executes Python code in a sandboxed environment; Retrieval, which enriches the assistant with external knowledge; and function calling, which allows assistants to call custom functions.
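Function calling generally works by describing a custom function to the model with a JSON Schema; the model then returns the function name plus JSON-encoded arguments, and the application runs the function and passes the result back. The Python sketch below shows only the application side of that loop. The `get_order_status` function, the registry, and the simulated tool call are hypothetical, and no request is sent to OpenAI; the tool definition follows the general name/description/parameters layout of OpenAI's function tools, but the official API reference is the authority on exact field names.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical custom function the assistant may call.
    A real app would query its own database; this returns canned data."""
    return {"order_id": order_id, "status": "shipped"}


# JSON Schema description of the function, in the general style of
# OpenAI function tools (name, description, parameters).
ORDER_STATUS_TOOL = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}

# Maps function names the model may request to local callables.
REGISTRY = {"get_order_status": get_order_status}


def dispatch(tool_call: dict) -> str:
    """Run the requested function and return its result as a JSON string,
    ready to be sent back to the model as the tool output."""
    func = REGISTRY.get(tool_call["name"])
    if func is None:
        raise ValueError(f"unknown function: {tool_call['name']}")
    kwargs = json.loads(tool_call["arguments"])  # arguments arrive as JSON text
    return json.dumps(func(**kwargs))


if __name__ == "__main__":
    # Simulated model output; no API call is made here.
    simulated_call = {"name": "get_order_status", "arguments": '{"order_id": "A123"}'}
    print(dispatch(simulated_call))
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed.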

Video: OpenAI

The Assistants API is built on the same capabilities as OpenAI's new GPTs. The beta is available now, and developers can try it in the Assistants Playground without writing any code.

According to Altman, assistants are a first step toward full-fledged AI agents and will gain new capabilities over time.

Another new service is "Copyright Shield": OpenAI will defend customers and pay the costs incurred if they face legal claims of copyright infringement over content generated with OpenAI models. The protection applies to generally available features of ChatGPT Enterprise and the developer platform.

Microsoft and Google have announced similar protections. These guarantees signal the big AI companies' confidence that they can win current and future copyright lawsuits.

You can watch the full keynote in the video below.