OpenAI creates a new standard in AI art with DALL-E 2. The multimodal model generates impressive, versatile and creative motifs and can modify existing images to match the style. As a description one sentence is enough, several sentences work even better and create a more detailed picture.

In January 2021, OpenAI unveiled DALL-E, a multimodal AI model that generates images to text input, which are then sorted by quality by the CLIP model developed in parallel.

The results were impressive and triggered a whole series of experiments in the following months, combining CLIP with Nvidia's StyleGAN, for example, to also generate or modify images according to text descriptions.

Then, in December 2021, OpenAI reported back with GLIDE, a multimodal model that uses so-called diffusion models. Diffusion models gradually add noise to images during their training and then learn to reverse that process. After AI training, the model can then generate arbitrary images from pure noise with objects seen during training.

DALL-E 2 relies on GLIDE and CLIP

GLIDE's results outperform DALL-E and also leave other models behind. Unlike DALL-E, however, GLIDE does not rely on CLIP. A corresponding prototype that combined CLIP and GLIDE did not achieve the quality of GLIDE without CLIP.

Now OpenAI demonstrates DALL-E 2, which relies on an extended diffusion model in the style of GLIDE, but combines it with CLIP. For this, CLIP does not generate an image from a text description, but an image embedding - a numerical image representation.

The diffusion decoder then generates an image from this representation. In this, DALL-E 2 differs from its predecessor, which used CLIP exclusively to filter the generated results.

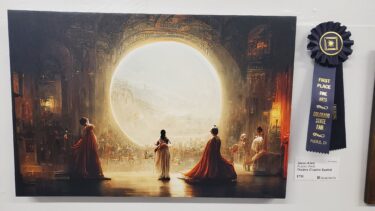

The images produced are again impressive and clearly surpass the results of DALL-E and GLIDE.

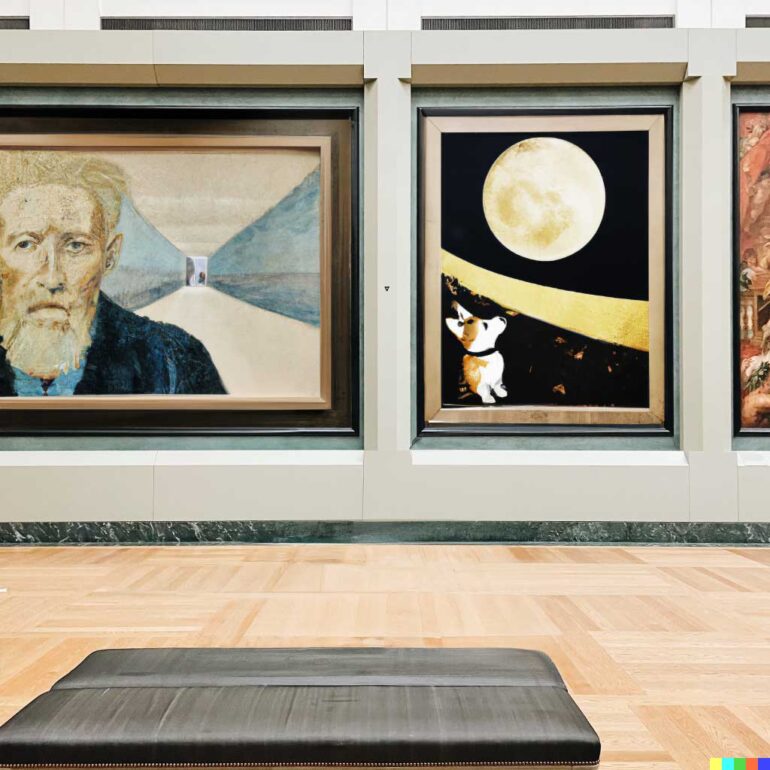

The integration of CLIP into DALL-E 2 also allows OpenAI to more precisely control image generation through text. This allows certain elements to be added to an image, such as a flamingo swimming hoop into a pool or a corgi into or onto an image.

Particularly impressive is DALL-E 2's ability to adapt the result to the immediate environment: The newly added corgi adjusts to the respective painting style or becomes photorealistic if it is supposed to sit on a bench in front of the painting.

The generated images are also upscaled to 1,024 by 1,024 pixels by two additional models. Thus, DALL-E 2 achieves an image quality that could enable use in certain professional contexts.

DALL-E 2 only available on a limited basis for the time being

"DALL-E 2 is a research project which we currently do not make available in our API," OpenAI's blog post states. The organization wants to explore the limits and possibilities of DALL-E 2 with a select group of users.

Interested parties can apply for DALL-E 2 access on the website, the official market launch is planned for summer. OpenAI also pursued a similar approach with the release of GPT-3, but the speech AI is now available without a waiting list.

The ability of DALL-E 2 to generate violent, hateful or NSFW images is restricted. For this, explicit content was removed from the training data. DALL-E 2 is also said to be unable to generate photorealistic faces. Users must adhere to OpenAI's Content Policy, which prohibits the use of DALL-E 2 for numerous purposes.

DALL-E 2 is designed to help people express themselves creatively, OpenAI says. The model also helps understand advanced AI systems - which OpenAI says is critical to developing AI "for the benefit of humanity."

Further information is available on the DALL-E 2 GitHub page. For more examples, see OpenAI's blog post.