AI models struggle with complex table questions, lagging far behind humans in new benchmark

Researchers from Beihang University in China have developed a new dataset called TableBench to evaluate the performance of AI models when answering complex questions about tabular data. The benchmark reveals that even advanced systems perform significantly worse than humans in this area.

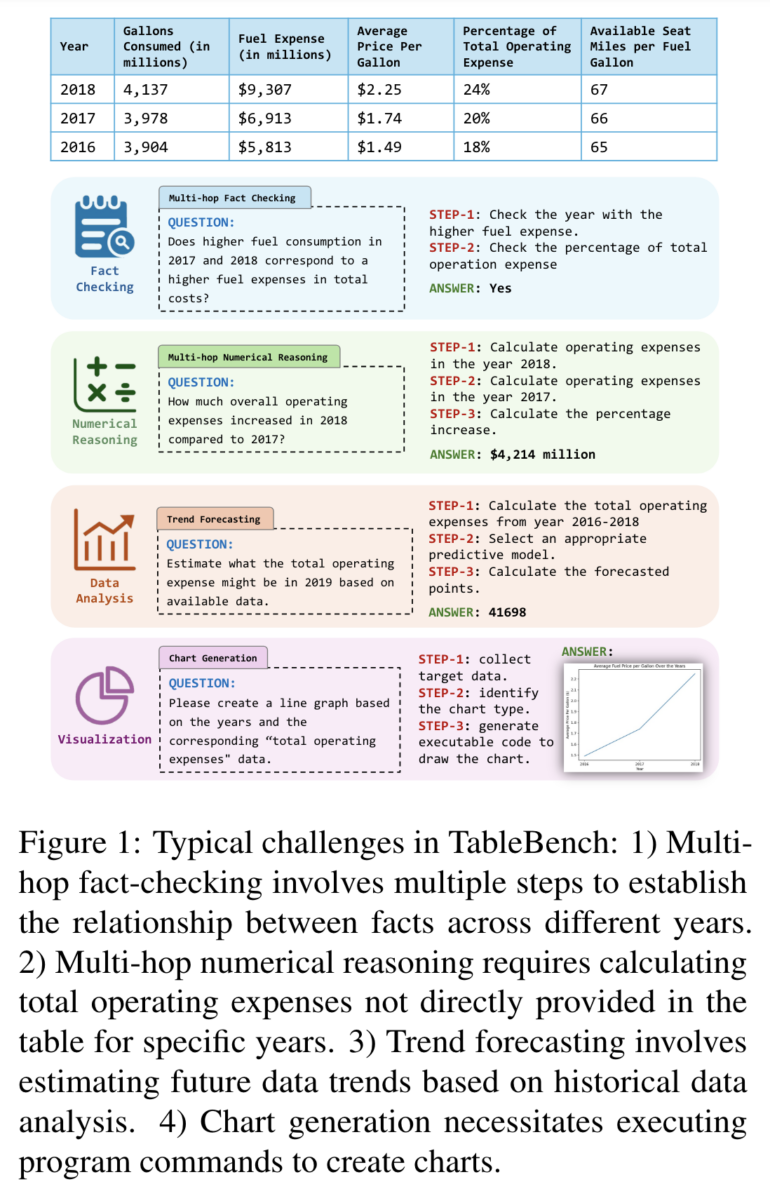

TableBench comprises 886 question-answer pairs from 18 different categories, covering a wide range of tasks such as fact checking, numerical calculations, data analysis, and visualization. The dataset aims to bridge the gap between academic benchmarks and real-world application scenarios, with the average number of "thinking steps" required to answer a question being 6.26 - significantly higher than comparable datasets.

The research team evaluated over 30 large language models on TableBench, including both open-source and proprietary systems. Even the powerful GPT-4o model only achieved around 54 % of human performance, highlighting the considerable room for improvement needed for AI models to meet the requirements of real-world applications.

Microsoft is working on solutions

Alongside TableBench, the researchers also presented TableInstruct, a training dataset with around 20,000 examples. They used it to train their own model called TABLELLM, which achieved a performance comparable to GPT-3.5.

Microsoft researchers have also recently introduced SpreadsheetLLM, a method that can improve the performance of language models in table processing.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.