RunwayML's Act-One model turns smartphone videos into lifelike facial animations

Key Points

- RunwayML launches Act-One, an AI model that can transfer an actor's performance directly to animated characters. Video and voice recordings are all that's needed, and RunwayML claims that smartphone recordings can do the job.

- The model is said to capture the finest details of the actor's performance and transfer them to various reference images and character designs. According to RunwayML, realistic facial expressions remain intact even when the proportions of the target figure vary.

- In addition to applying it to animated characters, RunwayML also demonstrates its application to photorealistic avatars. In one demo, an actor plays two virtual characters in a scene.

RunwayML has introduced a new AI model called Act-One that aims to streamline the facial animation process. For film production, the technology could have far-reaching implications beyond motion capture.

Traditional facial animation methods often involve complex workflows with specialized equipment. Act-One aims to simplify this by transferring an actor's performance directly to an animated character using only video and voice recordings. According to RunwayML, the model can capture and transfer even subtle details of a performance, and a smartphone is all that is needed to record it.

Demo video for Act-One. | Video: RunwayML

One actor playing multiple roles

RunwayML highlights the flexibility of Act-One. The model can be applied to different reference images and is designed to maintain realistic facial expressions even when the target character's proportions differ from the original video source.

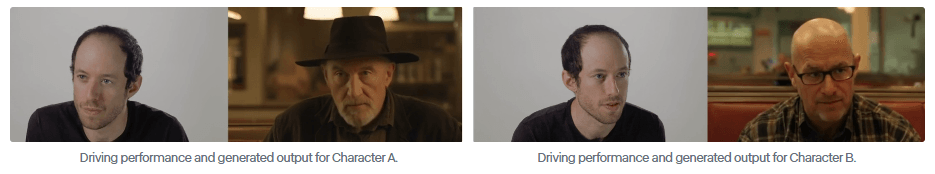

In a demonstration video, RunwayML shows how Act-One transfers a performance from a single input video to different character designs and styles. The company says the model produces cinematic, realistic results and works well with different camera angles and focal lengths.

While the obvious use case is transferring human facial expressions and voice to animated characters for games and animated movies, RunwayML also presents another scenario: applying acting performances to photorealistic avatars.

The company shows how a single actor can play two virtual avatars in a single scene, promising that "you can now create narrative content using nothing more than a consumer grade camera and one actor reading and performing different characters from a script."

Video: RunwayML

RunwayML says access to Act-One is rolling out gradually to users now and will soon be available to everyone.
