Cohere's Embed 3 AI can now search text and images in one unified database

Key Points

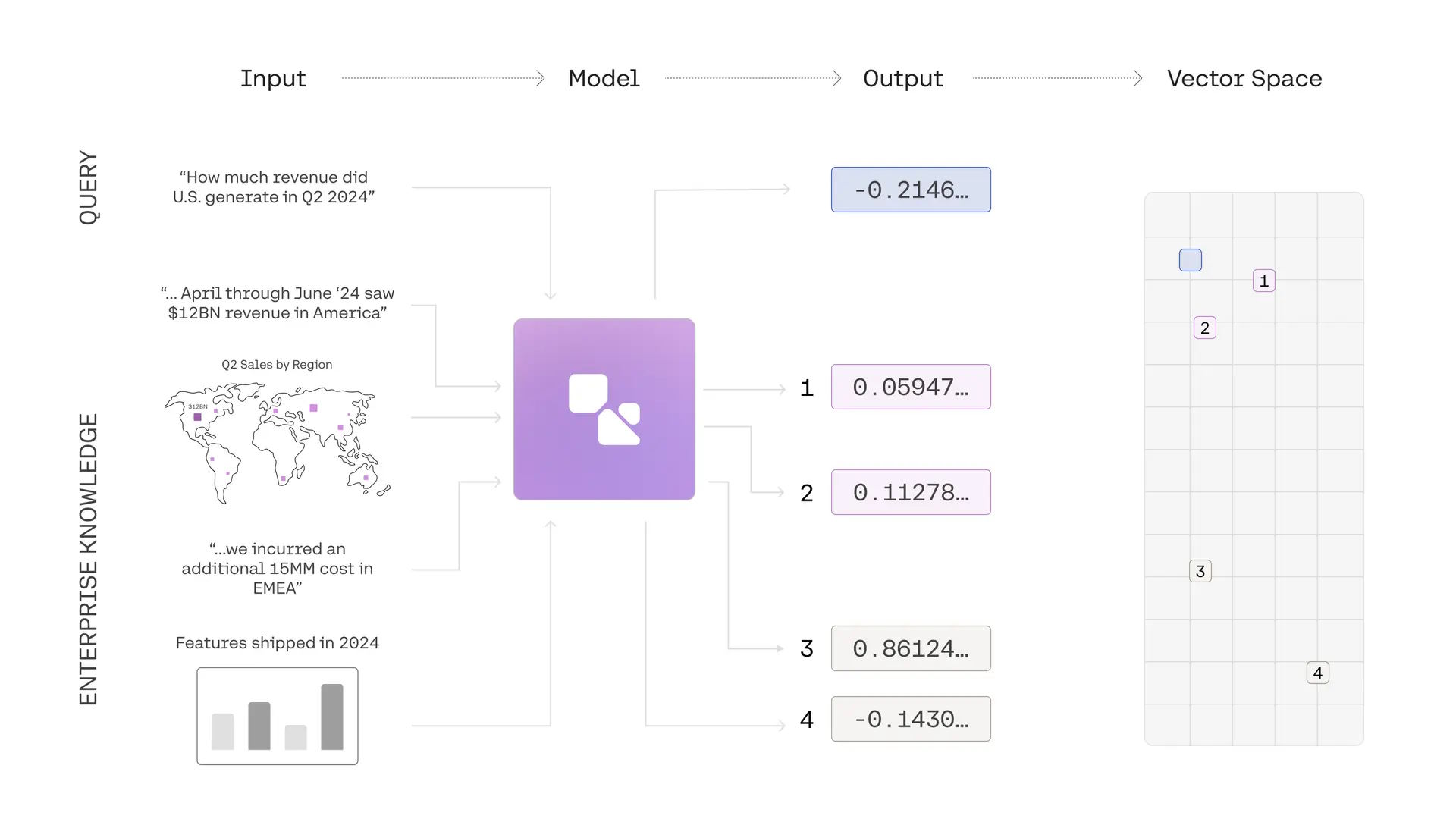

- Cohere has added image processing capabilities to its Embed 3 AI search model, enabling organizations to search both images and text within a single database.

- The update targets organizations that manage large collections of visual content, from product catalogs to technical documentation.

- The system now processes common image formats, including PNG, JPEG, WebP and GIF, with a 5-megabyte size limit per file. The current version handles one image per query.

AI company Cohere has added image search to its Embed 3 search model. According to the company, users can now search images and text in a shared database for the first time.

The update is aimed at businesses that manage large collections of product images, design files, and reports.

The system places text and visual content in a single shared embedding space, eliminating the need for separate databases. Organizations can now search across their entire content library, regardless of format.
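To illustrate what one shared space for text and images means in practice, here is a minimal sketch in Python. It does not call Cohere's model: the three-dimensional vectors are made-up toy values standing in for real embeddings, and the names `Item`, `cosine`, `search`, and `INDEX` are hypothetical. The point is only that once both content types live in the same vector space, a single similarity ranking covers both.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    kind: str            # "text" or "image"
    label: str           # human-readable name of the stored content
    vector: list[float]  # toy embedding; real embeddings have far more dimensions


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def search(index: list[Item], query_vector: list[float], top_k: int = 2) -> list[Item]:
    """Rank every item, text or image, by similarity to the query vector."""
    ranked = sorted(index, key=lambda item: cosine(item.vector, query_vector), reverse=True)
    return ranked[:top_k]


# One index holding both content types (all values are invented for illustration).
INDEX = [
    Item("text", "Q3 sales report", [0.9, 0.1, 0.0]),
    Item("image", "bar_chart.png", [0.8, 0.2, 0.1]),
    Item("image", "sneaker_photo.jpg", [0.0, 0.1, 0.9]),
]

# A query vector that lies close to the report and the chart.
results = search(INDEX, [1.0, 0.1, 0.0])
for item in results:
    print(item.kind, item.label)
```

With these toy values, the query returns one text item and one image item from the same index, which is the behavior a separate text database and image database could not provide in a single lookup.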

Images can be up to 5 megabytes in size

Image embedding works with PNG, JPEG, WebP, and GIF files up to 5 megabytes in size. Cohere kept the existing text processing unchanged, so current text embeddings remain compatible. However, the system can currently only handle one image per query, as batch processing isn't yet available.

Developers can access the new features through Cohere's existing Embed API, which now includes additional image handling parameters. Images must be submitted as Base64-encoded data URLs.
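The input requirements described above (a supported format, the 5-megabyte limit, and a Base64-encoded data URL) can be prepared with the Python standard library alone. The following is a sketch under stated assumptions, not Cohere's own client code: `image_to_data_url` is a hypothetical helper, "5 megabytes" is read as 5 × 1024 × 1024 bytes, and the limit is assumed to apply to the raw file rather than to the encoded string. The actual API call is left out, since the article does not name the image parameters.

```python
import base64

# Formats named in the article, mapped to their standard MIME types.
SUPPORTED_MIME_TYPES = {
    "png": "image/png",
    "jpg": "image/jpeg",
    "jpeg": "image/jpeg",
    "webp": "image/webp",
    "gif": "image/gif",
}

# Assumption: the 5 MB limit means 5 * 1024 * 1024 bytes of raw image data.
MAX_IMAGE_BYTES = 5 * 1024 * 1024


def image_to_data_url(image_bytes: bytes, extension: str) -> str:
    """Hypothetical helper: validate an image and return it as a Base64 data URL."""
    ext = extension.lower().lstrip(".")
    if ext not in SUPPORTED_MIME_TYPES:
        raise ValueError(f"unsupported image format: {extension!r}")
    if len(image_bytes) > MAX_IMAGE_BYTES:
        raise ValueError("image exceeds the 5 MB limit")
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{SUPPORTED_MIME_TYPES[ext]};base64,{encoded}"


# Usage: the first eight bytes of any PNG file stand in for a real image here.
png_signature = b"\x89PNG\r\n\x1a\n"
data_url = image_to_data_url(png_signature, ".png")
print(data_url[:22])  # the "data:image/png;base64," prefix
```

Because only one image is accepted per query, a caller with several images would send one such data URL per request rather than a batch.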

Cohere's updated model works in more than 100 languages and runs on the company's platform, Microsoft Azure, and Amazon SageMaker. Founded by researchers who helped develop the Transformer architecture, the company secured $500 million in funding last July.

The move comes as more companies explore how to search across multimodal content. Google and OpenAI already offer multimodal embedding models. The race is now on to see which system can deliver the speed, accuracy, and security that enterprises need.
