Nvidia trains a tiny AI model that controls humanoid robots better than specialists

Key Points

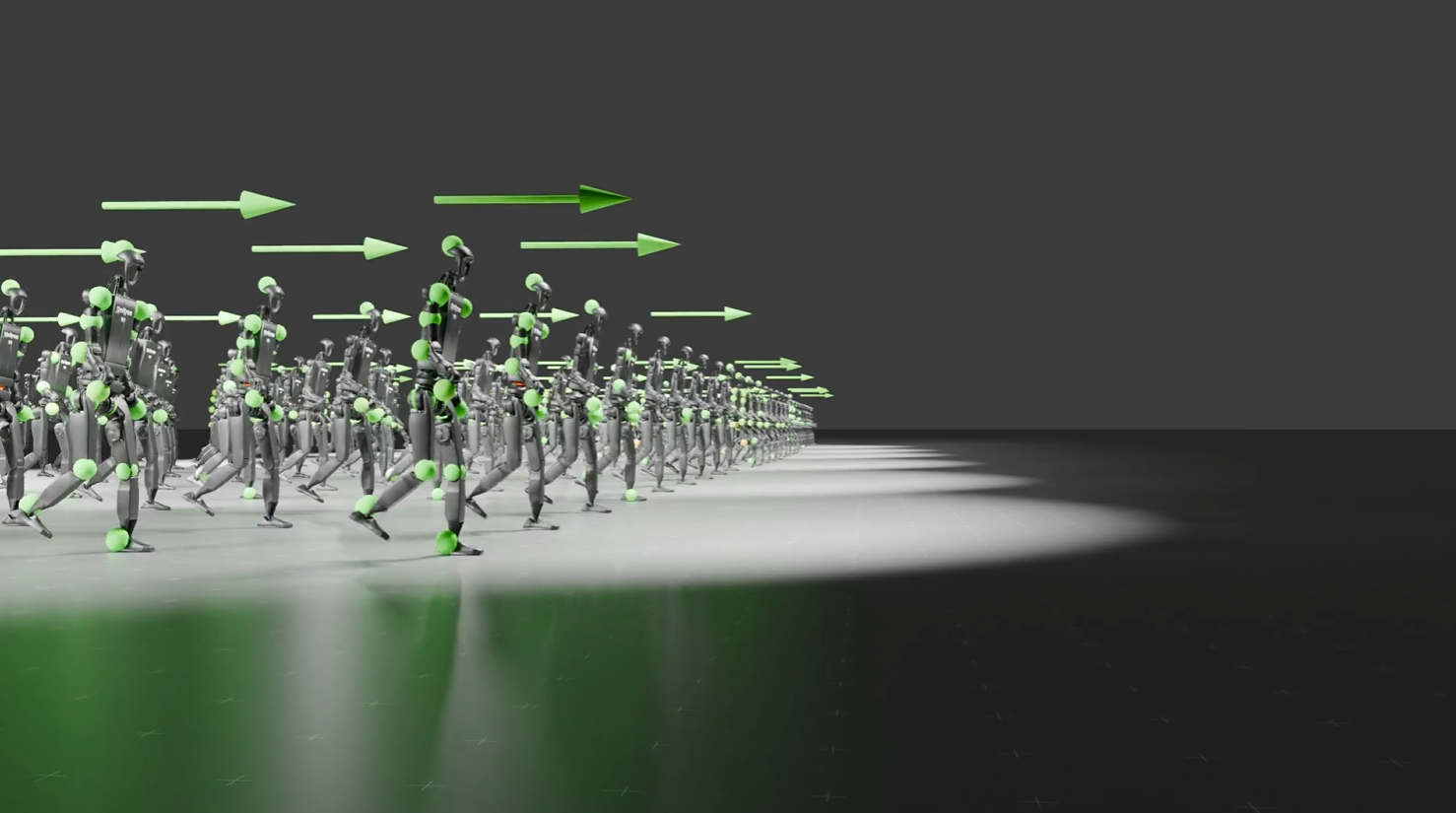

- Nvidia researchers have developed HOVER, a compact neural network with only 1.5 million parameters that can control complex movements of humanoid robots.

- The system supports multiple control modes, including head and hand tracking from XR devices, full-body poses from motion capture or cameras, and joint angles from exoskeletons.

- HOVER was trained in Nvidia's GPU-accelerated Isaac simulation environment, where one year of simulated training takes about 50 minutes of real time on a single GPU. The trained model can be applied directly to real robots without fine-tuning.

Nvidia researchers have built a small neural network that controls humanoid robots more effectively than specialized systems, even though it uses far fewer resources. The system works with multiple input methods, from VR headsets to motion capture.

The new system, called HOVER, needs only 1.5 million parameters to handle complex robot movements. For context, typical large language models use hundreds of billions of parameters.
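To put the size gap in concrete terms, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The 4 bytes per parameter (32-bit floats) and the 100-billion-parameter language model are illustrative assumptions, not figures from Nvidia.

```python
def param_memory_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Approximate weight storage in megabytes (1 MB = 1e6 bytes).

    bytes_per_param=4 assumes 32-bit floats, which is an assumption
    made for illustration only.
    """
    return num_params * bytes_per_param / 1e6


HOVER_PARAMS = 1_500_000          # figure reported in the article
LLM_PARAMS = 100_000_000_000      # hypothetical 100B-parameter language model

hover_mb = param_memory_mb(HOVER_PARAMS)
llm_mb = param_memory_mb(LLM_PARAMS)

print(f"HOVER weights: {hover_mb:.0f} MB")
print(f"Hypothetical LLM weights: {llm_mb / 1000:.0f} GB")
print(f"Size ratio: about {LLM_PARAMS / HOVER_PARAMS:,.0f}x")
```

Under these assumptions the HOVER weights come to a few megabytes, while the hypothetical language model needs hundreds of gigabytes.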

The team trained HOVER in Nvidia's Isaac simulation environment, which simulates robot movement 10,000 times faster than real time. According to Nvidia researcher Jim Fan, this means that a full year of training in the virtual space takes just 50 minutes of actual computing time on one GPU.
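The 50-minute figure can be checked with simple arithmetic. The sketch below divides one year of simulated time by the 10,000x factor quoted above; the function name is made up for illustration, and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, assuming a 365-day year


def wall_clock_minutes(simulated_minutes: float, speedup: float) -> float:
    """Real time needed to cover a span of simulated time at a given speedup."""
    return simulated_minutes / speedup


minutes = wall_clock_minutes(MINUTES_PER_YEAR, speedup=10_000)
print(f"One simulated year takes about {minutes:.1f} minutes of real time")
```

The result is roughly 53 minutes, consistent with the "about 50 minutes" Fan cites.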

Small and versatile

HOVER transfers zero-shot from simulation to physical robots, with no fine-tuning required, says Fan. The system accepts input from multiple sources, including head and hand tracking from XR devices such as Apple Vision Pro, full-body positions from motion capture or RGB cameras, joint angles from exoskeletons, and standard joystick controls.

The HOVER model allows a robot to be remotely controlled via a VR headset without any fine-tuning. | Video: Nvidia

In each control mode, the system outperforms systems built specifically for that one type of input. Lead author Tairan He speculates that this may be due to the system's broad understanding of physical concepts such as balance and precise limb control, which it applies across all control types.

The system builds on the open-source H2O and OmniH2O projects and works with any humanoid robot that can run in the Isaac simulator. Nvidia has posted examples and code on GitHub.
