Seattle startup shrinks computer vision AI to fit in your pocket

Moondream, a startup based in Seattle, has released moondream2, a compact vision language model that performs well in benchmarks despite its small size. The open-source model could pave the way for local image recognition on smartphones.

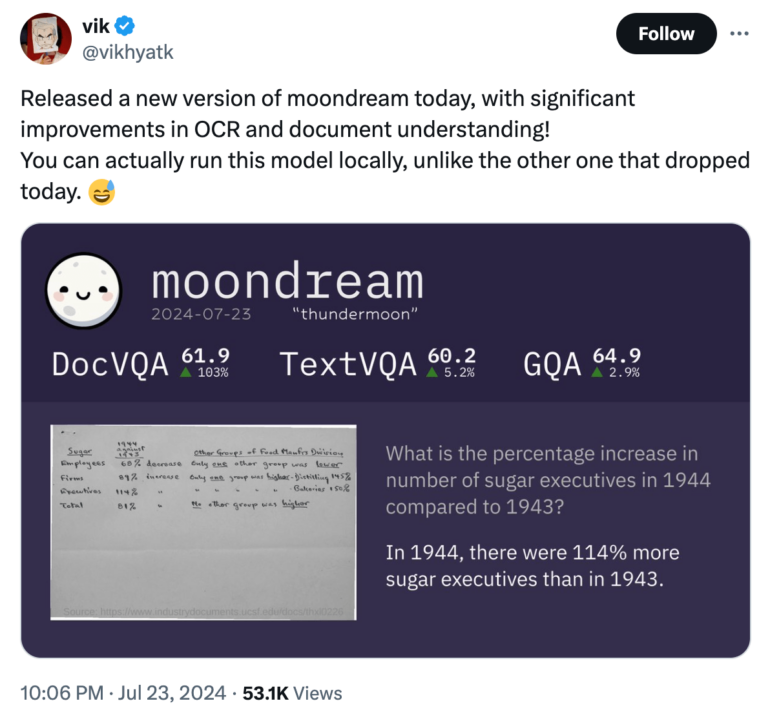

In March, the US startup Moondream released moondream2, a vision language model (VLM) that has attracted significant attention. The model can process both text and image inputs, enabling it to answer questions, extract text (OCR), count objects, and classify items. Since its release, regular updates have continued to improve its benchmark performance.

What makes moondream2 remarkable is its compact size: with only 1.6 billion parameters, the model can run not just on cloud servers but also on local computers and even less powerful devices like smartphones or single-board computers.
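To see why the parameter count matters for small devices, a rough back-of-the-envelope estimate helps. The sketch below multiplies the 1.6 billion parameters mentioned above by the storage size of common numeric formats. The precision names and the helper `weight_memory_gb` are illustrative assumptions and are not taken from the moondream2 project. The estimate covers model weights only and ignores activations, caches, and runtime overhead.

```python
# Rough estimate of the memory needed to hold model weights.
# Assumption: every parameter is stored at the same precision; real
# deployments may mix formats and need extra memory at runtime.

# Bytes used per parameter for some common numeric formats.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    num_params = 1.6e9  # parameter count reported in the article
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gb(num_params, precision)
        print(f"{precision}: about {size:.1f} GB of weights")
```

Under these assumptions, 16-bit weights come to roughly 3.2 GB, and more aggressive quantization shrinks that further, which puts the model in the range of memory found on current phones and single-board computers.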

Despite its small size, moondream2 performs strongly, outperforming some competing models many times its size in certain benchmarks. In a comparison of VLMs on mobile devices, researchers highlighted its results:

> In particular, we note that although moondream2 has only about 1.7B parameters, its performance is quite comparable to the 7B models. It only falls behind on SQA, the only dataset that provides a related context besides the image and questions for the models to answer the questions effectively. This could indicate that even the strongest smaller models are not able to understand the context.
>
> Murthy et al., "MobileAIBench: Benchmarking LLMs and LMMs for On-Device Use Cases"

According to developer Vikhyat Korrapati, the model builds on existing components such as the SigLIP vision encoder, Microsoft's Phi-1.5 language model, and the LLaVA training dataset.

moondream2 is developed as open source and is available as a free download on GitHub and as a demo on Hugging Face. On GitHub, it has generated significant interest in the developer community, earning more than 5,000 stars.

Millions invested in startup

This success has attracted investor attention: in a pre-seed funding round led by Felicis Ventures, Microsoft's M12 GitHub Fund, and Ascend, the company recently raised $4.5 million. Moondream is led by CEO Jay Allen, who previously worked at Amazon Web Services for many years.

moondream2 joins a series of specialized and optimized open-source models that deliver performance similar to that of larger, older models while requiring fewer resources to run. With GOT, researchers recently presented a model trained for OCR tasks, and the startup Useful Sensors released Moonshine, a promising solution for speech transcription.

Lower resource requirements are particularly relevant for smartphones, and open-source advances like moondream2 demonstrate that running such models on-device is technically feasible. However, practical application remains complicated for consumers. While there are small on-device models for Apple Intelligence and Google's Gemini Nano, both manufacturers still outsource more complex tasks to the cloud.
