Apple's new LLM-powered Siri for human-like conversations reportedly debuts in 2026

Apple is developing a new version of Siri that will use large language models (LLMs) to enable more natural conversations, but users will need to wait until 2026 to try it, according to Bloomberg.

The project, known internally as "LLM Siri," aims to handle complex queries and interact more naturally with users. While development has been underway for some time, Apple won't announce the technology until 2025, with a public release planned for early 2026, Bloomberg reports.

Apple's approach to AI: methodical, aka slow

Apple's AI rollout is slow compared to its peers. While Google and OpenAI already offer natural language conversations through their products, Apple's current AI capabilities rely on smaller, specialized models with task-specific "adapters" rather than a large multimodal model like GPT-4o.

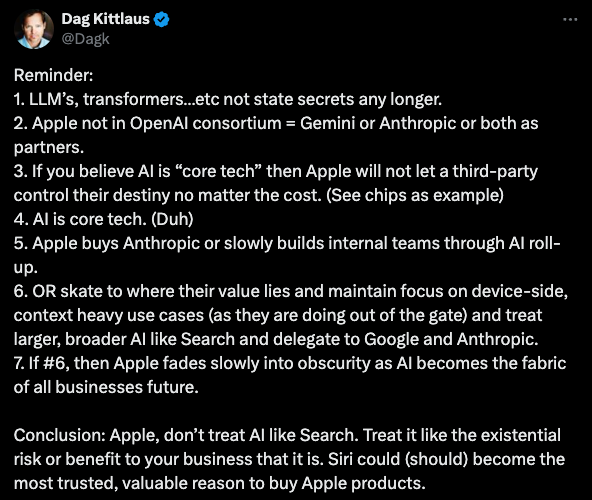

The company's cautious strategy has spurred debate over whether Apple should acquire AI company Anthropic to accelerate its technological progress. Siri co-founder Dag Kittlaus expressed concern, calling AI development an "existential risk" for Apple.

Apple's slower approach with LLM Siri may be due to technical limitations. While Google's and OpenAI's voice modes are good enough for a fluid conversation, they can't perform actions based on user commands, which is Siri's primary function.

AI systems that can handle more complex actions, sometimes described as agentic AI, aren't reliable enough yet. This is why Google still keeps both its Assistant and Gemini services running, which can be confusing for users.

Amazon's Alexa has reportedly hit similar limitations with current language models when it comes to executing commands. Apple might be waiting for the next generation of AI that can better manage these practical tasks.

Apple's AI rollout plan

Apple engineers are currently testing the new Siri as a separate application on iPhones, iPads and Macs, according to Bloomberg. The company plans to eventually replace the current Siri interface with this new technology.

The rollout is planned in several phases. Apple will start by integrating ChatGPT into Apple Intelligence next month. After that, the company will add other models such as Google Gemini. These capabilities will then be integrated directly into the new Siri, with a strong focus on privacy features.

The company may continue offering access to specialized third-party AI systems even after launching the new version. Apple declined to comment on Bloomberg's report.
