Adobe's "MultiFoley" AI creates synchronized sound effects for video

Adobe Research and University of Michigan researchers have created an AI system that generates Foley sounds—the custom sound effects added to films and videos during post-production.

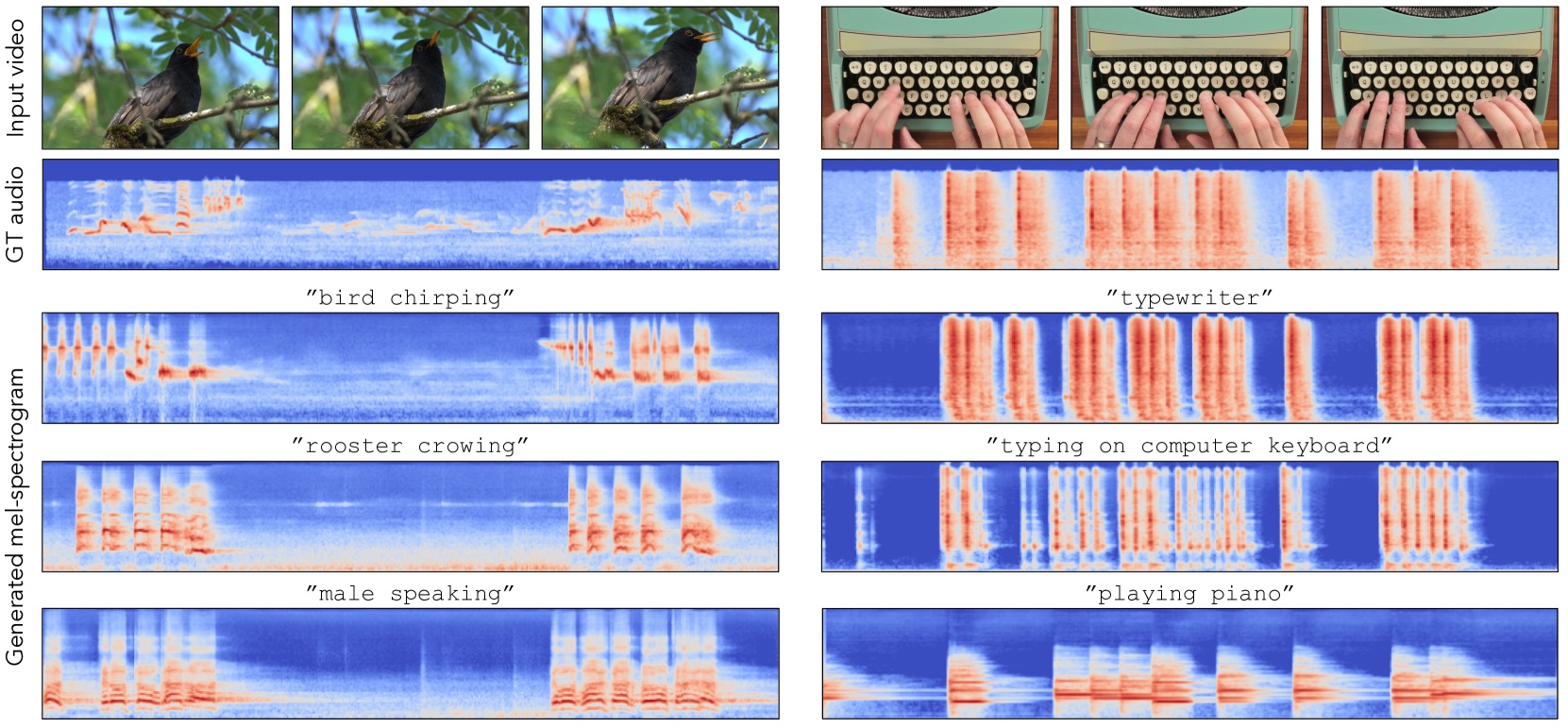

The system, called MultiFoley, lets users create sounds through text prompts, reference audio, or video examples. In demonstrations, the system transformed a cat's meow into a lion's roar and made typewriter sounds play like piano notes, all while maintaining precise synchronization with the video.

Video: Adobe

The system stands out for its ability to generate high-quality audio at a 48 kHz sampling rate. The researchers achieved this by training the AI on both internet videos and professional sound effect libraries.

MultiFoley is the first system to combine multiple input methods (text, audio, and video references) in a single model. It keeps the generated audio tightly synchronized with the video through a mechanism that extracts visual features at 8 frames per second and then upsamples them to match the 40 Hz frame rate of the model's audio representation.
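Matching an 8 fps visual stream to a 40 Hz audio stream means every video feature has to cover five audio frames. The snippet below is a minimal sketch of that alignment step, assuming simple nearest-neighbour repetition and an integer rate ratio; the function name `upsample_features` and the toy feature values are hypothetical, and the article does not say which upsampling method MultiFoley actually uses.

```python
from typing import List

VIDEO_FPS = 8        # visual feature rate given in the article
AUDIO_RATE_HZ = 40   # rate the visual features must be brought up to


def upsample_features(features: List[List[float]],
                      src_rate: int = VIDEO_FPS,
                      dst_rate: int = AUDIO_RATE_HZ) -> List[List[float]]:
    """Hypothetical nearest-neighbour upsampling: repeat each visual
    feature vector dst_rate // src_rate times so that audio frame j
    is paired with video frame j // factor.

    Assumption: integer rate ratio (40 / 8 = 5) and plain repetition;
    MultiFoley's real mechanism may interpolate or learn this step.
    """
    if dst_rate % src_rate != 0:
        raise ValueError("this sketch only handles integer rate ratios")
    factor = dst_rate // src_rate
    out: List[List[float]] = []
    for vec in features:
        # Copy the vector so later edits to one frame don't alias others.
        out.extend([list(vec) for _ in range(factor)])
    return out


if __name__ == "__main__":
    # Two seconds of made-up 3-dimensional visual features at 8 fps.
    clip = [[float(t), 0.0, 1.0] for t in range(2 * VIDEO_FPS)]
    aligned = upsample_features(clip)
    print(len(clip), "->", len(aligned))  # 16 -> 80
```

Repetition keeps each audio frame tied to the video frame whose time window contains it, which is the property synchronization depends on; a learned or interpolated upsampler would smooth transitions between frames at the cost of extra complexity.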

The system achieves average synchronization accuracy of 0.8 seconds, significantly better than previous systems that typically lagged by more than a second.

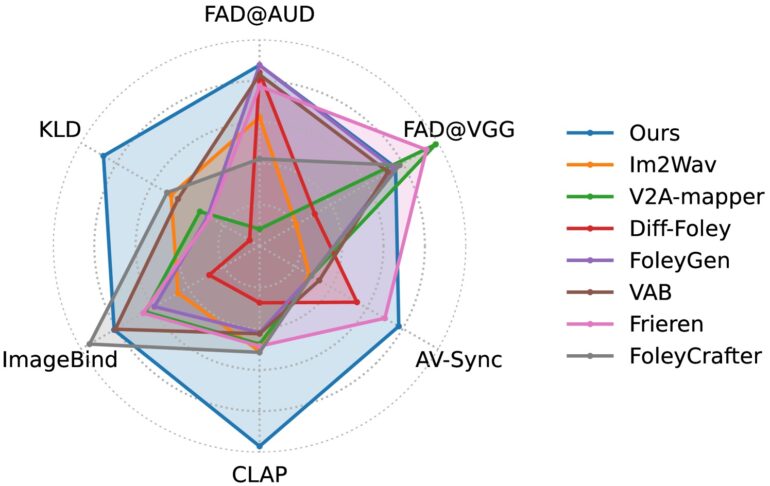

Testing shows major improvements in sound quality and timing

In tests against existing systems, MultiFoley showed superior performance in audio-video synchronization and matching generated sounds to text descriptions. A user study found that 85.8 percent of participants rated MultiFoley's semantic consistency better than the next-best system, while 94.5 percent preferred its synchronization.

The researchers note some current limitations. The system's training data was relatively small, limiting its range of sound effects. It also struggles with generating multiple simultaneous sounds.

The team plans to release the source code and models soon. While Adobe hasn't announced plans to add MultiFoley to its products, the technology would fit naturally alongside the AI capabilities already present in Adobe's Premiere Pro video editing software. The system could benefit individual creators as well as production companies looking to streamline their sound design process.
