OpenAI takes another shot at building robots

Key Points

- OpenAI is rebuilding its robotics department and is looking to fill key positions, including an electrical sensor engineer, a robotics mechanical engineer, and a technical project manager.

- The company aims to develop flexible and versatile robotic systems that can function beyond simulation through continuous testing and iteration, marking a shift in strategy after it closed its robotics division in 2020.

- OpenAI's renewed interest in robotics could signify that a software-only approach may not be sufficient for developing AGI, that the company has found ways to overcome the data shortage previously cited as a reason for closing the division, or that new business opportunities have emerged from combining generative AI with robotics.

OpenAI is stepping back into robotics, about four years after shuttering its original robotics program.

The company is looking to build a new team from scratch and is advertising for three key roles: an electrical sensor engineer to work on robot sensors, a robotics mechanical engineer to work on the nuts and bolts, such as gearboxes and motors, and a technical project manager to oversee product development and operations.

According to the job listings, these positions will help create what OpenAI calls the "next generation of embodied AI" and push toward "AGI-level intelligence," which sounds like corporate speak for "we want to build robots that can actually handle the real world." The goal is to move beyond simulations and create robots that can adapt to messy, unpredictable environments through constant testing and refinement.

"Working across the entire model stack, we integrate cutting-edge hardware and software to explore a broad range of robotic form factors," one listing reads.

A change of heart

This is quite a turnaround from October 2020, when OpenAI shut down its robotics division. At the time, Wojciech Zaremba, an OpenAI co-founder and its head of robotics, said the company needed to focus on large language models and human feedback training to pursue artificial general intelligence (AGI). Sam Altman, OpenAI's CEO, called the company's robotics research premature earlier this year.

The main issue at the time was data, or rather the lack of it. The robotics team couldn't get its hands on the massive datasets needed to effectively pre-train AI models, which was OpenAI's main strategy back then. These days, the company seems to have shifted its focus toward test-time compute, which spends more computation at inference rather than relying mainly on pre-training.

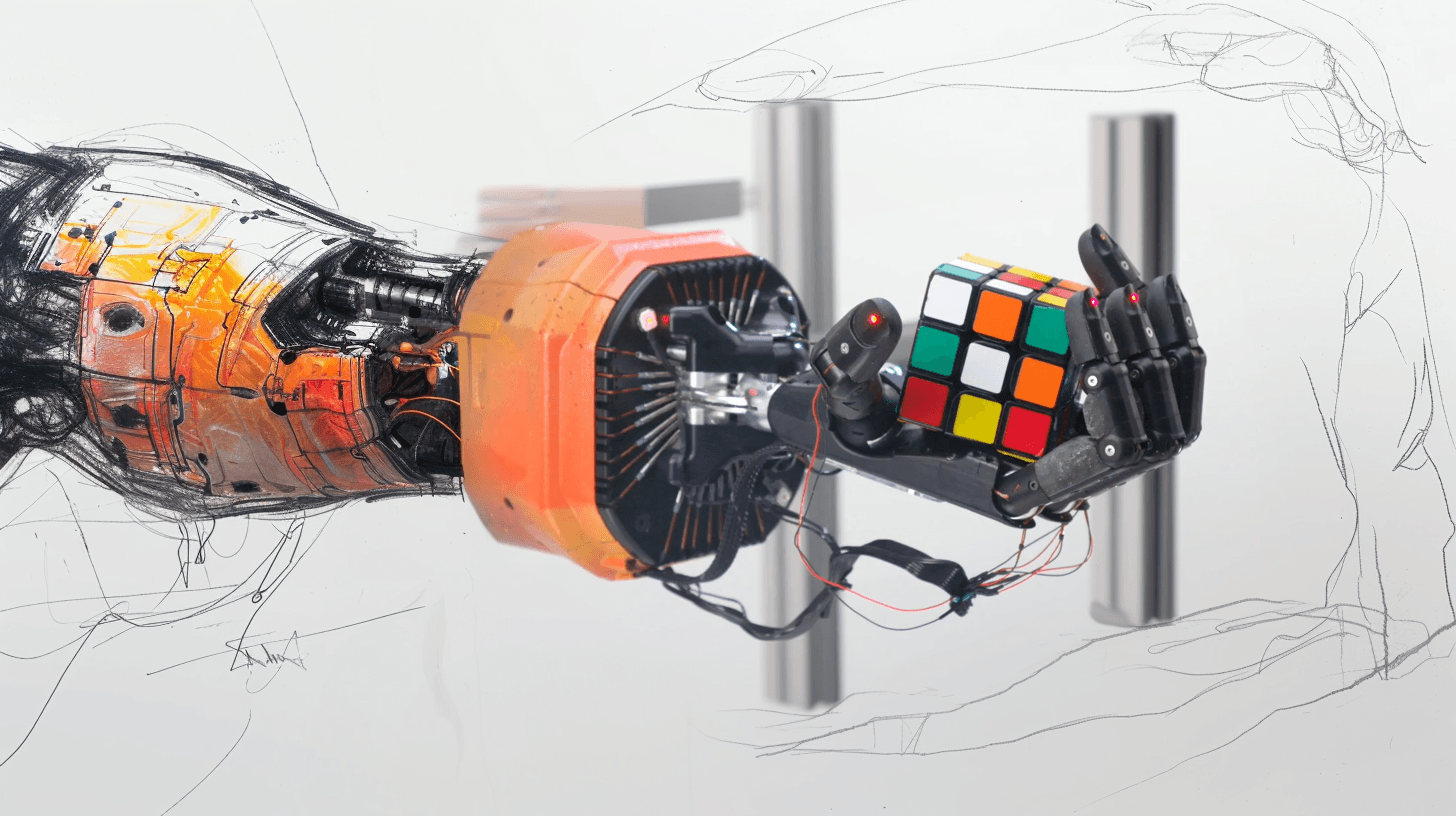

OpenAI shut down its robotics department despite some impressive achievements. Its Dactyl robot hand made headlines in 2019 by solving a Rubik's Cube single-handedly, successfully bridging the notorious gap between simulation and reality.

Why now?

While we've known since May that OpenAI is planning a return to robotics, these job listings reveal that they're also getting back into hardware. This could mean several things.

Maybe they've realized that a software-only approach isn't enough to build AGI. Or maybe they've found ways around their old data problems, perhaps through synthetic data and better simulations.

There's also the simple explanation: they see a business opportunity, betting that generative AI can supercharge robot capabilities. The idea is that language models' broad knowledge of the world, combined with advanced vision systems, could help robots interact more naturally with their environment.

This vision is already taking shape through their partnership with Figure AI, a robotics startup that is currently testing its OpenAI-powered robots in BMW factories.
