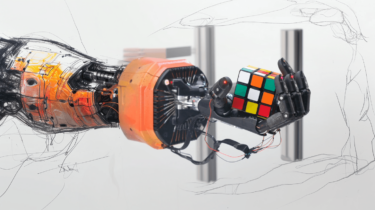

In collaboration with OpenAI, robotics company Figure has developed a robot that can hold full conversations and plan and execute its actions.

This is achieved by connecting the robot to a multimodal model trained by OpenAI that understands images and text.

With this model, Figure's robot, called "Figure 01," can describe its surroundings, interpret everyday situations, and act on highly ambiguous, context-dependent requests.

According to Figure, every action in the video is learned rather than remote-controlled, and the video plays at normal speed.

Corey Lynch, robotics and AI engineer at Figure, is excited about the progress: "Even just a few years ago, I would have thought having a full conversation with a humanoid robot while it plans and carries out its own fully learned behaviors would be something we would have to wait decades to see. Obviously, a lot has changed."

Google has already demonstrated similar research with its RT models, which let a robot navigate an everyday environment and plan and carry out complex actions using language and image models. But Google's demo robots weren't so chatty.

A robot that listens, plans, thinks, reasons and acts

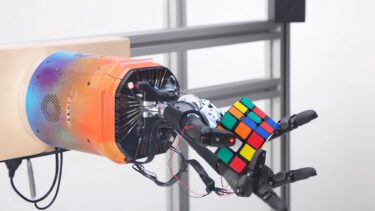

Lynch describes the robot's capabilities in detail: it can describe what it sees, plan future actions, reflect on its memory, and explain out loud the reasoning behind its actions.

To do this, OpenAI's multimodal model processes the entire conversation history, including past images, and generates language responses that are spoken back to the human. The same model also decides which learned behavior the robot should run to carry out a given command.

For example, the robot can correctly handle the request "Can you put that there?" by referring back to earlier parts of the conversation to work out what "that" and "there" refer to. In one example, it understands that the dishes lying around should probably go in the dish rack, something I couldn't figure out until I was over 40.
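Figure hasn't published code for this pipeline, but the flow described here (one model call that sees the whole history and returns both a reply and a behavior choice) can be sketched in Python. Everything in it is hypothetical: `Turn`, `Conversation`, `step`, `toy_model`, and the behavior name `place_dishes_in_rack` are illustrative names, and `toy_model` is a keyword-matching stand-in for the real multimodal model, which would reason over actual images rather than image IDs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Turn:
    speaker: str                    # "human" or "robot"
    text: str
    image_id: Optional[str] = None  # camera frame captured at this turn, if any


@dataclass
class Conversation:
    turns: List[Turn] = field(default_factory=list)

    def add(self, speaker: str, text: str, image_id: Optional[str] = None) -> None:
        self.turns.append(Turn(speaker, text, image_id))


# Hypothetical model interface: full history in, (spoken reply, behavior name or None) out.
ModelFn = Callable[[List[Turn]], Tuple[str, Optional[str]]]


def toy_model(history: List[Turn]) -> Tuple[str, Optional[str]]:
    """Stand-in for the multimodal model. It only pattern-matches text."""
    last = history[-1].text.lower()
    if "put that there" in last:
        # Toy reference resolution: search earlier turns for what "that" could mean.
        for turn in reversed(history[:-1]):
            if "dishes" in turn.text.lower():
                return "Putting the dishes in the dish rack.", "place_dishes_in_rack"
        return "Sorry, I'm not sure what you mean.", None
    return "I see dishes on the table.", None


def step(conv: Conversation, model: ModelFn,
         behaviors: Dict[str, Callable[[], str]],
         user_text: str, image_id: str) -> Tuple[str, Optional[str]]:
    """Record the human turn, make one model call, then run the chosen behavior."""
    conv.add("human", user_text, image_id)
    reply, behavior = model(conv.turns)  # a single call decides reply AND action
    conv.add("robot", reply)
    result = behaviors[behavior]() if behavior is not None else None
    return reply, result
```

With `behaviors = {"place_dishes_in_rack": lambda: "dishes placed"}`, asking "What do you see?" and then "Can you put that there?" makes the second call look back through the history and pick the dish-rack behavior. The design point is that the reply and the action come from the same call, so both are conditioned on the same history; the real model's reasoning is, of course, not string matching.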

The robot's actions are controlled by visuomotor transformer policies, which map camera images directly to actions. They take in images from the robot's cameras at 10 Hz and output actions with 24 degrees of freedom (wrist poses and finger joint angles) at 200 Hz.
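The two rates imply that each camera frame is followed by 200 / 10 = 20 action outputs. The article doesn't say how the policies bridge the two rates, so the sketch below assumes the simplest scheme: hold the latest frame and emit 20 actions from it before the next frame arrives. `read_camera`, `policy`, and `control_loop` are hypothetical names, and the policy returns zero vectors instead of real wrist poses and finger joint angles.

```python
from typing import List, Tuple

IMAGE_HZ = 10     # camera frames per second fed to the policy (from the article)
ACTION_HZ = 200   # action outputs per second (from the article)
ACTION_DIM = 24   # degrees of freedom: wrist poses + finger joint angles
TICKS_PER_FRAME = ACTION_HZ // IMAGE_HZ  # 200 / 10 = 20 actions per frame


def read_camera(frame_idx: int) -> int:
    """Hypothetical camera read; returns a frame ID in place of real pixels."""
    return frame_idx


def policy(frame_id: int) -> List[float]:
    """Hypothetical placeholder for a learned visuomotor policy.
    A real policy would map pixels to a 24-DOF action; this returns zeros."""
    return [0.0] * ACTION_DIM


def control_loop(seconds: int = 1) -> List[Tuple[int, List[float]]]:
    """Simulate the multi-rate loop, logging (frame used, action) per tick."""
    log: List[Tuple[int, List[float]]] = []
    frame_id = -1
    for tick in range(seconds * ACTION_HZ):
        # A new frame arrives only every TICKS_PER_FRAME action ticks.
        if tick % TICKS_PER_FRAME == 0:
            frame_id = read_camera(tick // TICKS_PER_FRAME)
        log.append((frame_id, policy(frame_id)))
    return log
```

Running `control_loop(1)` produces 200 actions over one simulated second, conditioned on 10 distinct frames, 20 actions per frame. A real controller might instead predict a chunk of future actions per frame; the hold-and-repeat scheme here just makes the rate arithmetic concrete.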