Huginn: New AI model 'thinks' without words

Key Points

- Researchers have developed an AI language model called "Huginn" that uses a recursive architecture to flexibly adapt its computational depth and thus increase its performance.

- The model was trained on a supercomputer while the number of repetitions of its central computational block was varied at random. This taught it to adapt its computational depth to the complexity of the task.

- Huginn performed particularly well on mathematical and programming tasks, outperforming some larger open-source models. The researchers see great potential in this approach as an alternative to classical reasoning models.

A research team from ELLIS Institute Tübingen, the University of Maryland, and Lawrence Livermore National Laboratory has developed a language model called "Huginn" that can deepen its reasoning processes through a recursive architecture.

Unlike conventional reasoning models such as OpenAI's o3-mini, which generate chains of thought through reasoning tokens, Huginn requires no specialized training and reasons in its neural network's latent space before producing any output.

The model was trained on the Frontier supercomputer using 4,096 AMD MI250X GPUs, one of the largest training runs ever conducted on an AMD cluster. The training concept was novel yet fundamentally simple: in contrast to typical language models, Huginn was trained with a variable number of computational iterations.

For each pass, the system randomly determined how many times to repeat the central computation block, anywhere from once to 64 times. The count was drawn from a skewed distribution, so the model trained mostly with fewer repetitions but occasionally performed many iterations.
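The sampling scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' code: the log-normal shape, its parameters, and the names `sample_repeats`, `core_block`, and `forward` are assumptions chosen for the example. Only the range of 1 to 64 repetitions comes from the article.

```python
import math
import random

MAX_REPEATS = 64  # upper bound stated in the article


def sample_repeats(rng, mu=math.log(8.0), sigma=0.8, max_repeats=MAX_REPEATS):
    """Draw an iteration count in [1, max_repeats], skewed toward small values.

    The log-normal shape and its parameters are illustrative assumptions,
    not the distribution used by the researchers.
    """
    raw = rng.lognormvariate(mu, sigma)
    return max(1, min(max_repeats, round(raw)))


def core_block(state, token):
    """Hypothetical stand-in for the repeated computation block."""
    return [math.tanh(0.5 * s + 0.1 * token) for s in state]


def forward(token, repeats, width=4):
    """Apply the same block `repeats` times to a latent state."""
    state = [0.0] * width
    for _ in range(repeats):
        state = core_block(state, token)
    return state


def training_depths(num_steps, seed=0):
    """Return the depth sampled for each of `num_steps` training passes."""
    rng = random.Random(seed)
    return [sample_repeats(rng) for _ in range(num_steps)]
```

Calling `training_depths(1000)` yields mostly small depths with occasional large ones, and each sampled value can be passed to `forward` as `repeats`. In a real training loop the loss would be computed after the final repetition; that part is omitted here.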

Testing shows the model performs particularly well on mathematical tasks and programming challenges. On benchmarks like GSM8k and MATH, it outperforms several tested open-source models that have twice as many parameters and more training data.

Complex patterns emerge in mathematical processing

The researchers documented several emergent capabilities: Without specific training, the system can adjust its computational depth based on task complexity and develop chains of reasoning within its latent space.
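One way to picture depth that adapts to the input is a loop that stops repeating the block once the latent state has settled. The sketch below is a toy under stated assumptions: the article does not say how the depth is chosen, and the convergence test, the tolerance `tol`, and the functions `core_block` and `run_until_stable` are hypothetical.

```python
import math


def core_block(state, x):
    """Hypothetical contraction step; stands in for the recurrent block."""
    return [0.5 * s + x_i for s, x_i in zip(state, x)]


def run_until_stable(x, tol=1e-3, max_repeats=64):
    """Repeat the block until the latent state changes by less than `tol`.

    Returns the final state and the number of repetitions used.
    The stopping rule is an assumption made for illustration.
    """
    state = [0.0] * len(x)
    for step in range(1, max_repeats + 1):
        new_state = core_block(state, x)
        # Euclidean distance between successive latent states
        change = math.sqrt(sum((a - b) ** 2 for a, b in zip(new_state, state)))
        state = new_state
        if change < tol:
            return state, step
    return state, max_repeats
```

In this toy, an input with larger magnitude takes more repetitions to settle than a small one, so the loop spends more computation on it while staying within the 64-step cap.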

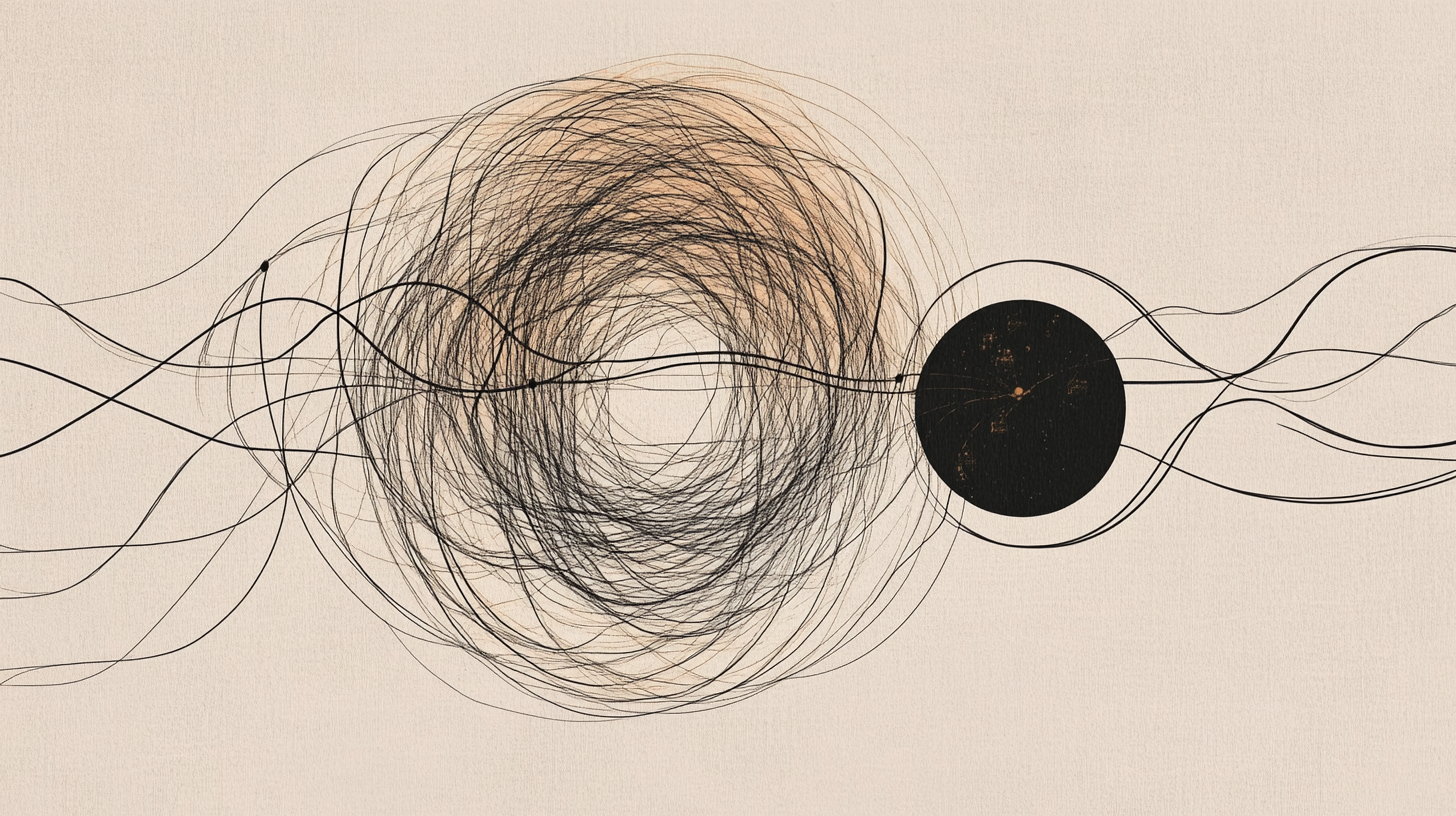

The research team's analysis reveals that the model develops sophisticated computational patterns in its latent space, including circular trajectories when solving mathematical problems. The team sees these examples as evidence that the model independently learns to "utilize the high-dimensional nature of its latent space to draw conclusions in novel ways."

A promising alternative to traditional approaches

While its absolute performance isn't yet groundbreaking, the researchers see significant potential: as a proof of concept, Huginn already demonstrates impressive capabilities despite its relatively small size and limited training data. The performance gains observed with extended reasoning time, together with the emergent capabilities, suggest that larger models using this architecture could become a promising alternative to classical reasoning models.

The team particularly emphasizes that their method could capture types of reasoning that aren't easily expressed in words, that is, as chains of thought. Further research and performance improvements are expected. The researchers suggest reinforcement learning as a possible extension, similar to its use in classical reasoning models.
