Inception Labs introduces its Mercury series of diffusion-based LLMs

Key Points

- Inception Labs has introduced its Mercury models, a new line of large language models that use diffusion technology and that the company says run ten times faster than existing models.

- Unlike current large language models that generate text sequentially, diffusion models use a "coarse-to-fine" approach. Inception Labs claims that this non-sequential method allows for improved reasoning, structured responses, and error correction.

- In standard code generation benchmarks, Mercury Coder matches the performance of autoregressive models while running significantly faster, even on standard Nvidia H100 GPUs. AI expert Andrej Karpathy sees the potential for a distinct and novel "model psychology."

Inception Labs has introduced Mercury, a new series of large language models that use diffusion technology rather than traditional autoregressive processing. The company reports these models can process tasks 10 times faster than current approaches, with initial releases focused on coding applications.

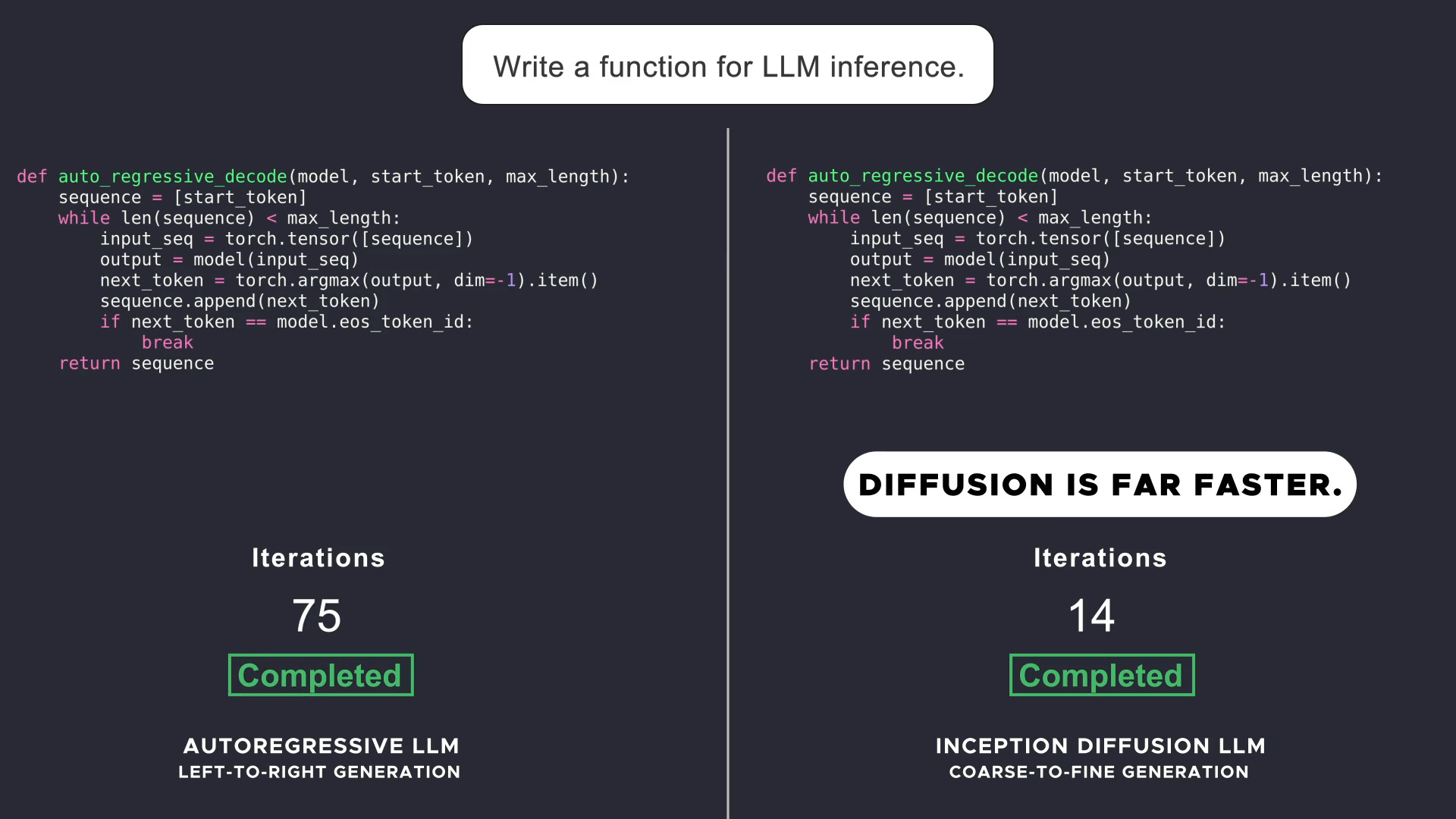

Current large language models are autoregressive: they generate text sequentially, one token at a time "from left to right." Mercury's diffusion models instead use a "coarse-to-fine" approach, starting from pure noise and refining the entire output over several steps.

For the same task, Mercury Coder requires significantly fewer passes than an autoregressive model. | Video: Inception Labs
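Inception Labs has not published how Mercury's sampler works, so the following is only a toy Python sketch of the general coarse-to-fine idea, built on stated assumptions. It assumes a masked-token form of text diffusion, where generation starts from an all-masked sequence (the text analogue of noise). It also uses a hypothetical `toy_denoiser` that already knows the target and returns random confidence scores, standing in for a trained model. In every parallel pass, the most confident masked positions are committed. That is why a 12-token output needs only 4 passes with 4 steps, versus 12 passes for token-by-token decoding.

```python
import math
import random

MASK = "<mask>"


def toy_denoiser(tokens, target, rng):
    """Hypothetical stand-in for a trained diffusion LM (assumption, not Mercury's model).

    Returns a (predicted_token, confidence) pair for every position in one
    parallel pass. A real model would condition on `tokens`; this toy simply
    knows the target and assigns random confidences.
    """
    return [(target[i], rng.random()) for i in range(len(tokens))]


def diffusion_decode(target, steps, seed=0):
    """Masked-diffusion style decoding: refine the whole sequence in parallel passes."""
    rng = random.Random(seed)
    n = len(target)
    tokens = [MASK] * n                  # start fully masked ("pure noise" for text)
    per_step = math.ceil(n / steps)      # positions committed per pass
    passes = 0
    history = []
    while MASK in tokens:
        preds = toy_denoiser(tokens, target, rng)
        passes += 1
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Commit the most confident masked positions; the rest stay masked.
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[:per_step]:
            tokens[i] = preds[i][0]
        history.append(" ".join(tokens))
    return tokens, passes, history


def autoregressive_decode(target):
    """Left-to-right decoding: one forward pass per generated token."""
    tokens = []
    passes = 0
    for tok in target:
        passes += 1
        tokens.append(tok)
    return tokens, passes


if __name__ == "__main__":
    target = "def add ( a , b ) : return a + b".split()   # 12 tokens
    tokens, passes, history = diffusion_decode(target, steps=4)
    _, ar_passes = autoregressive_decode(target)
    for line in history:
        print(line)                      # output fills in from coarse to complete
    print(passes, ar_passes)             # 4 12
```

This toy leaves out the error correction that Inception Labs describes. Once a token is committed, it is never revisited, whereas real diffusion samplers can re-mask and revise earlier choices. The two kinds of pass also cost different amounts: a diffusion pass processes the whole sequence, while a cached autoregressive pass processes one new token.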

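According to Inception Labs, the non-sequential approach changes how the model handles reasoning, response structure, and error correction, because it refines the whole output at once rather than committing to one token at a time. While diffusion technology is standard in image and video generation, it remains uncommon in text and audio applications.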

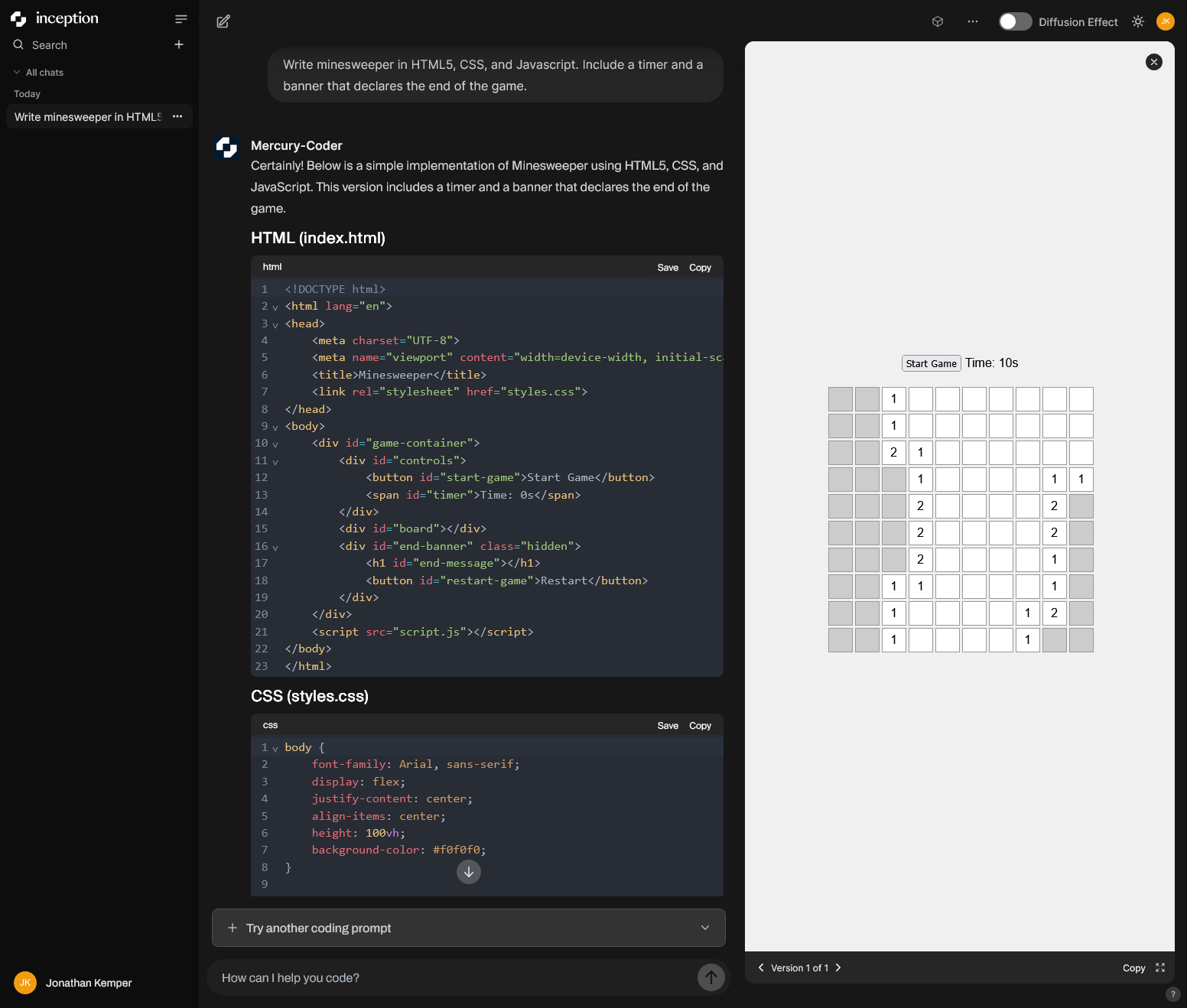

Mercury Coder is available for testing at chat.inceptionlabs.ai. The system processes prompts while showing an interactive preview of the generated software in a sidebar.

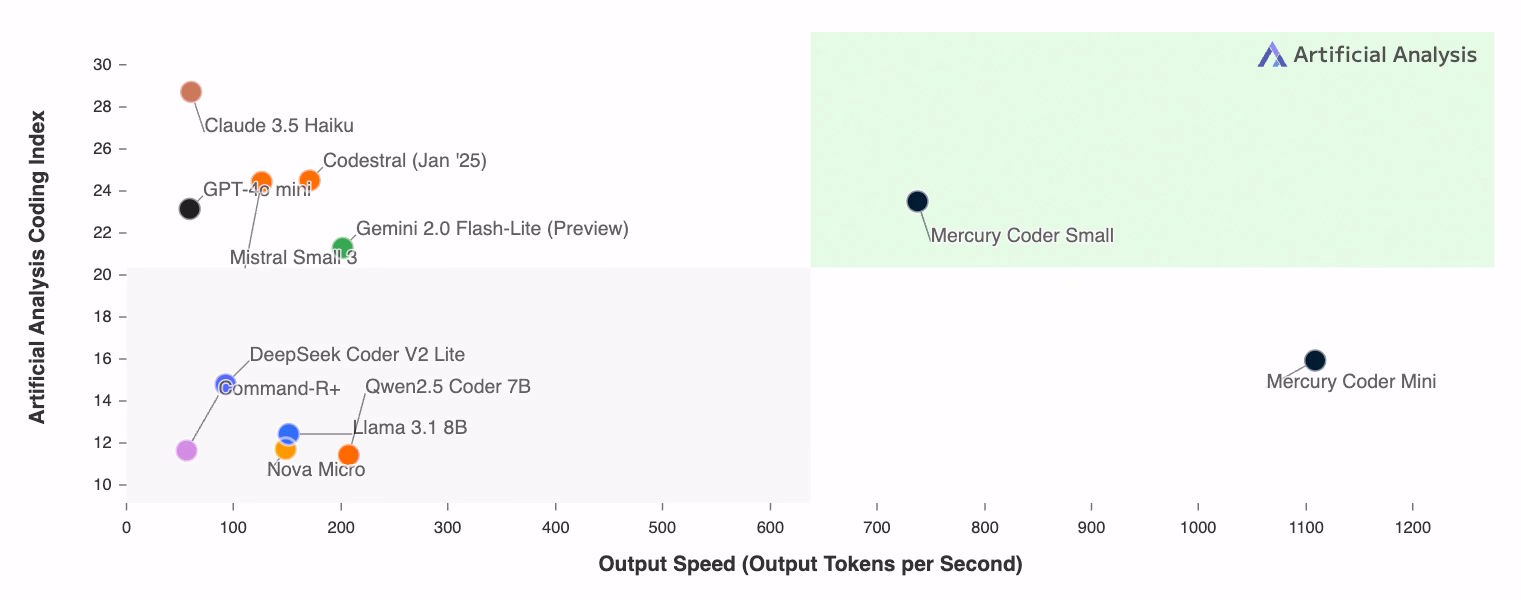

Performance comparisons

In standard code generation tests, Mercury Coder performs similarly to autoregressive models such as Gemini 2.0 Flash-Lite and GPT-4o-mini, while running faster on standard Nvidia H100 GPUs. The system generates more than 1,000 tokens per second, a speed previously reached only with specialized AI inference chips such as those from Groq.

Inception Labs is testing the technology for customer support, code generation, and business automation. Some of its customers have begun replacing autoregressive models with Mercury, and a chat model is in closed beta testing.

Former OpenAI researcher Andrej Karpathy discussed Mercury's approach on X. He noted that the preference for autoregression in text and audio, versus diffusion in images and video, has long been an open technical question: it has been "a bit of a mystery to me and many others why, for some reason, text prefers Autoregression" over diffusion.

"If you look close enough, a lot of interesting connections emerge between the two as well," Karpathy writes, adding that Mercury may exhibit "new, unique psychology, or new strengths and weaknesses."

Mercury Coder is available through a public playground. Enterprise customers can request access to Mercury Coder Mini and Mercury Coder Small via an API or for deployment on their own infrastructure. Pricing has not been released.
