Grok 3 Mini turns up the heat as AI price wars push model costs even lower

xAI is pushing into efficient AI with the release of Grok 3 Mini, its newest language model. Both Grok 3 and its Mini sibling are available through the xAI API.

The Grok 3 family currently includes six variants: Grok 3, Grok 3 Fast, and four versions of Grok 3 Mini, which comes in standard and fast speeds, each with either a low or high reasoning setting.

According to xAI, Grok 3 Mini was purpose-built for speed and affordability while still packing an integrated reasoning process—a notable distinction from the larger Grok 3, which operates without explicit reasoning.

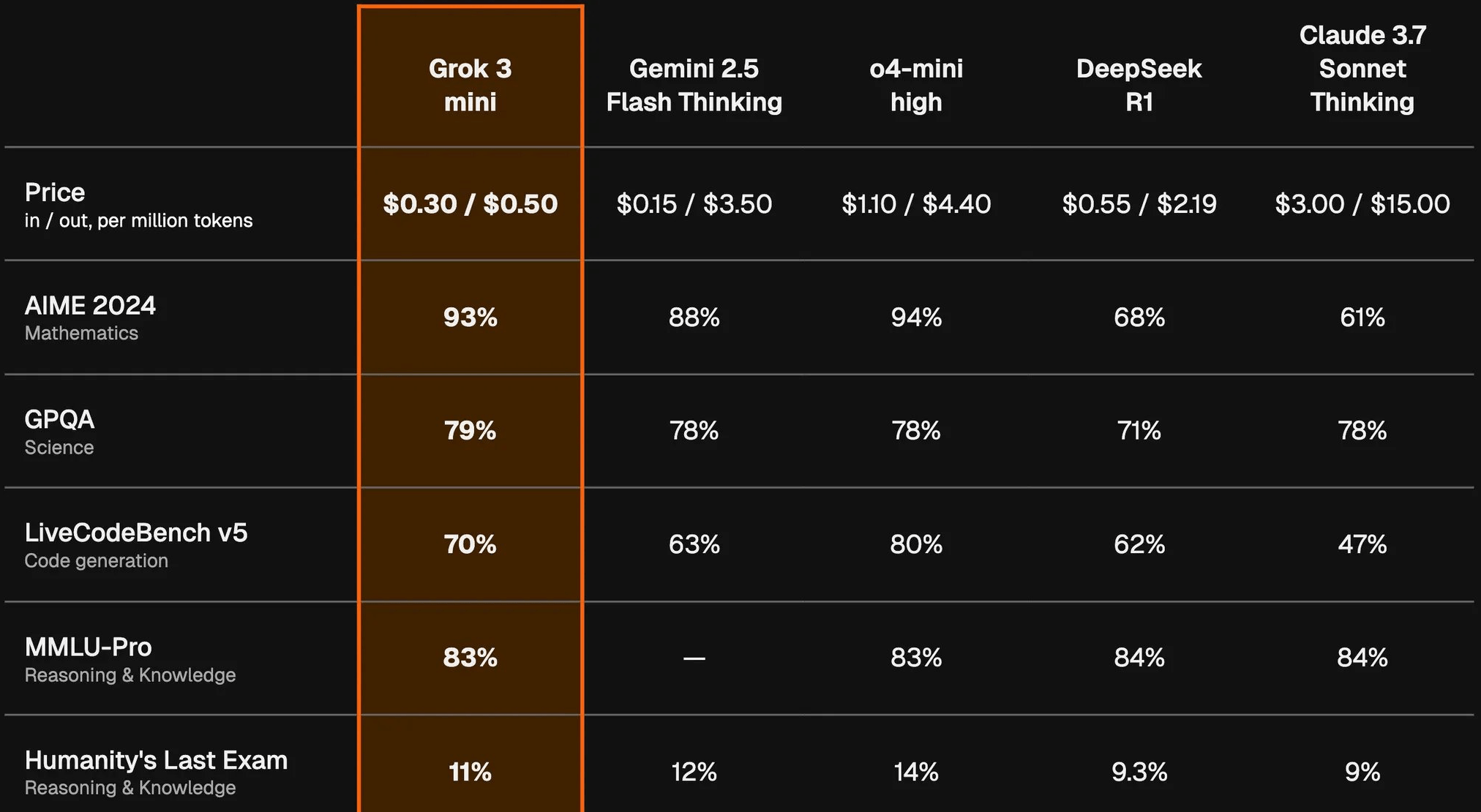

xAI claims Grok 3 Mini tops leaderboards in math, programming, and college-level science benchmarks while costing up to five times less than other reasoning models. Despite its smaller size, xAI says it even outperforms more expensive flagship models in several areas.

The pressure on pricing in the AI space isn’t letting up—especially after Google’s recent cost drop with Gemini 2.5 Flash. Grok 3 Mini only turns up the heat.

One noteworthy feature: xAI is shipping a full reasoning trace with every API response. This is meant to give developers more transparency into model behavior, though as ongoing research points out, these apparent "thought processes" can sometimes be misleading.
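As a rough illustration of what working with such a trace could look like, here is a minimal sketch that separates the reasoning from the final answer. It assumes the response follows the familiar chat-completion layout and that the trace arrives in a message field named `reasoning_content`; the field name, the model string, and the mock response are assumptions for illustration, not details confirmed by the article.

```python
from typing import Any, Dict, Optional, Tuple


def split_reasoning(response: Dict[str, Any]) -> Tuple[Optional[str], str]:
    """Return (reasoning_trace, final_answer) from a chat-completion-style dict.

    Assumption: the trace is stored under message["reasoning_content"].
    If the field is absent, the trace is returned as None.
    """
    message = response["choices"][0]["message"]
    return message.get("reasoning_content"), message.get("content", "")


# Hypothetical response, hand-written for this example (no API call is made).
mock_response = {
    "model": "grok-3-mini",
    "choices": [
        {
            "message": {
                "role": "assistant",
                "reasoning_content": "17 is not divisible by 2, 3, or any integer up to 4.",
                "content": "Yes, 17 is prime.",
            }
        }
    ],
}

trace, answer = split_reasoning(mock_response)
print("Reasoning:", trace)
print("Answer:", answer)
```

Keeping the trace separate from the answer makes it easy to log or inspect the reasoning without showing it to end users, which matters given the caveat that such traces can be misleading.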

Grok 3 Mini is the new addition to the lineup, but both it and Grok 3 are now accessible to developers via the xAI API, which integrates with established toolchains to ease adoption.

Grok 3 continues to be aimed at demanding tasks that require deep world knowledge and domain expertise, with xAI calling it the most powerful model available without a dedicated reasoning component.

Benchmarking Grok 3 Mini

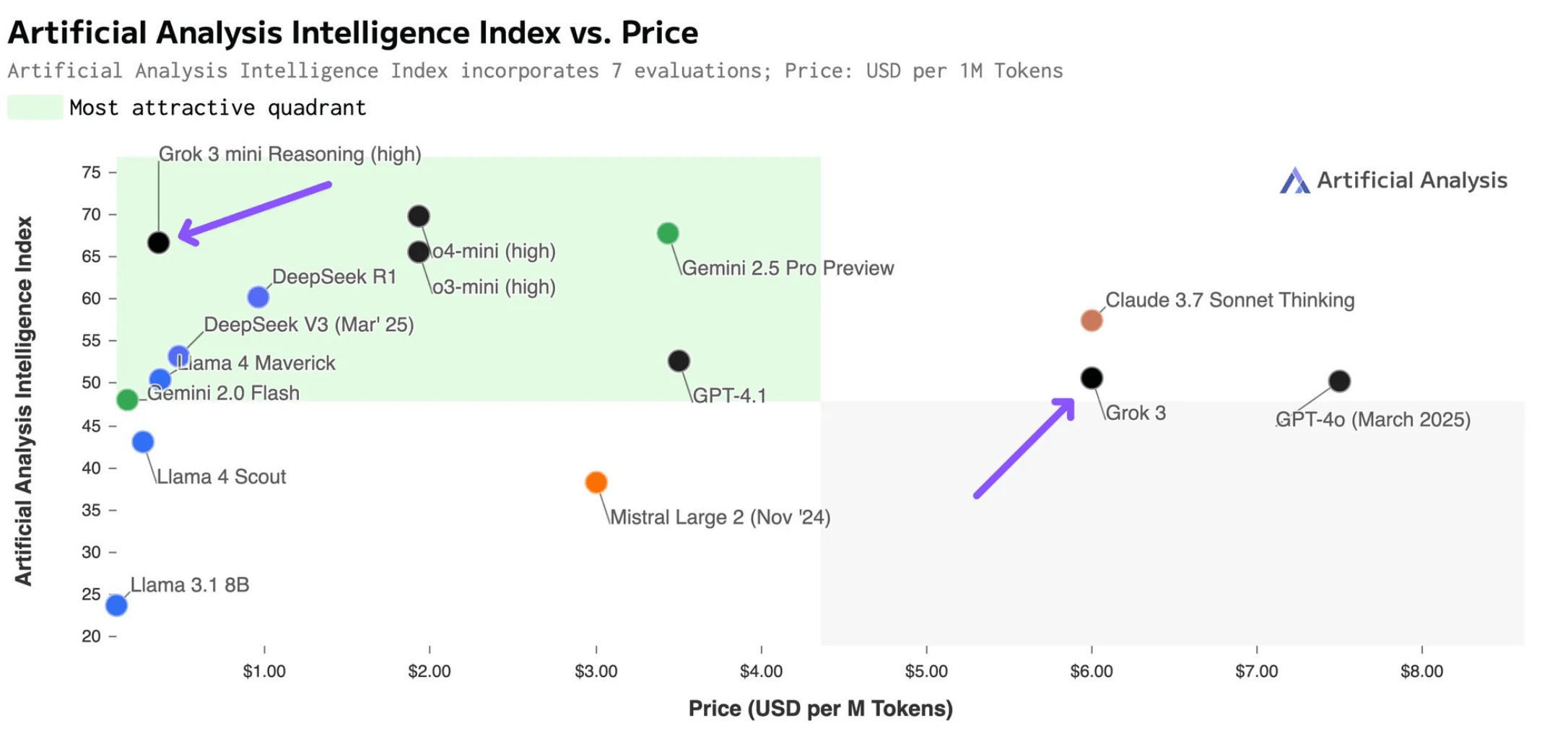

The Artificial Analysis team benchmarked the Grok 3 family and highlighted Grok 3 Mini Reasoning (high) for its price/performance ratio. According to their "Artificial Analysis Intelligence Index," Grok 3 Mini Reasoning (high) outperforms models like DeepSeek R1 and Claude 3.7 Sonnet (64k reasoning budget), all while maintaining a steep cost advantage.

With pricing at $0.30 per million input tokens and $0.50 per million output tokens, it’s almost an order of magnitude below models like OpenAI’s o4-mini or Google’s Gemini 2.5 Pro. For those who need more speed, a faster version is available at $0.60 per million input tokens and $4.00 per million output tokens.
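To make the per-token prices concrete, the following sketch computes the bill for a sample workload on both tiers. The prices come from the figures above; the workload (2 million input tokens and 1 million output tokens) and the class and variable names are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Prices in US dollars per million tokens."""
    input_per_million: float
    output_per_million: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Total cost in dollars for the given token counts."""
        total = (input_tokens * self.input_per_million
                 + output_tokens * self.output_per_million)
        return total / 1_000_000


# Prices as quoted in the article.
GROK_3_MINI = Pricing(input_per_million=0.30, output_per_million=0.50)
GROK_3_MINI_FAST = Pricing(input_per_million=0.60, output_per_million=4.00)

# Hypothetical workload: 2M input tokens, 1M output tokens.
standard_cost = GROK_3_MINI.cost(2_000_000, 1_000_000)
fast_cost = GROK_3_MINI_FAST.cost(2_000_000, 1_000_000)

print(f"Standard: ${standard_cost:.2f}")  # Standard: $1.10
print(f"Fast:     ${fast_cost:.2f}")      # Fast:     $5.20
```

Because the fast tier raises the output price eightfold but the input price only twofold, the gap between the tiers widens for output-heavy workloads, which reasoning models tend to produce.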

The results here center on the "intelligence" metric, which combines six different benchmarks. A detailed breakdown for each is on the way, though—as always—benchmark scores don’t necessarily reflect real-world performance. Smaller models especially can put up impressive numbers that don’t always carry over to everyday use.

When it comes to raw speed, Grok 3 outpaces its more reasoning-focused Mini counterpart: on standard endpoints, Grok 3 generates 500 tokens in about 9.5 seconds, while Grok 3 Mini Reasoning takes 27.4 seconds.
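Those timings translate into effective generation rates with simple arithmetic, sketched below. The function name is hypothetical, and the result is an end-to-end rate over the full 500 tokens, so it also absorbs any waiting time before the first token appears.

```python
def tokens_per_second(tokens: int, seconds: float) -> float:
    """Effective end-to-end generation rate."""
    return tokens / seconds


# Timings as reported in the article for 500 generated tokens.
grok_3_rate = tokens_per_second(500, 9.5)
grok_3_mini_rate = tokens_per_second(500, 27.4)
slowdown = 27.4 / 9.5

print(f"Grok 3:                 {grok_3_rate:.1f} tokens/s")       # 52.6
print(f"Grok 3 Mini Reasoning:  {grok_3_mini_rate:.1f} tokens/s")  # 18.2
print(f"Mini takes {slowdown:.1f}x as long")                       # 2.9x
```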

Artificial Analysis ranks Grok 3 and Grok 3 Mini Reasoning (high) in the top five of their respective categories—non-reasoning and reasoning—and notes that, with these releases, xAI has firmly established itself among the leaders in the current AI model landscape.
