Midjourney expands image control with Omni-Reference tool

Key Points

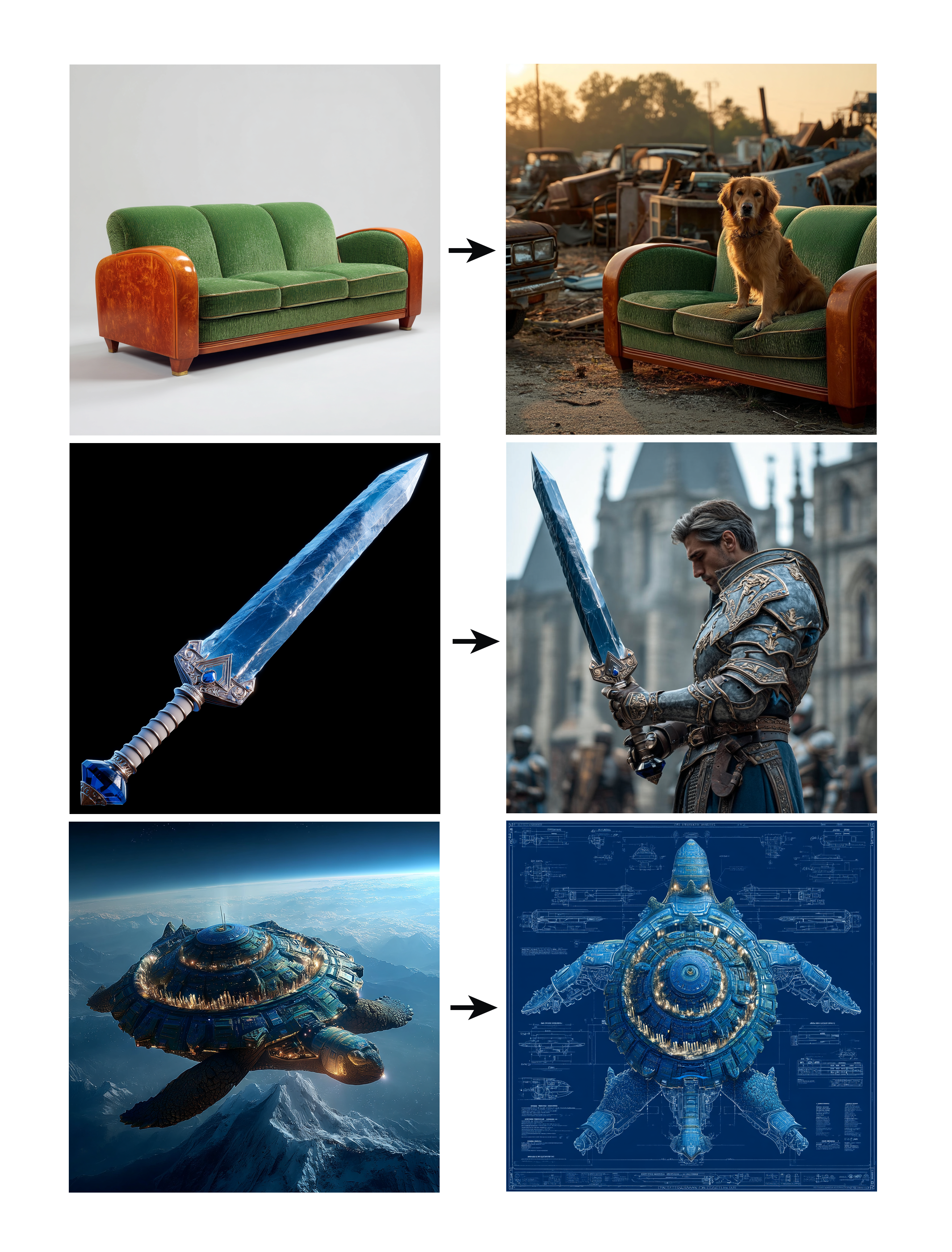

- Midjourney has released "Omni-Reference," a new feature that lets users incorporate specific visual elements like characters or objects from a reference image into their generated images.

- The tool is available via the web interface with drag-and-drop and sliders, or on Discord with the --oref parameter for the image URL and --ow to adjust how strongly the reference is applied, on a scale from 0 to 1000 (default 100).

- Omni-Reference supports multiple references and can be combined with style references, but it currently does not work in the new draft mode.

Midjourney is rolling out an experimental feature called Omni-Reference, designed to give users granular control over which visual elements appear in generated images.

The system replaces the older "Character Reference" tools from version 6 and significantly expands what can be directed—users can now specify anything from particular characters to individual objects. For example, prompts like "Place exactly this object in my image" are now possible.

According to Midjourney, Omni-Reference works with both human and non-human subjects. The feature is still in testing, with additional updates and improvements expected in future releases.

Using Omni-Reference on web and Discord

On the web interface, users can drag and drop an image into the prompt bar, placing it in the area labeled "omni-reference." A slider controls how strongly the system should use the reference. On Discord, the feature is accessed with the --oref parameter, followed by the image URL. Reference strength is adjusted using --ow.

The --ow (Omni-Weight) parameter determines how strongly Midjourney incorporates the specified image reference. This value ranges from 0 up to 1000, with a default of 100. For style transfers—such as turning a photo into an anime-style image—Midjourney suggests using lower values like --ow 25. To preserve more specific visual features, such as a particular face or outfit, higher values like --ow 400 are recommended.
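The following minimal Python sketch shows how the Discord syntax fits together. It only assembles a prompt string and does not call any Midjourney service. The function name `build_oref_prompt` and the example URLs are hypothetical. The 0 to 1000 range and the default of 100 are the values reported above.

```python
# Hypothetical helper, not part of any Midjourney API: it only assembles
# the text of a Discord prompt using the --oref and --ow parameters.

OW_MIN, OW_MAX, OW_DEFAULT = 0, 1000, 100  # range and default as reported


def build_oref_prompt(text: str, image_url: str, weight: int = OW_DEFAULT) -> str:
    """Return a prompt string with an Omni-Reference image and weight."""
    if not OW_MIN <= weight <= OW_MAX:
        raise ValueError(f"--ow must be between {OW_MIN} and {OW_MAX}, got {weight}")
    parts = [text.strip(), f"--oref {image_url}"]
    # Omit --ow when it equals the default, since the default applies anyway.
    if weight != OW_DEFAULT:
        parts.append(f"--ow {weight}")
    return " ".join(parts)


if __name__ == "__main__":
    # Low weight for a style transfer, high weight to keep a face or outfit.
    print(build_oref_prompt("anime-style portrait", "https://example.com/photo.png", 25))
    print(build_oref_prompt("a woman in a red coat", "https://example.com/ref.png", 400))
```

Leaving out --ow at the default value is a stylistic choice. Writing --ow 100 explicitly should have the same effect, assuming the default works as described.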

Additional parameters, including --stylize and --exp, can also influence the final image. These settings interact with Omni-Reference, so higher --stylize or --exp values may require a higher Omni-Weight to maintain the desired result. Midjourney advises staying at or below roughly --ow 400 unless there is a clear need to go higher, since image quality can degrade at higher settings.

Omni-Reference is compatible with style references, personalization features, and mood boards. To depict specific actions or combinations, such as a character interacting with an object, users need to name those elements clearly in the prompt. For instance, "a character holding a sword --oref sword.png" helps ensure that both elements appear. If the reference weight is set low, adding descriptive details to the prompt helps preserve key visual features.

The system supports multiple references simultaneously, such as including two characters from different images, as long as both are mentioned in the prompt. Omni-Reference is currently not available in the new draft mode for version 7.
