Bytedance launches Agent TARS, an open-source AI automation agent

Key Points

- Bytedance has introduced Agent TARS, an experimental open-source automation tool that visually processes web pages and interacts with the command line and file system, currently limited to macOS.

- The system uses an event stream interface to provide real-time feedback and lets users intervene or add instructions while tasks are underway. It connects to tools like text editors using Anthropic's Model Context Protocol and supports exporting session data.

- The app is in a technical preview stage, with unreliable support for some models. Developers invite feedback and contributions as they continue development.

Agent TARS is Bytedance's new open-source approach for automating complex tasks by visually interpreting web content and interacting with the command line and file system. TARS is still in an experimental phase and is currently available only for macOS.

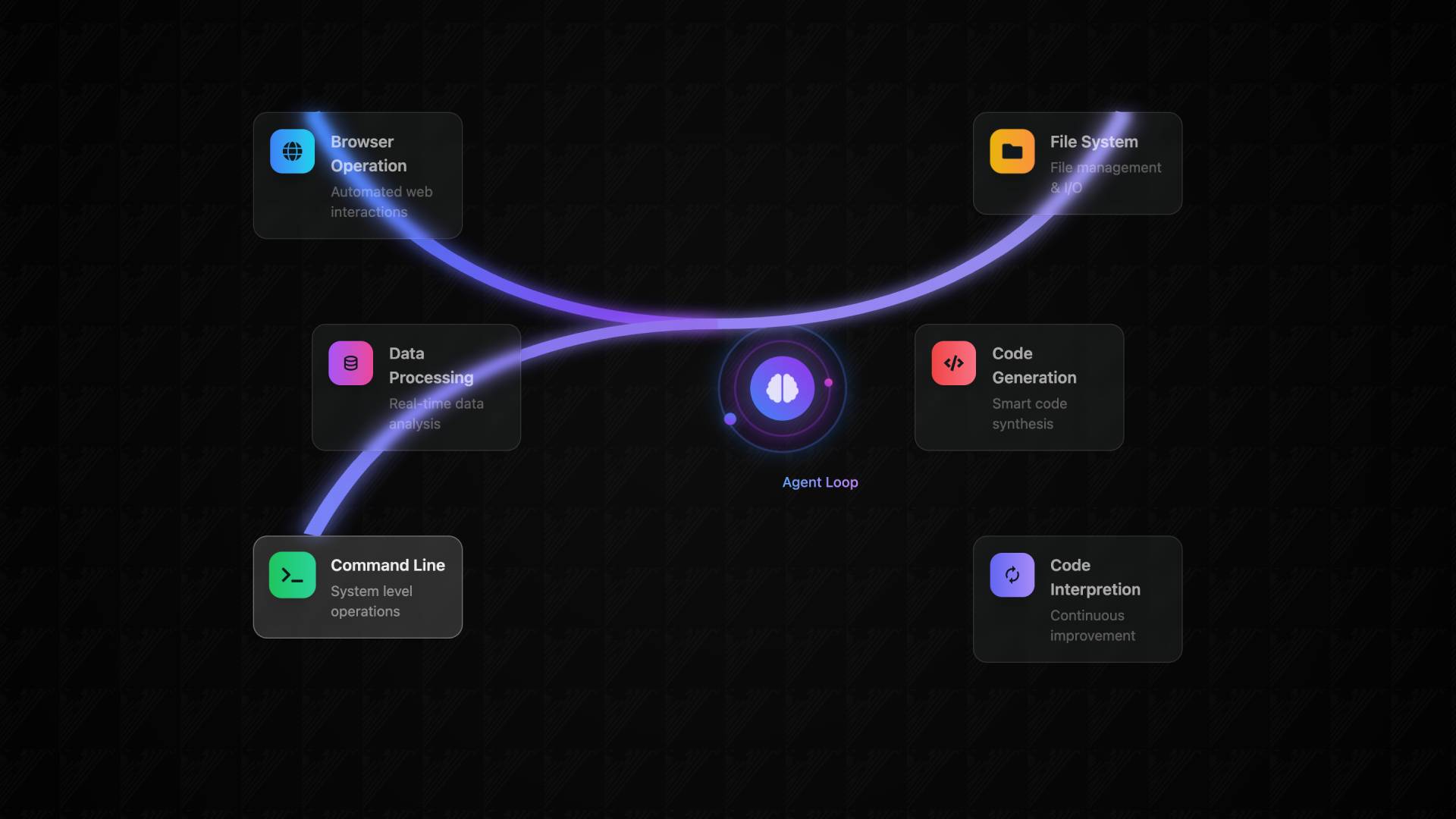

Developed by Bytedance, the company behind TikTok, Agent TARS uses an agent-based framework that can automatically plan and carry out processes like searching, browsing, and navigating links. Communication with the user interface happens through an event stream, allowing users to see intermediate statuses and results in real time.

Video: Bytedance

Agent TARS processes webpages visually and relies on Anthropic's Model Context Protocol (MCP) to connect with tools like text editors, the command line, and file systems. A Windows version is in development.
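MCP messages use JSON-RPC 2.0. A client (here, the agent) exchanges these messages with servers that expose tools. The sketch below illustrates the protocol in general and is not Agent TARS code. It builds a `tools/call` request. The tool name `read_file` and its `path` argument are hypothetical, because each MCP server advertises its own tools through `tools/list`.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids
_ids = count(1)

def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)

# Hypothetical tool exposed by a file-system MCP server
request = make_tool_call("read_file", {"path": "notes.txt"})
print(request)
```

For brevity, the sketch omits the transport layer (stdio or HTTP) and the initialization handshake that precedes any tool call.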

Multimodal interface with real-time feedback

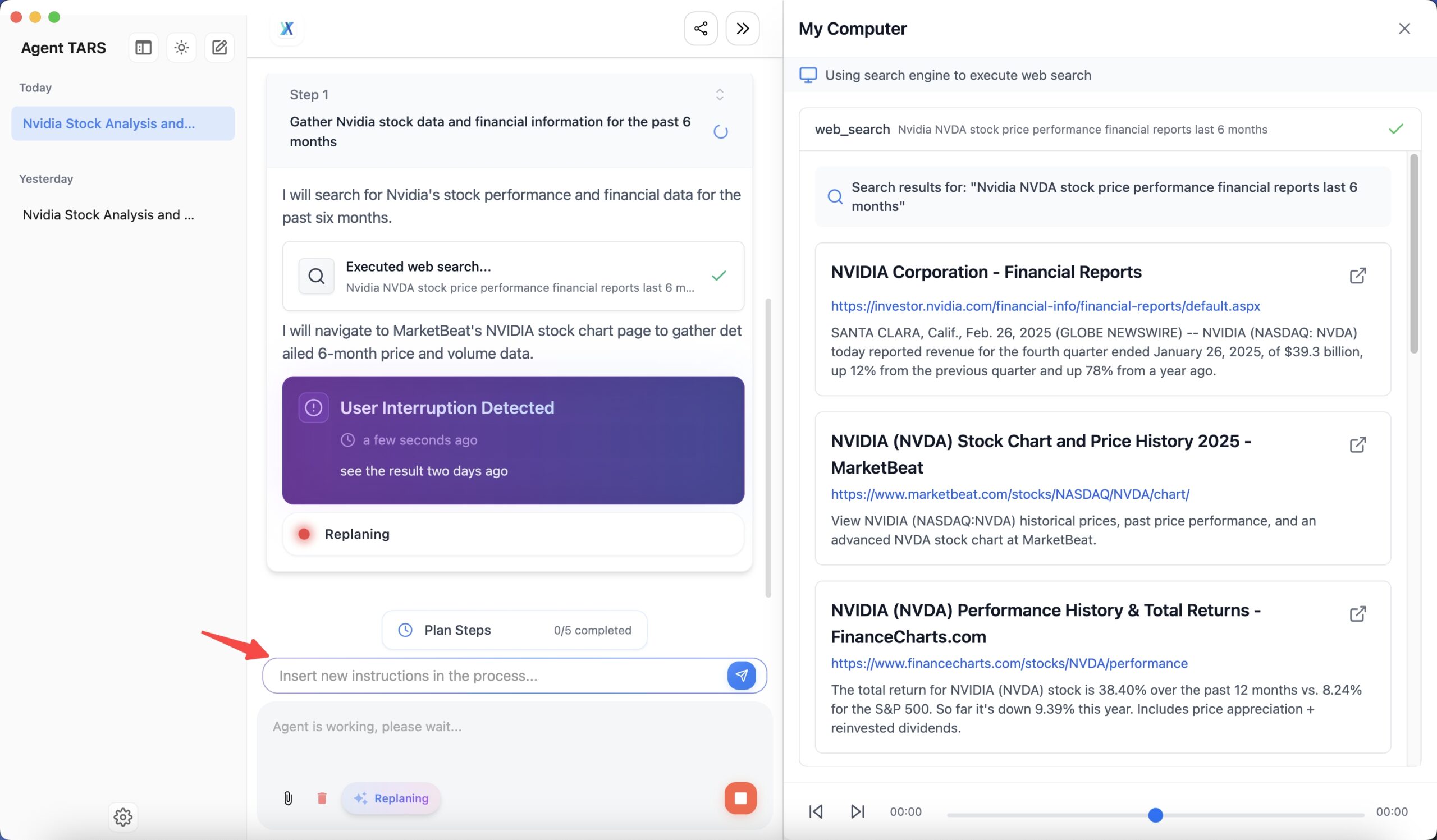

The interface offers a live view of everything the agent is doing, including open documents, browser windows, and other process artifacts. Users can jump in at any moment by adding new instructions, letting them guide the agent's workflow as it runs.
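One way to picture the event-stream design is as a loop that emits a status event for each step and checks for new user instructions between steps. The following is a minimal sketch under that assumption. The `Event` type, the event kinds, and `run_agent` are hypothetical names, not Agent TARS's actual interface.

```python
from collections import deque
from collections.abc import Iterator
from dataclasses import dataclass

@dataclass
class Event:
    kind: str     # hypothetical labels: "plan", "step", "user", "done"
    payload: str

def run_agent(plan: list[str], inbox: deque) -> Iterator[Event]:
    """Yield events as the agent works, folding in user instructions between steps."""
    yield Event("plan", ", ".join(plan))
    steps = deque(plan)
    while steps:
        # Instructions the user added mid-run are picked up before the next step
        while inbox:
            instruction = inbox.popleft()
            yield Event("user", instruction)
            steps.append(instruction)
        step = steps.popleft()
        yield Event("step", f"executing: {step}")
    yield Event("done", "task finished")

inbox: deque[str] = deque()
log = []
for event in run_agent(["search", "browse"], inbox):
    log.append(event)
    print(f"[{event.kind}] {event.payload}")
    # Simulate the user stepping in right after the first step is reported
    if event.kind == "step" and event.payload.endswith("search"):
        inbox.append("summarize results")
```

In this sketch, a late instruction goes to the end of the plan. A real agent might instead re-plan or prioritize it, which this sketch does not attempt.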

Several hands-on examples are available on the project website, including a technical analysis of Tesla's share price, an overview of trending Product Hunt projects, a bug report for the Lynx repository, and a week-long travel itinerary for Mexico City.

Users can export their entire agent session either as a local HTML file or by uploading it to an external server. If uploaded, the app sends a POST request with the HTML bundle and the server returns a shareable link.
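The article does not document the upload format. The following Python sketch therefore only illustrates the round trip it describes: POST the exported HTML and receive a link back. The endpoint URL, the raw `text/html` body, and the JSON reply with a `url` field are all assumptions.

```python
import json
import urllib.request

# Hypothetical upload endpoint; not a real Agent TARS service
SHARE_ENDPOINT = "https://share.example.com/upload"

def build_share_request(html: str, endpoint: str = SHARE_ENDPOINT) -> urllib.request.Request:
    """Wrap an exported session's HTML in a POST request (assumed raw-body format)."""
    return urllib.request.Request(
        endpoint,
        data=html.encode("utf-8"),
        headers={"Content-Type": "text/html; charset=utf-8"},
        method="POST",
    )

def parse_share_response(body: bytes) -> str:
    """Pull the shareable link out of an assumed JSON reply like {"url": "..."}."""
    return json.loads(body)["url"]

def share_session(html: str, endpoint: str = SHARE_ENDPOINT) -> str:
    """Send the request and return the link the server hands back."""
    request = build_share_request(html, endpoint)
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_share_response(response.read())

# Offline demo: inspect the request and parse a canned reply instead of hitting the network
req = build_share_request("<html><body>session log</body></html>")
link = parse_share_response(b'{"url": "https://share.example.com/s/abc123"}')
print(req.get_method(), req.full_url)
print(link)
```

A real service might expect a multipart form upload instead of a raw body. In that case, only `build_share_request` would need to change.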

After installing Agent TARS from GitHub, users need to set up API keys for their preferred model and search services. Azure OpenAI integration requires extra parameters such as apiVersion or deploymentName. Right now, Agent TARS works best with Anthropic's Claude, which the developers describe as the best option for the time being. Support for OpenAI models is still unstable.

Not to be confused with UI TARS Desktop

In a recent blog post, the developers addressed confusion between Agent TARS and UI TARS Desktop. UI TARS Desktop is designed for automating system-level graphical user interfaces and uses its own UI TARS model.

UI TARS Desktop runs on both macOS and Windows, while Agent TARS focuses on browser-based automation and is currently available only for macOS. The two apps serve different purposes and aren't compatible with each other.

Agent TARS is in technical preview and not recommended for production use at this stage. The development team encourages feedback, bug reports, and contributions via GitHub, Discord, or X. More technical details and roadmap updates are expected as Bytedance works toward an open platform for multimodal, agent-driven task automation.

Standalone AI agents powered by multimodal language models are gaining traction as a way to automate repetitive digital tasks. Companies like OpenAI, Manus, and Google already offer similar agents or are preparing to launch them. Despite the buzz, these systems still struggle with unpredictable behavior.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.