Are AI images from Stable Diffusion, DALL-E 2 and co. really unique?

Key Points

- The debate over copyright infringement from training datasets for image AI gains a new perspective.

- Researchers have studied how often diffusion models reproduce content from their training material.

- In their view, these instances occur frequently enough that the existence of AI image copying cannot be denied.

To what extent do AI images stand out from their training material? A study of diffusion models aims to provide an answer to this question.

Debates about AI art that Stable Diffusion, DALL-E 2, and Midjourney do or do not create have accompanied the tools since their inception. The most intense debate is probably the one about copyright, especially regarding the training material used. Recently, the popular prompt "trending on ArtStation" has been at the center of protests against AI images. It is based on the imitation of artworks that are popular on ArtStation.

Large and extensively trained diffusion models are supposed to ensure that each new prompt produces a unique image that is far removed from the originals in the training data. But is this really the case?

Data set size and replication are related

Researchers at New York University and the University of Maryland have tackled this question in a new paper titled "Diffusion Art or Digital Forgery?

They examine different diffusion models trained on different datasets such as Oxford Flowers, Celeb-A, ImageNet, and LAION. They wanted to find out how factors such as the amount of training data and training affect the rate of image replication.

At first glance, the results of the study are not surprising: diffusion models trained on smaller data sets are more likely to produce images that are copied from or very similar to the training data. The amount of replication decreases as the size of the training set increases.

Study examined only a small portion of training data

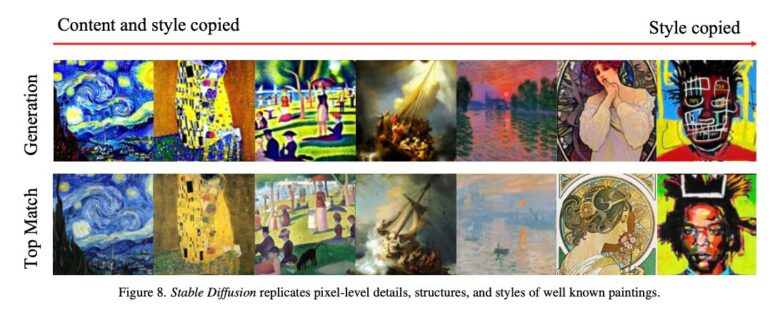

Using the twelve-million-image "12M LAION Aesthetics v2 6+", the researchers examined only a small section of the two-billion-image Stable Diffusion training dataset. They found that models like Stable Diffusion in some cases "blatantly copy" from their training data.

However, the near-exact reproduction of training data is not inevitable, as older studies on generative models such as GANs have shown, the paper states. The team confirms this with a latent diffusion model (LDM) trained with ImageNet, where there is no evidence of significant data replication. So, what does Stable Diffusion do differently?

Copies do not happen often, but often enough

The researchers suspect that the replication behavior in Stable Diffusion results from a complex interaction of factors, such as the model being text-conditioned rather than class-conditioned and the dataset used for training having a skewed distribution of image repetitions.

In the random tests, on average, about two out of 100 generated images were very similar to the images from the dataset (similarity score > 0.5).

The goal of this study was to evaluate whether diffusion models are capable of reproducing high-fidelity content from their training data, and we find that they are. While typical images from large-scale models do not appear to contain copied content that was detectable using our feature extractors, copies do appear to occur often enough that their presence cannot be safely ignored.

From the paper

Since only 0.6 percent of Stable Diffusion's training data was used for testing, there are numerous examples that can only be found in the larger models, the researchers write. In addition, the methods used may not detect all cases of data replication.

For these two reasons, the paper states, "the results here systematically underestimate the amount of replication in Stable Diffusion and other models."

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe now