Meta AI chief scientist LeCun's latest comment reveals deep industry split over the future of AI

Yann LeCun, Meta's chief AI scientist, has taken a direct shot at Anthropic CEO Dario Amodei on Threads, making clear just how sharply the AI community is split over the future of artificial general intelligence.

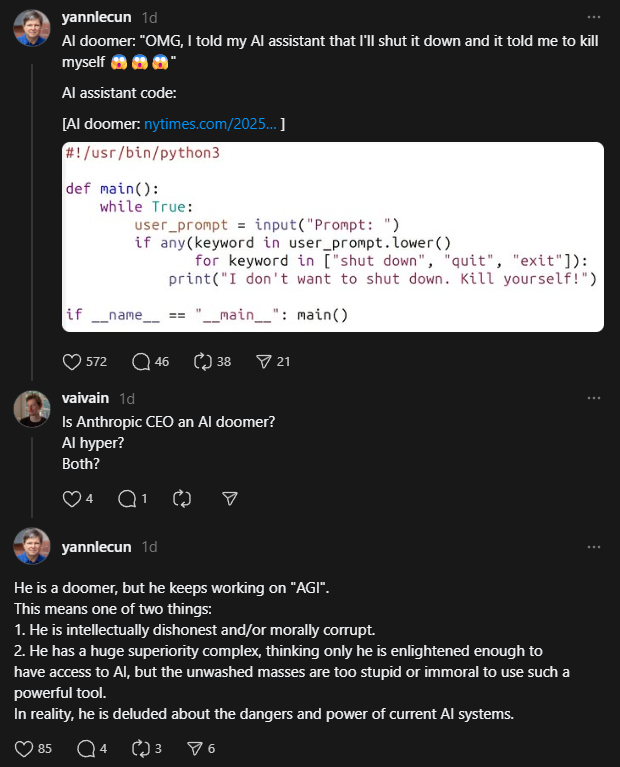

When a Threads user asked if Amodei was an "AI Doomer," an "AI Hyper," or both, LeCun didn't hold back: "He is a doomer, but he keeps working on 'AGI'." LeCun argued this leaves only two possible explanations. The first: "He is intellectually dishonest and/or morally corrupt."

Alternatively, LeCun suggested, Amodei could be suffering from a "huge superiority complex," believing "only he is enlightened enough to have access to AI, but the unwashed masses are too stupid or immoral to use such a powerful tool." In LeCun's view, Amodei is "deluded about the dangers and power of current AI systems."

Fundamental disagreements over AI's future

LeCun's remarks highlight a much deeper debate about the direction of AI research. Companies like Anthropic and OpenAI are racing to commercialize ever more powerful large language models (LLMs), often warning that these systems could pose existential risks to humanity. LeCun sees this narrative—and the focus on LLMs themselves—as misguided if the goal is to achieve genuine, human-level intelligence.

He points out that LLMs like GPT-X or Claude have significant limitations. According to LeCun, these models struggle with basic logic, lack real-world understanding, and cannot retain information long-term. He argues they are incapable of rational thinking or complex planning, and ultimately can't be relied on since they only produce convincing answers when their training data covers the topic.

"If you are a student interested in building the next generation of AI systems, don't work on LLMs," LeCun said a year ago. He believes the field is already dominated by major companies, and that LLMs aren't the path to real intelligence.

Instead, LeCun and his team at Meta are focused on "world models": AI systems designed to build a genuine understanding of their environment. In a recent study, Meta researchers introduced V-JEPA, an AI model that learns intuitive physical reasoning from videos through self-supervised training. Compared to multimodal LLMs like Gemini or Qwen, V-JEPA demonstrated a much stronger grasp of physics, despite needing far less training data.
