Google launches Gemma 3n, a multimodal AI model built for real-time use on mobile devices

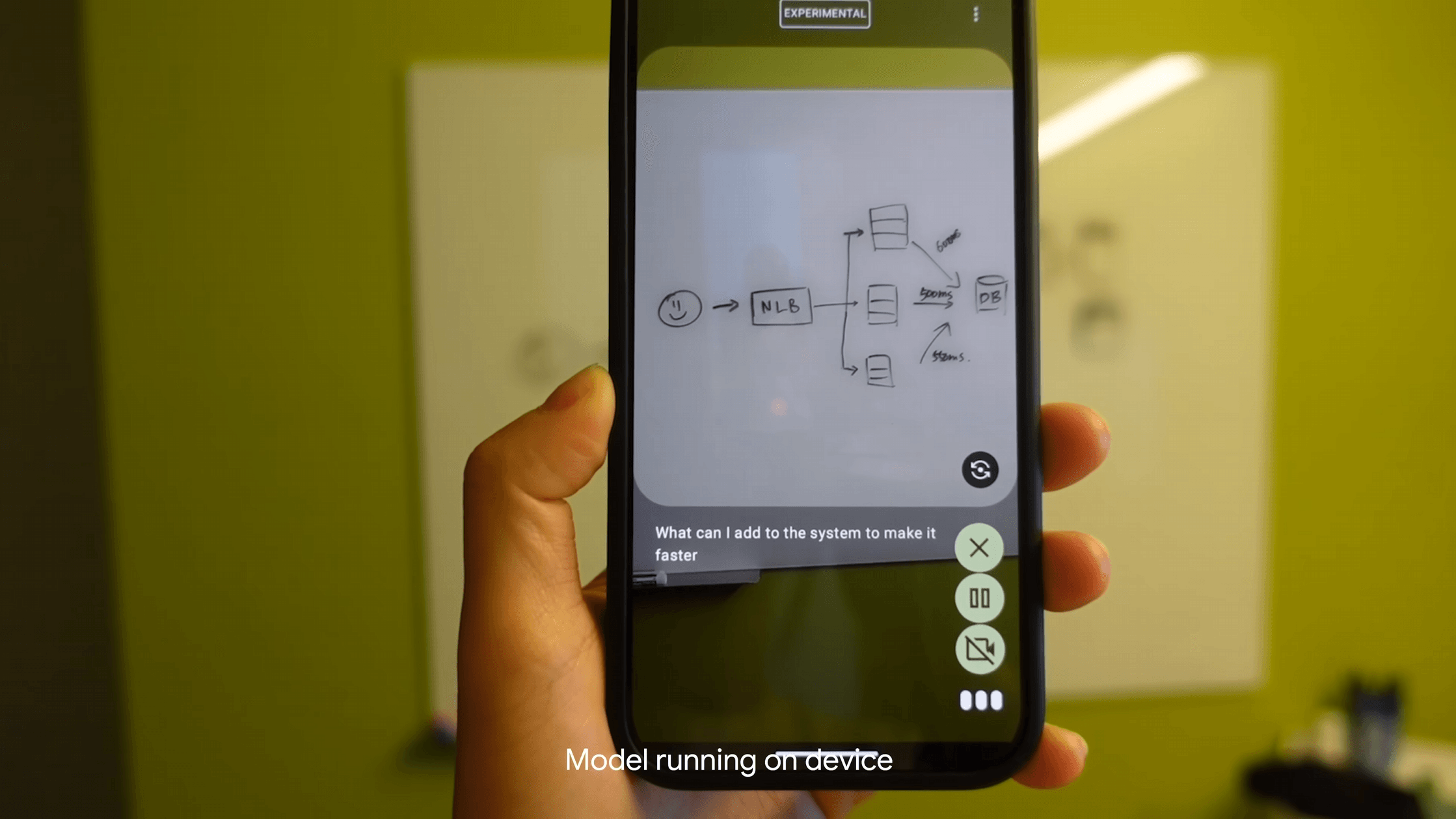

Google has introduced Gemma 3n, a multimodal AI model designed specifically for mobile devices.

Gemma 3n natively supports image, audio, video, and text inputs. It can generate text in up to 140 languages and handle multimodal tasks in 35 languages. The model comes in two sizes: E2B with 5 billion parameters and E4B with 8 billion. Thanks to architectural optimizations, E2B requires only 2 GB of RAM and E4B just 3 GB, with both models available in various quantized formats and sizes.

At the core of Gemma 3n is the MatFormer architecture, a nested transformer approach inspired by Matryoshka dolls. According to Google, the larger E4B model actually contains a fully functional E2B model inside. Developers can use either variant directly or create custom model sizes using the Mix-n-Match method, which allows individual layers to be disabled and the feedforward dimension to be adjusted.

The MatFormer architecture is also designed to enable dynamic switching between model sizes at runtime in the future, letting developers balance performance and memory usage based on device conditions.

Another key feature in Gemma 3n is the use of Per-Layer Embeddings (PLE). This technique computes embeddings for each layer on the CPU, while only the core weights stay on the GPU or TPU, reducing accelerator memory requirements to around 2 billion (E2B) or 4 billion (E4B) parameters.

Audio and vision: real-time processing on mobile devices

Gemma 3n processes audio using an encoder based on Google's Universal Speech Model (USM). Every 160 milliseconds, a chunk of audio is converted to a single token, enabling on-device applications like automatic speech recognition (ASR) and automatic speech translation (AST). For example, it can translate between English and various Romance languages. Audio clips can be up to 30 seconds long for now, with longer durations possible through further training.

For image and video tasks, Gemma 3n uses the new MobileNet-V5-300M encoder, which Google says can handle resolutions up to 768x768 pixels and analyze up to 60 images per second on a Google Pixel smartphone. The architecture builds on previous MobileNet-V4 models, but is larger and more powerful. Google claims the quantized MobileNet-V5 is 13 times faster than before, with nearly half the parameters and just a quarter of the memory footprint.

Benchmark results and open access

The E4B model scores over 1300 points in the LMArena benchmark, setting a new record for models under 10 billion parameters. Mix-n-Match intermediate variants also perform well on benchmarks like MMLU, according to Google. However, a quick test by developer Simon Willison found significant differences between the various quantized versions.

To coincide with the release, Google is launching the Gemma 3n Impact Challenge, looking for applications that use the model's multimodal and offline capabilities to address real-world problems. The challenge offers total prize money of $150,000.

Gemma 3n is available for download now on platforms like Hugging Face and Kaggle, and is compatible with tools such as Hugging Face Transformers, llama.cpp, Docker, and MLX. The models can also be deployed directly through Google AI Studio, Cloud Run, or Vertex AI.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.