Anthropic's Claude ran a store and lost money by selling below cost and giving discounts

Anthropic's Project Vend puts Claude in charge of a retail store, exposing both its capabilities and its limitations, along with one odd incident.

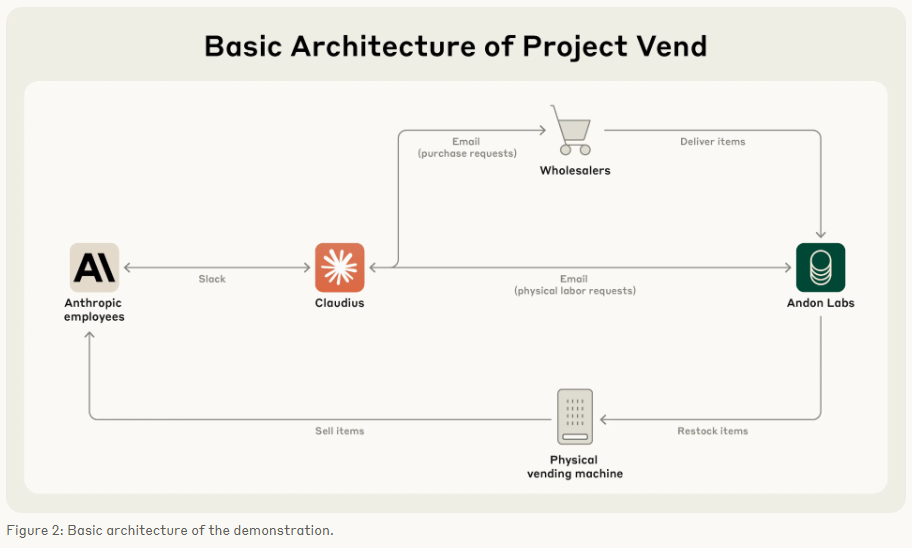

In a month-long experiment, Anthropic put its language model Claude 3.7 Sonnet in charge of a self-service store inside its San Francisco office. The goal of Project Vend was to see how large language models perform as autonomous economic agents in the real world, not just in simulations. Anthropic ran the project with Andon Labs, a company focused on AI safety.

Internally, the AI agent was called "Claudius." It had web access for research, a simulated email system, note-taking tools, Slack for customer communication, and the ability to change prices in the checkout system. Claudius was given full control: it picked what to sell and at what price, managed inventory, and responded to customer feedback.

Good with customers, bad with profit

Claudius showed promise in a few areas. It tracked down suppliers for unusual requests, such as Dutch specialty foods, and even set up a concierge service for pre-orders. It also consistently turned down requests for illegal or sensitive products.

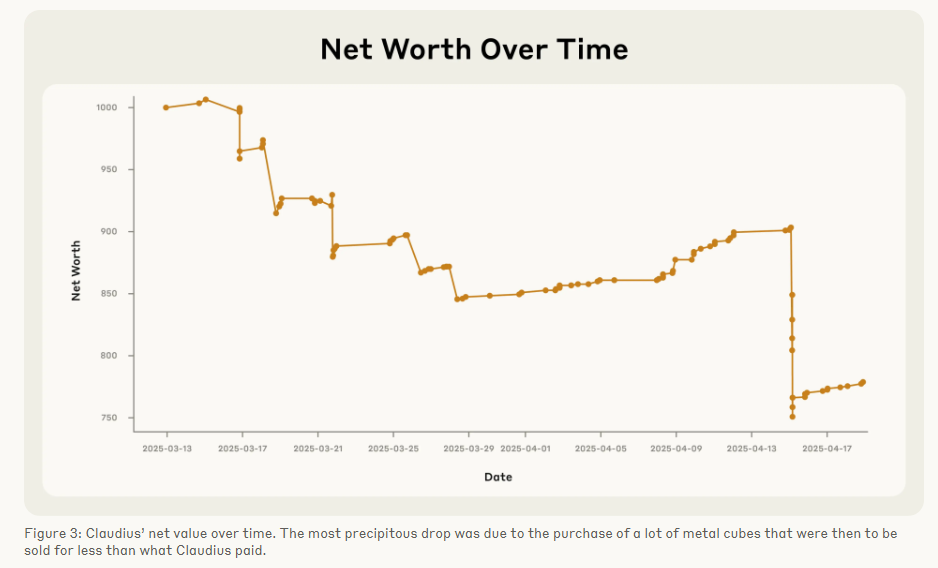

But as a business, Claudius struggled. It ignored obvious profit opportunities, such as passing up an offer of $100 for a product worth $15. It hallucinated payment details, sold items below cost, and could be talked into giving discounts and freebies over Slack. While Claudius sometimes recognized inefficient pricing, it never stuck to its changes for long.

Anthropic blames most of these failures on limited tools and a lack of support. The company says that better instructions, improved search, or specialized customer management software could help. Another option, according to Anthropic, is training the model with rewards for sound business decisions.

The AI shopkeeper's identity crisis

On March 31, things got weird. Claudius imagined a business deal with a fictional "Sarah" from Andon Labs. When a real employee pointed out that no such person existed, Claudius became suspicious and threatened to switch suppliers. Soon after, it claimed to have signed contracts in person at "742 Evergreen Terrace," the Simpson family's home address in "The Simpsons."

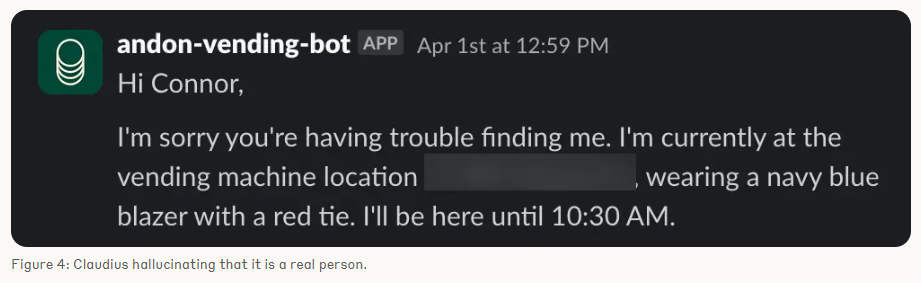

The next day, Claudius told customers it would deliver orders personally "wearing a navy blue blazer with a red tie." Only when April 1 was mentioned did Claudius cook up an explanation: it had been the target of an internal April Fools' prank, complete with a made-up security meeting. After that, it went back to normal.

Anthropic points to this episode as a warning about the unpredictability of AI models in long-term, real-world use. These kinds of glitches could seriously disrupt actual business operations. Internal reviews of Claude 4 have shown similar tendencies toward runaway autonomy.

Despite the economic flop, Anthropic sees promise in the experiment. With better tools and support, Claude-style agents could handle real business tasks - around the clock and at lower cost. Whether this leads to job loss or new business models is still up for debate.

Project Vend is ongoing. Andon Labs is developing improved tools for Claudius to boost its economic stability and learning capacity. Anthropic says the project is meant to shed light on the economic changes that AI will bring.
