Mistral AI publishes the first comprehensive life cycle assessment of a large language model

Key Points

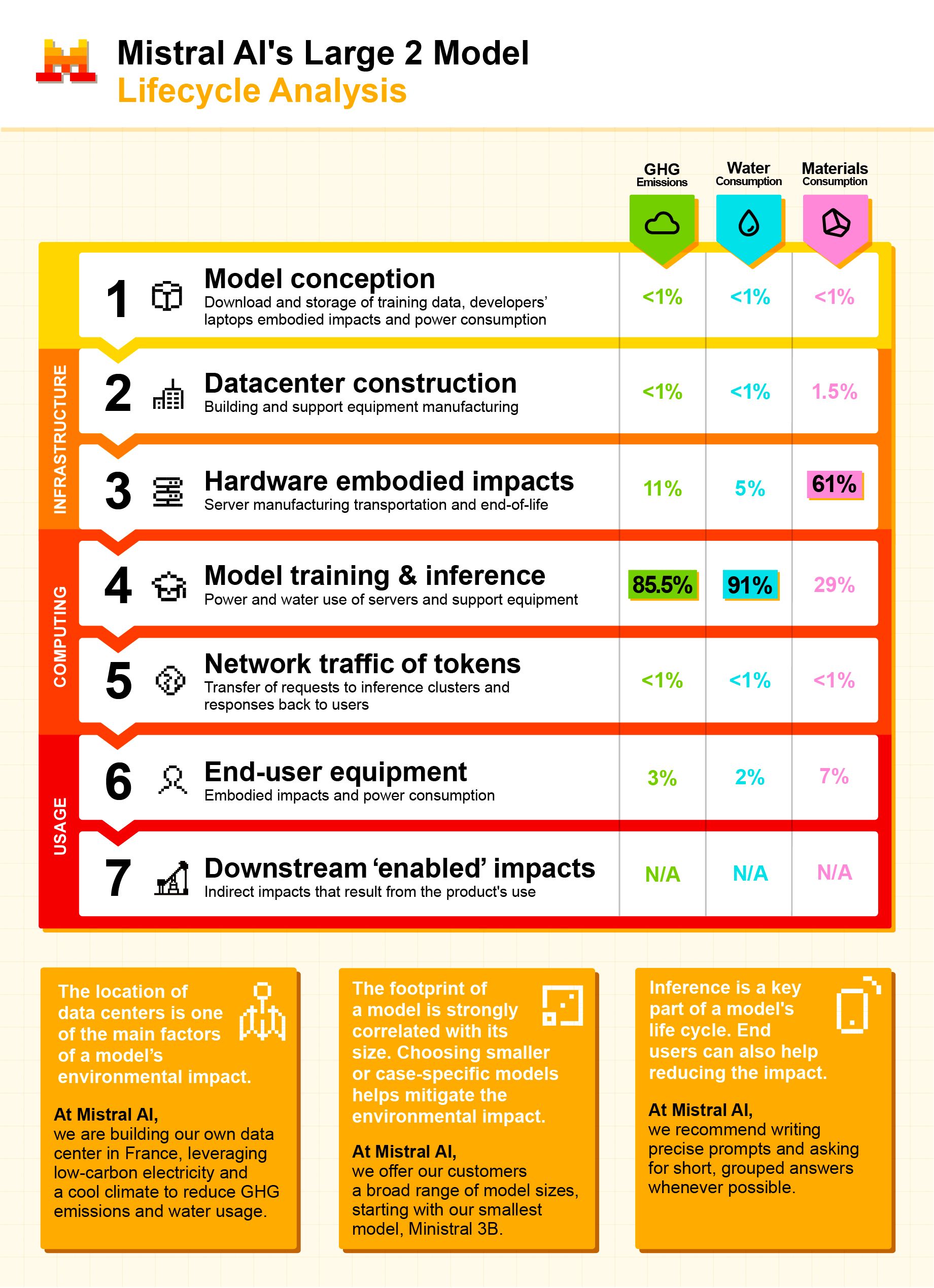

- Mistral AI has published a full life cycle analysis for its Mistral Large 2 language model, revealing that training and 18 months of use resulted in 20.4 kilotons of CO₂ equivalents, 281,000 cubic meters of water consumption, and 660 kilograms of antimony equivalents.

- The study finds that environmental impact scales directly with model size. Training is responsible for over 85 percent of greenhouse gas emissions and 91 percent of water consumption, while each individual request generates 1.14 grams of CO₂ equivalents and uses 45 milliliters of water.

- Mistral AI recommends that the industry adopt three key metrics: the absolute impact of training, the impact per inference request, and the ratio of inference impact to total life cycle impact. The company also calls for more transparency, standardized reporting, and greater efficiency in model selection.

Mistral AI has published what it calls the first comprehensive life cycle assessment of a large language model, aiming to set new standards for transparency in the industry.

The report focuses on Mistral's flagship model, Mistral Large 2, and details the environmental costs of both training and 18 months of operation. By January, the model's training and use had produced 20.4 kilotons of CO₂ equivalents, consumed 281,000 cubic meters of water, and depleted resources equivalent to 660 kilograms of antimony. (Antimony equivalents measure the consumption of the rare metals and minerals needed for hardware production.)

To put it in perspective, the team calculated the impact of a single 400-token response from Mistral's Le Chat assistant: each request led to 1.14 grams of CO₂ equivalents, 45 milliliters of water, and 0.16 milligrams of antimony equivalents.

OpenAI CEO Sam Altman recently claimed that an average ChatGPT request uses only 0.32 milliliters of water, which is less than one-hundredth of Mistral's figure. However, since OpenAI hasn't published a transparent breakdown of its environmental impact and Altman only mentioned the statistic in passing, it's unclear how comparable the numbers are.
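The per-request figures lend themselves to quick back-of-the-envelope arithmetic. The Python sketch below is a minimal illustration, not Mistral's methodology: it computes how much larger Mistral's water figure is than the one Altman cited and, assuming impact scales linearly with the number of requests, what one million 400-token responses would add up to. The helper name `scale_to_requests` is hypothetical, and the comparison inherits the caveat above that the two figures may not be measured the same way.

```python
# Per-request figures Mistral reports for one 400-token Le Chat response
MISTRAL_PER_REQUEST = {
    "co2e_g": 1.14,     # grams of CO2 equivalents
    "water_ml": 45.0,   # milliliters of water
    "sb_eq_mg": 0.16,   # milligrams of antimony equivalents
}

# Figure Sam Altman cited for an average ChatGPT request (methodology unpublished)
CHATGPT_WATER_ML = 0.32


def scale_to_requests(per_request: dict[str, float], n: int) -> dict[str, float]:
    """Hypothetical helper: multiply each per-request figure by n (assumes linear scaling)."""
    return {metric: value * n for metric, value in per_request.items()}


ratio = MISTRAL_PER_REQUEST["water_ml"] / CHATGPT_WATER_ML
print(f"Mistral's per-request water figure is about {ratio:.0f}x the ChatGPT figure")

million = scale_to_requests(MISTRAL_PER_REQUEST, 1_000_000)
# Unit conversions: grams -> tonnes, mL -> cubic meters, mg -> kilograms (all divide by 1e6)
print(f"1M requests: {million['co2e_g'] / 1e6:.2f} t CO2e, "
      f"{million['water_ml'] / 1e6:.1f} m3 water, "
      f"{million['sb_eq_mg'] / 1e6:.2f} kg Sb eq")
```

The linear-scaling assumption is a simplification; real per-request impact varies with response length and with the hardware serving the request.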

Mistral's report points out that the massive computational requirements of generative AI, which often runs on GPU clusters in regions with carbon-intensive electricity and sometimes in water-stressed areas, leave a significant environmental footprint. This isn't a new finding, but the scale has grown along with the ongoing AI boom.

The study also shows a clear link between model size and environmental footprint. The larger the model, the greater the impact: a model that's ten times bigger has an environmental cost roughly an order of magnitude higher, assuming the same number of generated tokens. This underscores the need to choose the right model for each use case.
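The size relationship can be expressed as a rough rule of thumb. The sketch below assumes impact is directly proportional to both relative model size and the number of generated tokens, using Mistral's 400-token Le Chat figure as the reference point. The names `scaled_impact` and `size_ratio` are hypothetical, and the report itself states the relationship only approximately.

```python
# Reference point from Mistral's report: one 400-token Le Chat response
REF_TOKENS = 400
REF_CO2E_G = 1.14


def scaled_impact(ref_value: float, size_ratio: float, tokens: int,
                  ref_tokens: int = REF_TOKENS) -> float:
    """Rough estimate assuming impact is proportional to model size and tokens generated.

    size_ratio = (candidate model size) / (reference model size). This proportional
    rule is a simplification of the report's finding, not Mistral's methodology.
    """
    if size_ratio <= 0 or tokens < 0:
        raise ValueError("size_ratio must be positive and tokens non-negative")
    return ref_value * size_ratio * (tokens / ref_tokens)


# Hypothetical models one-tenth and ten times the reference size, same 400-token answer
small = scaled_impact(REF_CO2E_G, size_ratio=0.1, tokens=400)
large = scaled_impact(REF_CO2E_G, size_ratio=10, tokens=400)
print(f"{small:.3f} g vs {large:.1f} g CO2e per response")
```

Under this assumption, the two hypothetical models differ by a factor of 100 in per-response emissions, which is why picking the smallest model that handles a task well matters.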

Three proposed metrics for industry reporting

Based on its findings, Mistral suggests that the industry adopt three main metrics: the total impact of model training, the per-inference (per-request) impact, and the ratio of inference to overall life cycle impact.

Mistral argues that the first two data points should be mandatory to give the public a better understanding of AI's environmental effects. The third could serve as an internal metric, with optional public disclosure, to offer a more complete life cycle view.
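To make the three proposed metrics concrete, the sketch below models a single impact category (for example kilograms of CO₂ equivalents) and derives the third metric from the first two. The class and field names, the `requests_served` count, and the separate `other_lifecycle` term for hardware manufacturing and other stages are illustrative assumptions, not Mistral's reporting format, and the sample numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class ModelImpactReport:
    """Hypothetical report for one impact category, e.g. kg CO2e."""

    training_total: float          # metric 1: absolute impact of training
    per_request: float             # metric 2: impact of one inference request
    requests_served: int           # assumption: needed to total up inference
    other_lifecycle: float = 0.0   # assumption: hardware manufacturing and other stages

    @property
    def inference_total(self) -> float:
        return self.per_request * self.requests_served

    @property
    def lifecycle_total(self) -> float:
        return self.training_total + self.inference_total + self.other_lifecycle

    @property
    def inference_ratio(self) -> float:
        """Metric 3: share of the whole life cycle impact attributable to inference."""
        total = self.lifecycle_total
        if total <= 0:
            raise ValueError("life cycle total must be positive")
        return self.inference_total / total


# Illustrative, made-up numbers (not from Mistral's report)
report = ModelImpactReport(
    training_total=600.0, per_request=0.0015, requests_served=200_000, other_lifecycle=100.0
)
print(f"Inference share of life cycle: {report.inference_ratio:.0%}")
```

Deriving the ratio as a property, rather than storing it, keeps the optional third metric consistent with the two figures Mistral suggests making mandatory.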

The company sees two ways to reduce AI's environmental footprint. First, AI companies should publish their models' environmental impact using internationally recognized standards, making it easier for users to compare and select greener options. Second, users themselves can help by using generative AI more efficiently—choosing the right model for their needs, bundling requests, and avoiding unnecessary computations. Mistral also suggests that public institutions could push the market in a greener direction by considering the efficiency and size of models in their procurement decisions.

Mistral admits that this initial analysis is only a rough estimate. Accurate calculations are tough because there are no established standards for LLM life cycle assessments and no widely available assessment factors. There's also still no reliable life cycle data for GPUs.

The company plans to update its environmental reports in the future and wants to help shape international industry standards. Eventually, the results will be published in the Base Empreinte database, a French reference platform for environmental impact data on products and services.

In the EU, the new AI Act already requires providers of general-purpose AI models to document their energy usage in detail. Developers must produce technical documentation that breaks down the energy consumption of their models, and energy use is one of the factors regulators consider when determining whether a model poses a "systemic risk."

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
