Developers rely on AI tools more than ever, but trust is slipping

AI-generated code is often "almost right, but not quite," and that's wearing on developers. A new survey shows most spend more time fixing AI-generated code than they expected, and still turn to human expertise when it counts.

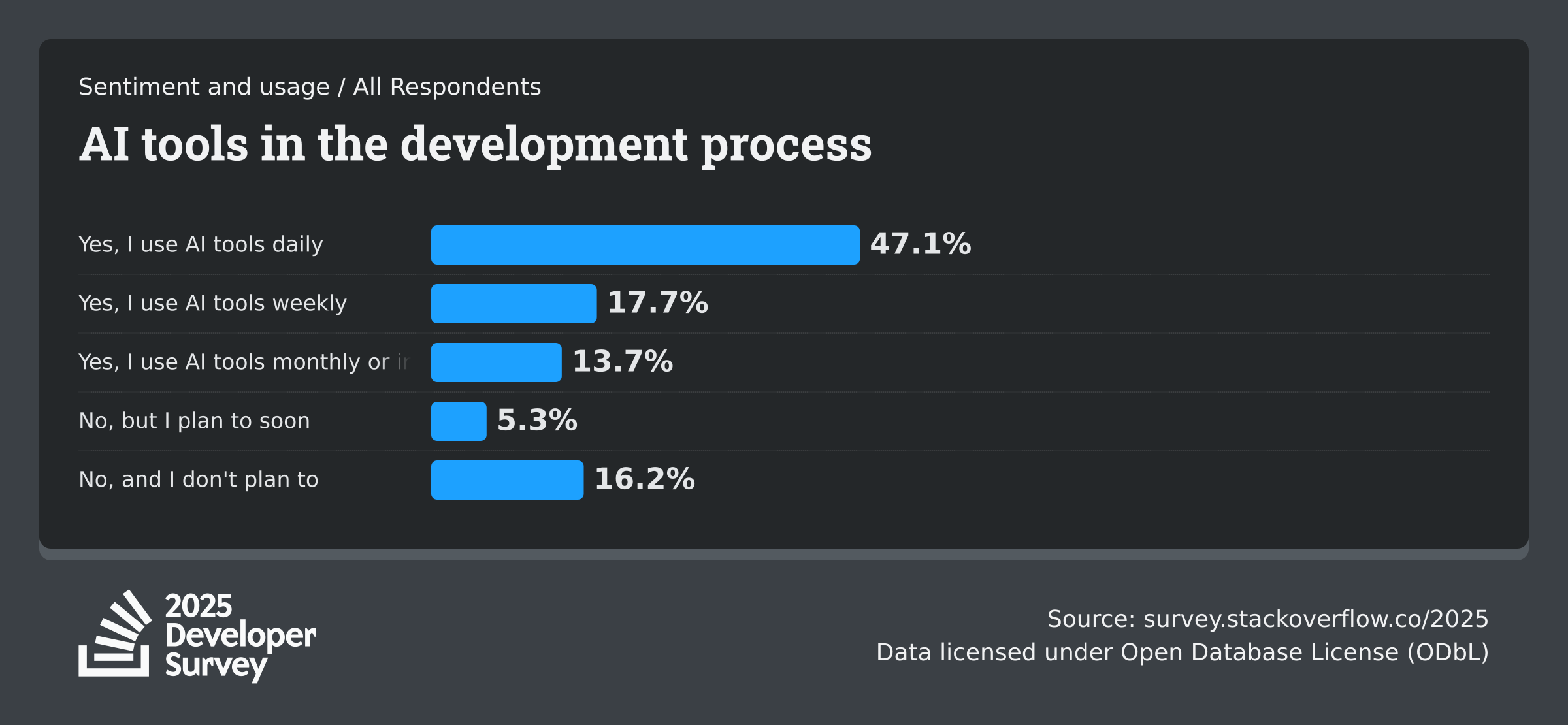

Only 33% of developers say they trust the accuracy of AI-generated code, down from 43% last year, according to the Stack Overflow Developer Survey 2025. Yet adoption keeps rising: of nearly 50,000 developers surveyed, 84% now use or plan to use AI tools, up from 76% the year before. Enthusiasm is fading all the same: more than 70% were positive about AI tools in 2023 and 2024, but that share has dropped to 60% in 2025.

"Almost right" doesn't cut it

Nearly half of developers (46%) say they don't trust the accuracy of AI tools, and just 3% have high trust in their outputs. The divide grows with experience: 20% of veteran developers "highly" distrust AI, while only 3% express strong trust.

Professional developers are somewhat more optimistic, with 61% feeling positive about AI tools compared to 53% of beginners. About half of pros use AI tools daily, with another 18% using them weekly.

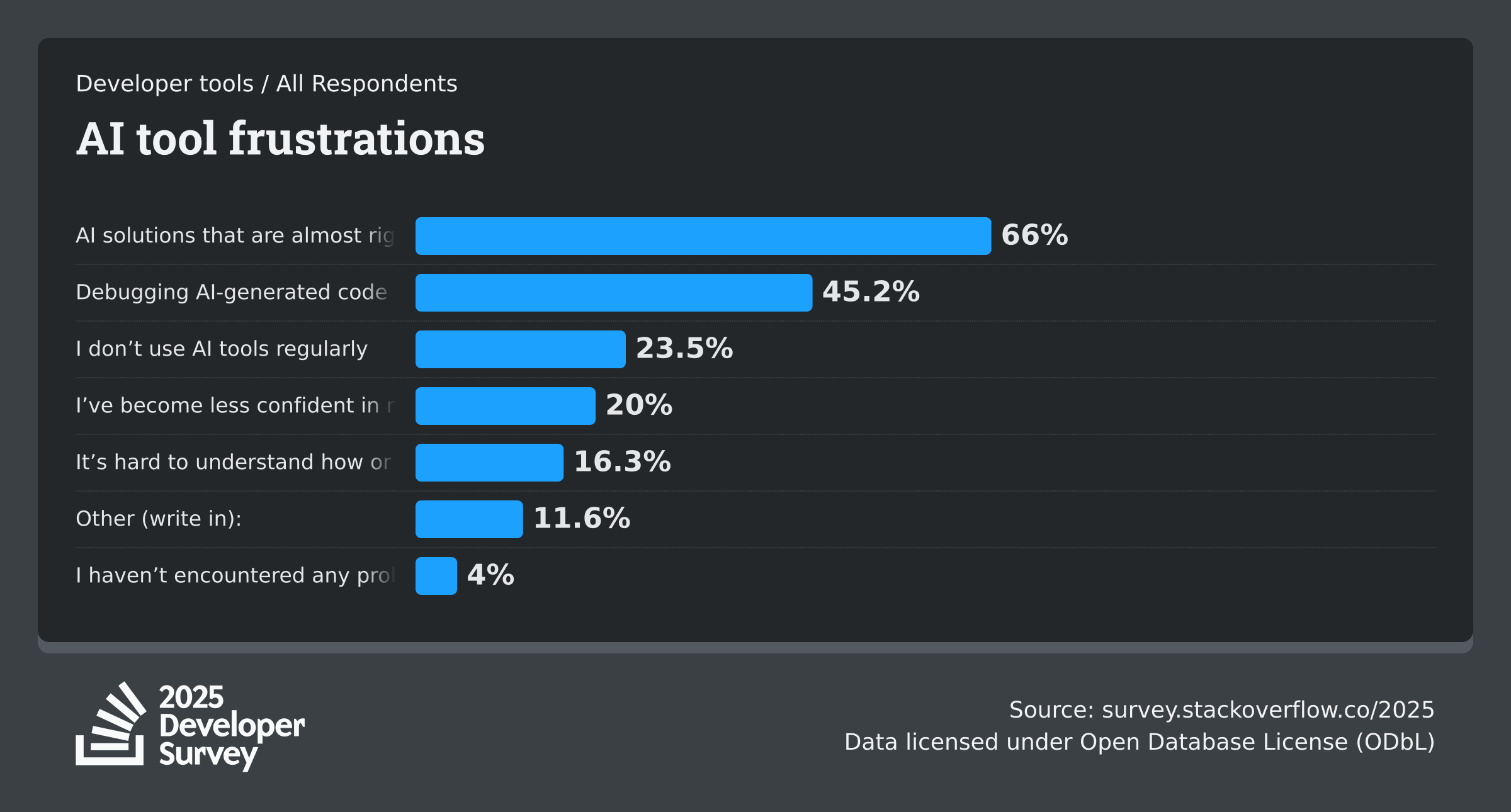

The main frustration is clear: 66% of developers say AI solutions are "almost right, but not quite." That leads to another headache, with 45% saying they spend more time debugging AI-generated code than they expected. One in five say AI tools have actually eroded their confidence in their own problem-solving skills.

AI falls short most often on complex tasks. Only 4.4% of developers say AI tools perform very well with difficult assignments. Meanwhile, 17.6% rate their complex-task performance as very poor, and another 22% call it simply poor.

Developers hold back on critical tasks

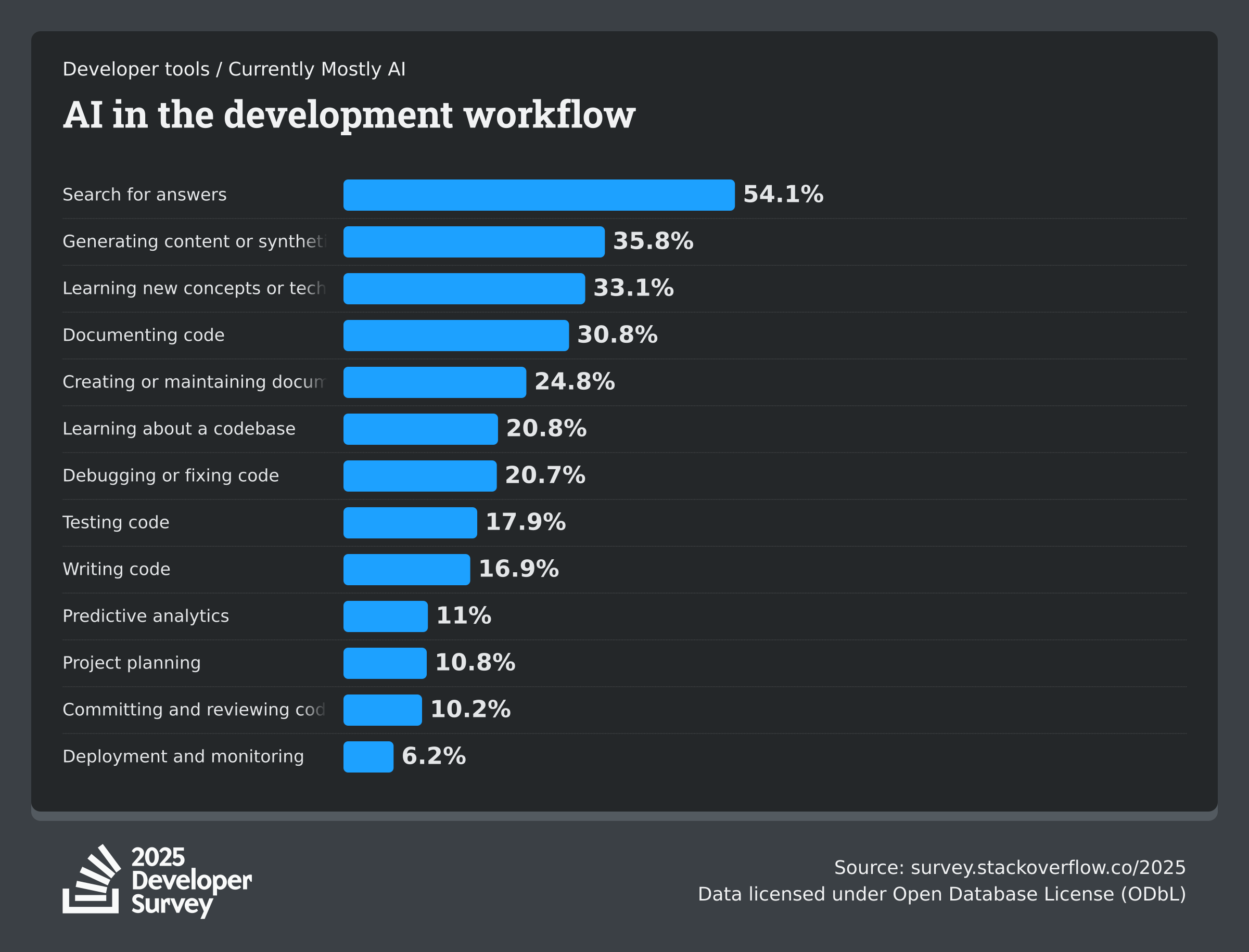

Skepticism spikes around system-critical work. According to the survey, 76% of developers do not plan to use AI for deployment or monitoring. Project planning is similar, with 69% staying away. Many are also reluctant to use AI for committing or reviewing code.

Instead, developers are most open to AI for less critical tasks: searching the web for answers, generating synthetic content, documenting code, maintaining documentation, or learning a new codebase.

AI agents still waiting for their moment

AI agents haven't gone mainstream. More than half of developers (52%) either skip agents altogether or prefer simpler AI tools. Some 38% don't plan to integrate agents at all, and just 14% use them daily.

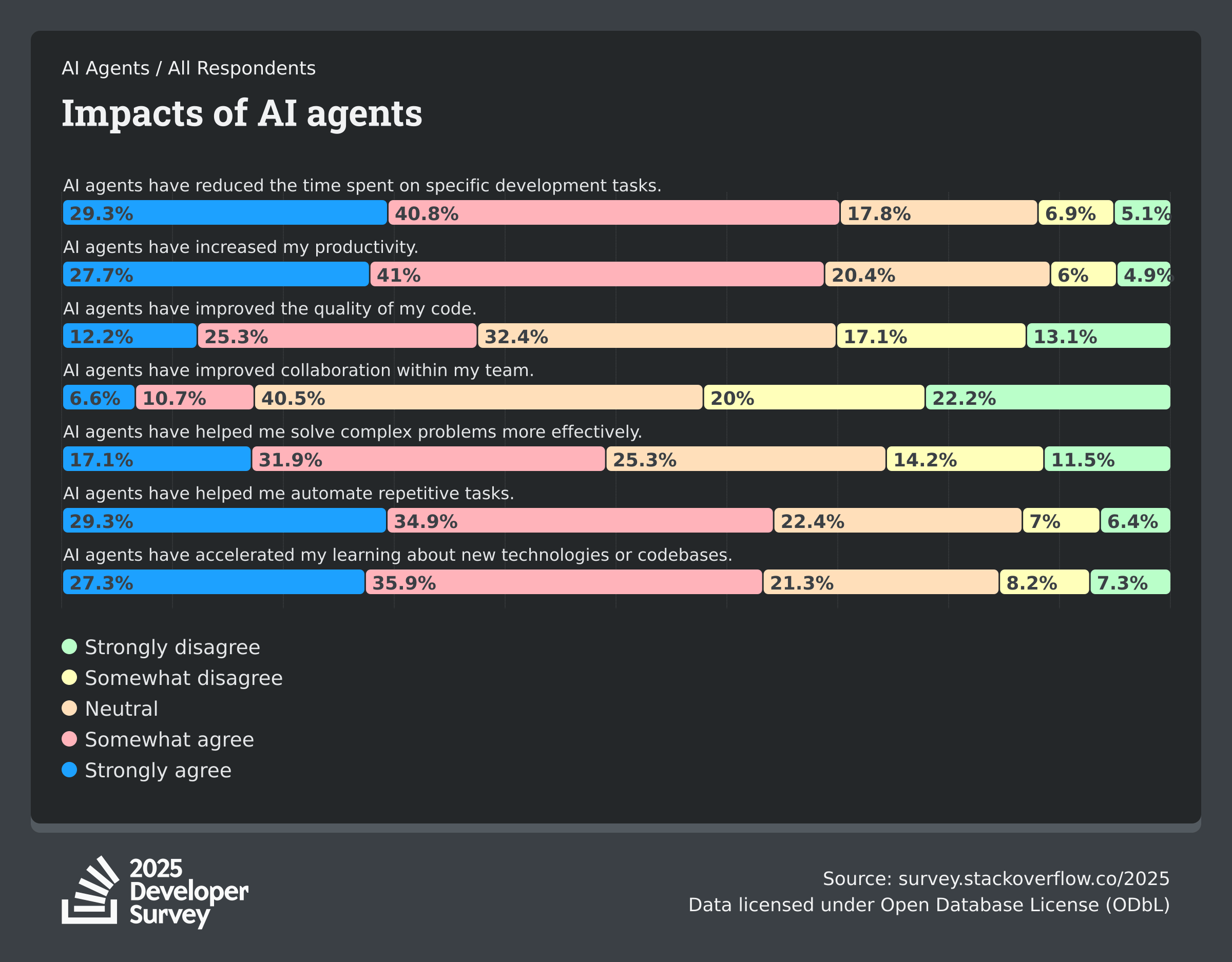

Those who do use agents report some gains: 70% say agents save them time on certain tasks, and 69% say they've boosted productivity. But only 17% see team collaboration improving.

Concerns run deep: 87% worry about the accuracy of AI agent output, and 81% have security and privacy concerns. More than half say platform costs keep them from using some AI tools.

When it matters, developers still look to people. Three in four would ask a human for help if they didn't trust an AI answer, and 62% would seek human advice on ethical or safety issues, or simply to fully understand something.

"Vibe coding" (letting AI and intuition guide the process) hasn't caught on: 72% don't practice it, another 5% flat-out reject it, and only 12% say yes.

A few big names dominate the AI tool landscape: 82% of developers who use AI agents rely on ChatGPT, followed by GitHub Copilot (68%) and Google Gemini (47%). Claude Code is used by 41%, Microsoft Copilot by 31%. Ollama leads orchestration tools at 51%, trailed by LangChain at 33%. For monitoring and security, Grafana and Prometheus are used by 43%, with Sentry at 32%.

More than half of developers (52%) say AI tools and agents make them more productive. Some 16% say these tools have fundamentally changed their work, while another 35% report "some" change.
