Nvidia's Rubin CPX could force AMD back to the drawing board

Nvidia has introduced the Rubin CPX, an accelerator built specifically for the "prefill" stage of AI inference. According to a new report from SemiAnalysis, the move could lock in Nvidia’s lead and force rivals like AMD to rethink their roadmaps.

The key idea is "disaggregated serving" - splitting the two main phases of inference across different, specialized hardware. Prefill and decode have very different requirements, and running them on the same GPU wastes resources. SemiAnalysis argues that Nvidia’s new approach could be a "game changer" and widen the gap between Nvidia and competitors working on inference-specific chips.

Why inference is inefficient

Inference in large language models happens in two main stages. The prefill phase - processing the entire prompt to produce the first token - is compute-heavy but needs little memory bandwidth. The decode phase - generating every following token, one at a time - is the opposite: it stresses memory bandwidth but uses far less raw compute.
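A rough way to see this difference is to count how many floating-point operations each phase performs per byte of model weights it reads. The sketch below is a back-of-the-envelope illustration, not a figure from the SemiAnalysis report: it assumes a dense model in which every parameter contributes one multiply-add (2 FLOPs) per token, weights stored in FP4 (0.5 bytes each), a hypothetical 4,096-token prompt, and a single request with no batching. It ignores attention and KV-cache traffic.

```python
def arithmetic_intensity(tokens_per_pass: int, bytes_per_param: float) -> float:
    """FLOPs performed per byte of weights read in one forward pass.

    Assumption: each parameter is read once per pass and contributes
    one multiply-add (2 FLOPs) for every token processed in that pass.
    """
    flops_per_param = 2 * tokens_per_pass
    return flops_per_param / bytes_per_param


PROMPT_TOKENS = 4096  # hypothetical prompt length, chosen for illustration
FP4_BYTES = 0.5       # 4-bit weights

# Prefill processes the whole prompt in one pass over the weights.
prefill_intensity = arithmetic_intensity(PROMPT_TOKENS, FP4_BYTES)

# Decode processes one new token per pass, so weights are re-read every step.
decode_intensity = arithmetic_intensity(1, FP4_BYTES)

print(f"prefill: {prefill_intensity:.0f} FLOPs per weight byte")
print(f"decode:  {decode_intensity:.0f} FLOPs per weight byte")
```

Under these simplified assumptions, prefill does thousands of times more arithmetic per byte fetched than unbatched decode. Batching many requests together raises decode's ratio in practice, but the basic asymmetry remains.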

SemiAnalysis says it makes little sense to run prefill on today’s high-end GPUs loaded with expensive high-bandwidth memory (HBM). Raw compute throughput (FLOPS) is what matters most in prefill, while the pricey memory bandwidth often goes unused. That’s the inefficiency Rubin CPX is designed to address.

Rubin CPX: less memory, more efficiency

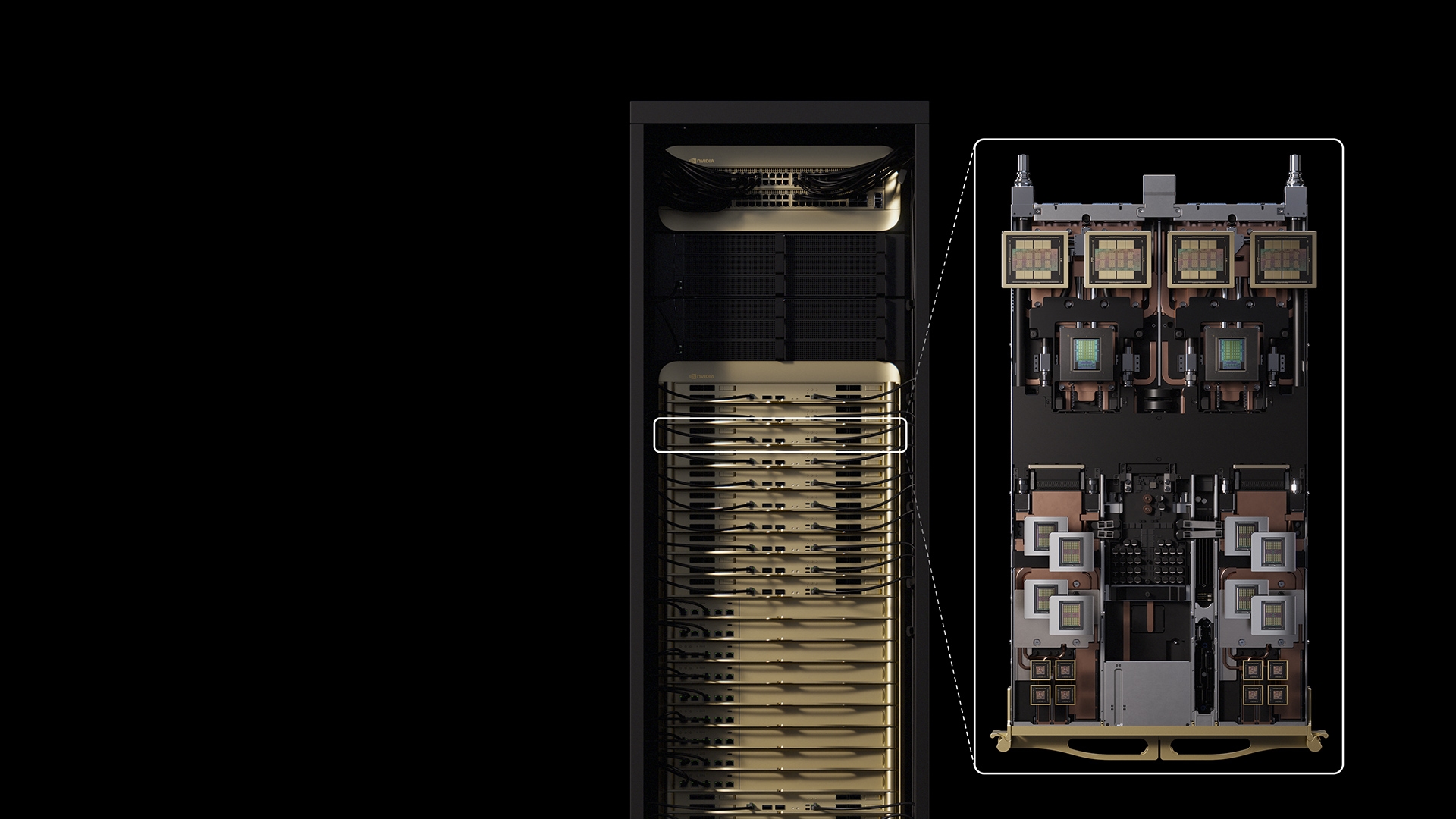

Rubin CPX is tuned for dense compute with far less memory bandwidth than a general-purpose GPU. It offers 20 PFLOPS of FP4 compute paired with 128 GB of GDDR7 memory and 2 TB/s of memory bandwidth. The upcoming standard Rubin R200 GPU, by comparison, is expected to deliver 33.3 PFLOPS with 288 GB of HBM4 and 20.5 TB/s of memory bandwidth.
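The quoted specifications can be turned into a compute-to-bandwidth ratio, which shows how far each chip leans toward compute. This is a minimal sketch using only the peak numbers above; it assumes the R200's 33.3 PFLOPS figure is quoted at a precision comparable to the CPX's FP4 figure, which the text does not state explicitly. The dictionary layout and function name are illustrative.

```python
PFLOPS = 1e15  # floating-point operations per second
TBPS = 1e12    # bytes per second

# Peak figures as quoted in the article.
chips = {
    "Rubin CPX": {"flops": 20 * PFLOPS, "bandwidth": 2 * TBPS},
    "Rubin R200": {"flops": 33.3 * PFLOPS, "bandwidth": 20.5 * TBPS},
}


def flops_per_byte(chip: dict) -> float:
    """Peak FLOPs available for every byte of memory bandwidth."""
    return chip["flops"] / chip["bandwidth"]


for name, spec in chips.items():
    print(f"{name}: {flops_per_byte(spec):,.0f} FLOPs per byte")

ratio = flops_per_byte(chips["Rubin CPX"]) / flops_per_byte(chips["Rubin R200"])
print(f"CPX is about {ratio:.1f}x more compute-heavy per byte of bandwidth")
```

On these peak numbers, Rubin CPX has roughly 10,000 FLOPs available per byte of bandwidth against about 1,600 for R200, a balance that suits compute-bound prefill far better than bandwidth-bound decode.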

By dropping HBM in favor of cheaper GDDR7 and cutting back on advanced packaging, SemiAnalysis estimates Rubin CPX costs only around one-quarter as much to manufacture as R200. Nvidia also removed its high-speed NVLink interconnect in favor of PCIe Gen 6, which is considered sufficient for pipelined prefill tasks and further reduces cost.
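To illustrate what that cost estimate implies, the sketch below normalizes R200's manufacturing cost to 1.0 and takes Rubin CPX at 0.25, per the SemiAnalysis estimate. It reuses the peak figures quoted in this article (20 and 33.3 PFLOPS, 2 and 20.5 TB/s). These are relative manufacturing costs, not sale prices, and peak rather than achieved throughput, so the result is only indicative.

```python
# Relative manufacturing cost, with R200 normalized to 1.0 (assumption:
# "around one-quarter" is taken as exactly 0.25 for this illustration).
chips = {
    "Rubin CPX": {"pflops": 20.0, "tb_per_s": 2.0, "rel_cost": 0.25},
    "Rubin R200": {"pflops": 33.3, "tb_per_s": 20.5, "rel_cost": 1.0},
}


def per_cost(chip: dict, metric: str) -> float:
    """Amount of a peak resource obtained per unit of relative cost."""
    return chip[metric] / chip["rel_cost"]


cpx, r200 = chips["Rubin CPX"], chips["Rubin R200"]

compute_advantage = per_cost(cpx, "pflops") / per_cost(r200, "pflops")
bandwidth_advantage = per_cost(r200, "tb_per_s") / per_cost(cpx, "tb_per_s")

print(f"CPX compute per unit cost:    {compute_advantage:.1f}x that of R200")
print(f"R200 bandwidth per unit cost: {bandwidth_advantage:.1f}x that of CPX")
```

Under these assumptions, CPX delivers about 2.4 times the peak compute per unit of manufacturing cost, while R200 delivers about 2.6 times the bandwidth per unit of cost. That is the economic argument for sending prefill to one chip and decode to the other.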

Rivals under pressure

SemiAnalysis warns that Nvidia’s pivot to disaggregated serving puts competitors in a tough spot. AMD was on the verge of catching up to Nvidia’s rack-scale Rubin architecture with its MI400 system. Without a dedicated prefill chip, however, AMD would be shipping hardware with higher total cost of ownership for inference workloads. And with the R200’s memory bandwidth now raised to 20.5 TB/s, one of AMD’s advantages in MI400 is already gone.

Big players like Google, AWS, and Meta are better placed to design their own custom prefill chips, but doing so adds yet another delay to their efforts to reach parity with Nvidia. SemiAnalysis suggests Nvidia’s strategy - innovating not just at the chip level but at full system scale - now sets the direction for the entire market. Others will either adapt to Nvidia’s playbook or risk falling further behind.
